Tutorial: Shaping Host System Metrics

This tutorial models several metrics found in the Host monitoring app. While not as comprehensive as the entire app, it covers the process of working with metric data in a way you can follow in your workspace.

To follow this tutorial, you need the following:

One or more hosts sending data to the app

If you don’t already have the Host monitoring app installed, see Observe apps for more about installing apps in your workspace.

To understand more about OPAL, read Introduction to Metrics for metric basics and OPAL — Observe Processing and Analysis Language.

Identifying the Data of Interest

The Host Monitoring app ingests observations into the Host data stream dataset. For this example, you use it to create two metrics, free disk space and percent disk used. Telegraf forwards these metrics from the source hosts.

Open a new worksheet for the Host dataset.

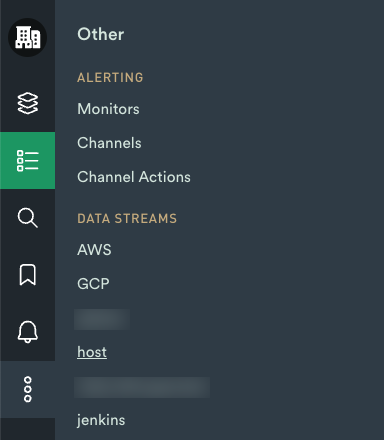

In the left menu, under Other, select the Host data stream to open the dataset.

Figure 1 - Opening the “host” data stream.

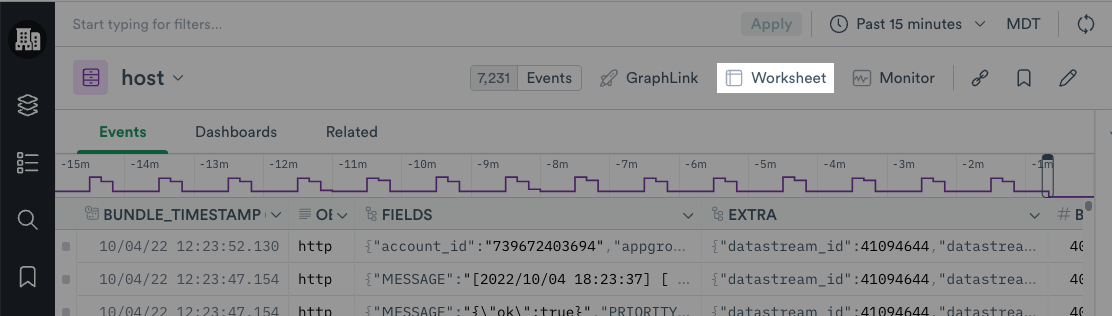

Click Worksheet to open it in a new worksheet.

Figure 2 - Opening a worksheet for a dataset.

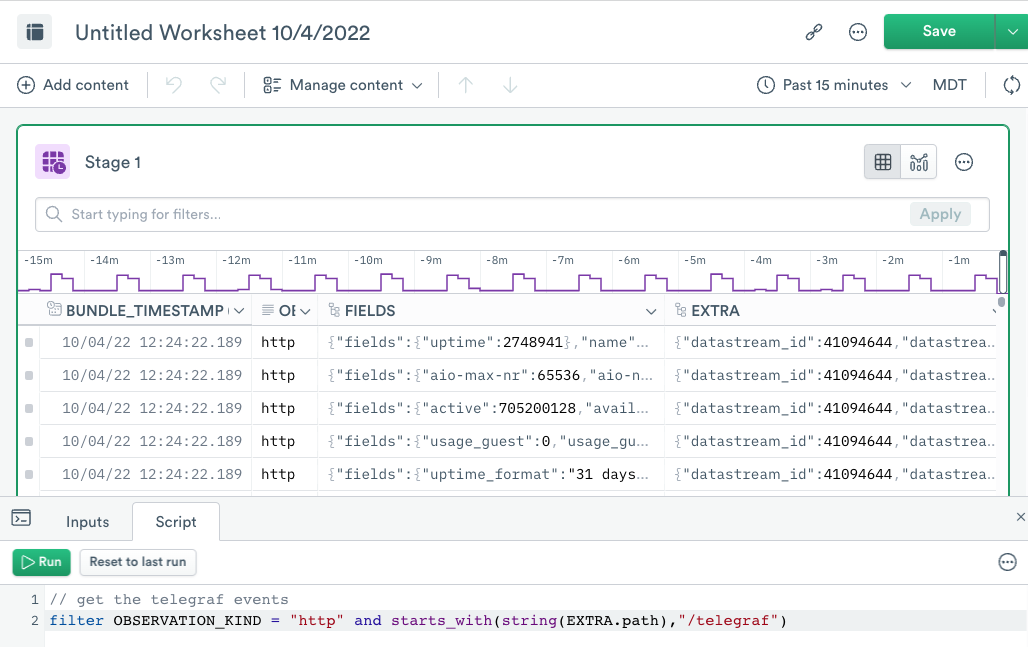

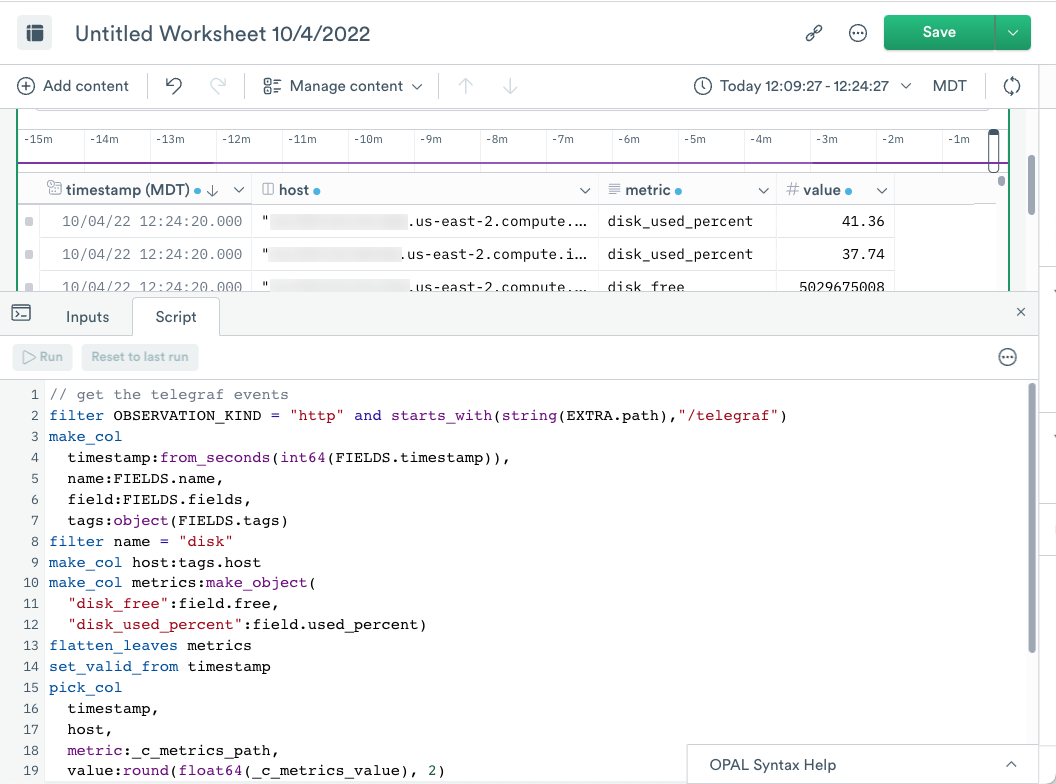

In the OPAL console, use filter to keep only the Telegraf events. Telegraf data has an OBSERVATION_KIND of http and an EXTRA.path value of /telegraf. To see only these events, filter for those strings in the respective fields:

// get the telegraf events

filter OBSERVATION_KIND = "http" and starts_with(string(EXTRA.path),"/telegraf")

Figure 3 - Editing in the OPAL console.
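To make the filter condition concrete, here is a minimal Python sketch of the same predicate applied to in-memory records. It is an illustration only, not how Observe evaluates OPAL. The sample records, the "filelog" kind, and the helper name is_telegraf_event are hypothetical.

```python
# Hypothetical sample observations; real ones carry many more fields.
observations = [
    {"OBSERVATION_KIND": "http", "EXTRA": {"path": "/telegraf"}},
    {"OBSERVATION_KIND": "http", "EXTRA": {"path": "/other"}},
    {"OBSERVATION_KIND": "filelog", "EXTRA": {}},
]


def is_telegraf_event(obs):
    """Mirror: OBSERVATION_KIND = "http" and starts_with(string(EXTRA.path), "/telegraf")."""
    path = obs.get("EXTRA", {}).get("path")
    # Assumption: a record with no path never matches.
    if path is None:
        return False
    return obs.get("OBSERVATION_KIND") == "http" and str(path).startswith("/telegraf")


# Keep only the records that satisfy both conditions.
telegraf_events = [obs for obs in observations if is_telegraf_event(obs)]
```

Only the first sample record passes, because it is the only one that satisfies both conditions.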

Extract fields containing metric details with make_col:

The timestamp field contains the date and time of the observation.

The name field describes a category of metrics, such as disk or cpu.

The fields object contains a JSON object with all the metrics for a category.

The tags object contains a JSON object with additional metadata, such as the hostname.

make_col timestamp:from_seconds(int64(FIELDS.timestamp)), name:FIELDS.name, field:FIELDS.fields, tags:object(FIELDS.tags)

The OPAL script converts the timestamp to an integer with int64(), then converts that count of seconds to a timestamp with from_seconds(). It also converts tags from the original JSON into an object with object().
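The conversions in this step can be sketched in plain Python. This is an analogy under stated assumptions, not Observe's implementation: the sample payload and the extract_fields helper are hypothetical, the timestamp is assumed to be whole epoch seconds, and the JSON-parsing branch exists only so the sketch also accepts tags as text.

```python
import json
from datetime import datetime, timezone


def extract_fields(fields):
    """Return the four columns the make_col step produces, as a dict."""
    tags = fields["tags"]
    return {
        # int64() then from_seconds(): whole epoch seconds -> timestamp
        "timestamp": datetime.fromtimestamp(int(fields["timestamp"]), tz=timezone.utc),
        "name": fields["name"],
        "field": fields["fields"],
        # object(): treat tags as a key/value object
        "tags": json.loads(tags) if isinstance(tags, str) else tags,
    }


# Hypothetical FIELDS payload for one Telegraf disk observation.
sample = {
    "timestamp": "1600000000",
    "name": "disk",
    "fields": {"free": 21536493568, "used_percent": 46.36216487019794},
    "tags": '{"host": "web-01"}',
}
row = extract_fields(sample)
```

Converting to an integer first matters because the raw value may arrive as text, and the seconds-to-timestamp conversion needs a number.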

Click Run to execute your OPAL script.

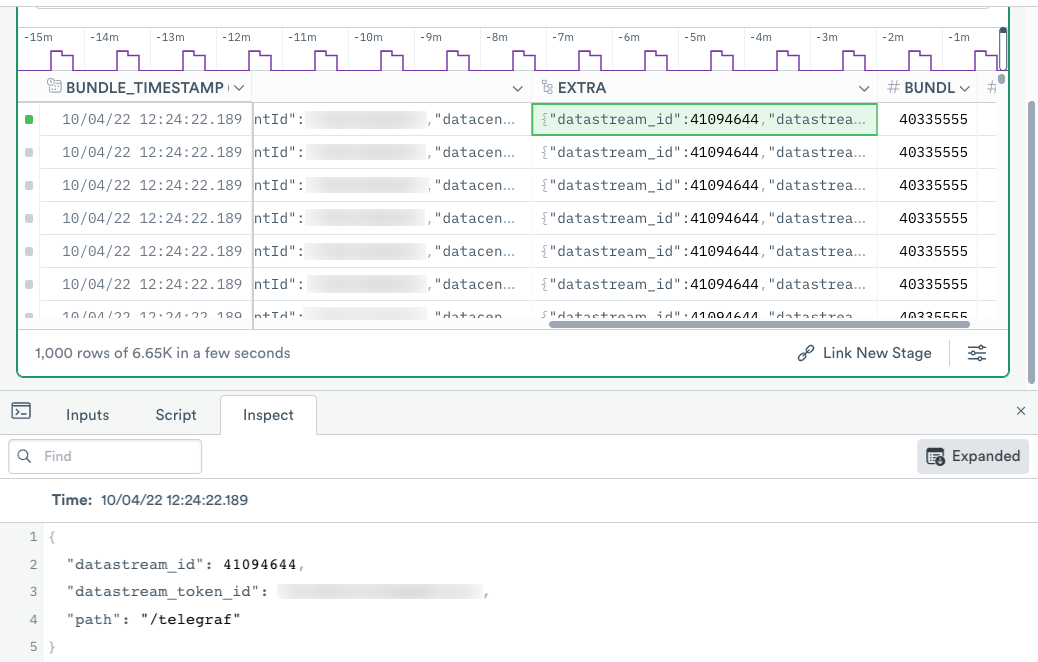

Scroll to the right and select a cell in the EXTRA column.

To view the entire contents, right-click and select Inspect Raw Values. Now that you have filtered the data, every EXTRA value contains "path": "/telegraf", indicating these observations contain the Telegraf host metrics.

Figure 4 - Inspecting a cell value.

Expand to copy these OPAL statements

// Filter to only the Telegraf events

filter OBSERVATION_KIND = "http" and starts_with(string(EXTRA.path),"/telegraf")

// Extract fields

make_col timestamp:from_seconds(int64(FIELDS.timestamp)),

name:FIELDS.name,

field:FIELDS.fields,

tags:object(FIELDS.tags)

Note

Sometimes, you may want to save or publish these events as a separate dataset. This allows you to use them for future operations without repeating the same filtering steps. For this tutorial, continue in the same worksheet and publish it later.

Modeling Your Metrics

This Telegraf data contains many different kinds of metrics. To start, model two disk metrics, free space and percent used. The original JSON object calls these free and used_percent.

{

"free": 21536493568,

"inodes_free": 2247789,

"inodes_total": 2611200,

"inodes_used": 363411,

"total": 42091868160,

"used": 18615189504,

"used_percent": 46.36216487019794

}

To model these two metrics, use these steps:

1. Filter to see only the disk metrics. Both metrics of interest belong to the same category, which has the name “disk”.

filter name = "disk"

2. Extract the hostname from tags into a new field. This allows you to associate each metric with the originating host.

make_col host:tags.host

The tags field also contains other metadata, such as the datacenter location of the host and the file system type. For now, track only the hostname.

3. Use make_object to create an object with the desired metric data as key/value pairs in a new field named metrics. This intermediate step collects the data for both metrics in one place. It also allows you to label them with more useful names.

make_col metrics:make_object("disk_free":field.free, "disk_used_percent":field.used_percent)

4. Convert to a metric format with flatten_leaves. This verb creates a new event from each key/value pair in the object, so each event contains exactly one metric, which is the form a metrics dataset requires. You rename the automatically generated fields in a later step.

flatten_leaves metrics

5. Set the timestamp to the one provided in the data. set_valid_from is a metadata operation that designates a field as the desired timestamp. By default, Observe assigns timestamps based on the ingest time. This verb allows you to use the one provided by the original source.

set_valid_from timestamp

6. With these steps completed, keep only the fields you need. pick_col drops anything not specified from the dataset schema. However, you must always have a timestamp field. pick_col can also rename or modify the contents of a field at the same time. In this case, rename the generated _c_metrics_path and _c_metrics_value fields to metric and value. This naming convention places metric names in a metric field and metric values in a value field, allowing Observe to discover individual metrics in a metrics dataset automatically. Since metric values must be floating point values, use float64() to convert them and round() to make them easier to read.

pick_col

timestamp,

host,

metric:_c_metrics_path,

value:round(float64(_c_metrics_value), 2)

Figure 5 - Metric format with one row per metric.

7. Register this dataset as a metrics dataset. Use interface so Observe can recognize this dataset as containing metrics in the expected form. Since this dataset contains the expected metric and value fields, Observe automatically discovers metrics in the dataset.

interface "metric"
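The shaping steps above can be sketched in plain Python to show how one wide disk event becomes one row per metric. This is a rough analogy, not Observe's implementation: the function names, the dotted-path behavior for nested objects, and the sample rows are assumptions made for illustration.

```python
def flatten_leaves(obj, prefix=""):
    """Yield (path, value) for every leaf in a nested dict, using dotted paths."""
    for key, value in obj.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            yield from flatten_leaves(value, path)
        else:
            yield path, value


def shape_disk_metrics(rows):
    """Turn extracted Telegraf rows into one output row per metric."""
    shaped = []
    for row in rows:
        if row["name"] != "disk":              # filter name = "disk"
            continue
        host = row["tags"].get("host")         # make_col host:tags.host
        metrics = {                            # make_col metrics:make_object(...)
            "disk_free": row["field"]["free"],
            "disk_used_percent": row["field"]["used_percent"],
        }
        for path, value in flatten_leaves(metrics):   # flatten_leaves metrics
            shaped.append({                           # pick_col with renames
                "timestamp": row["timestamp"],
                "host": host,
                "metric": path,
                "value": round(float(value), 2),
            })
    return shaped


# Hypothetical input rows; the disk values come from the JSON example above.
rows = [
    {"timestamp": 1600000000, "name": "disk", "tags": {"host": "web-01"},
     "field": {"free": 21536493568, "used_percent": 46.36216487019794}},
    {"timestamp": 1600000000, "name": "cpu", "tags": {"host": "web-01"},
     "field": {"usage_idle": 97.5}},
]
metric_rows = shape_disk_metrics(rows)
```

The cpu row is dropped by the filter, and the single disk row yields two metric rows, one for disk_free and one for disk_used_percent.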

Expand to copy these OPAL statements

filter name = "disk"

make_col host:tags.host

make_col metrics:make_object(

"disk_free":field.free,

"disk_used_percent":field.used_percent)

flatten_leaves metrics

set_valid_from timestamp

pick_col

timestamp,

host,

metric:_c_metrics_path,

value:round(float64(_c_metrics_value), 2)

interface "metric"

Expand to copy the complete OPAL script

// Filter to only the Telegraf events

filter OBSERVATION_KIND = "http" and starts_with(string(EXTRA.path),"/telegraf")

// Extract fields

make_col timestamp:from_seconds(int64(FIELDS.timestamp)),

name:FIELDS.name,

field:FIELDS.fields,

tags:object(FIELDS.tags)

filter name = "disk"

make_col host:tags.host

make_col metrics:make_object(

"disk_free":field.free,

"disk_used_percent":field.used_percent)

flatten_leaves metrics

set_valid_from options(max_time_diff:2m), timestamp

pick_col

timestamp,

host,

metric:_c_metrics_path,

value:round(float64(_c_metrics_value), 2)

interface "metric"
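Before publishing, it can help to reason about what a well-formed metric row looks like. The following Python sketch checks the three properties this tutorial relies on: a timestamp, a metric name in a metric field, and a floating point value in a value field. The helper metric_row_problems and the sample rows are hypothetical, and the check is not part of Observe.

```python
from datetime import datetime, timezone


def metric_row_problems(row):
    """Return the reasons a row does not match the metric/value layout (empty if it does)."""
    problems = []
    if not isinstance(row.get("timestamp"), datetime):
        problems.append("timestamp missing or not a datetime")
    metric = row.get("metric")
    if not isinstance(metric, str) or not metric:
        problems.append("metric must be a non-empty string")
    if not isinstance(row.get("value"), float):
        problems.append("value must be a float")
    return problems


# Hypothetical rows: the first is well formed, the second has an integer value.
ts = datetime(2020, 9, 13, 12, 26, 40, tzinfo=timezone.utc)
good = {"timestamp": ts, "host": "web-01", "metric": "disk_free", "value": 21536493568.0}
bad = {"timestamp": ts, "host": "web-01", "metric": "disk_free", "value": 21536493568}

good_problems = metric_row_problems(good)
bad_problems = metric_row_problems(bad)
```

The second row fails only the value check, which is the case the float64() conversion in the script guards against.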

Creating a Metric Dataset

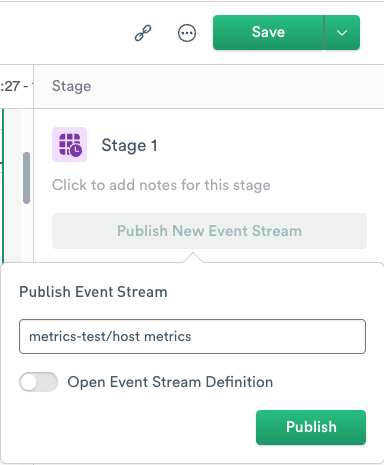

After you complete the metrics shaping, publish this dataset so its metrics can be used outside this worksheet.

In the right menu, click Publish New Event Stream and add a dataset name. You can name it metrics-test/host metrics to create it in a package named metrics-test. If your right menu displays details about your data instead of Publish New Event Stream, click the X to deselect any columns or cells in the data table.

Figure 6 - Publishing a new event stream.

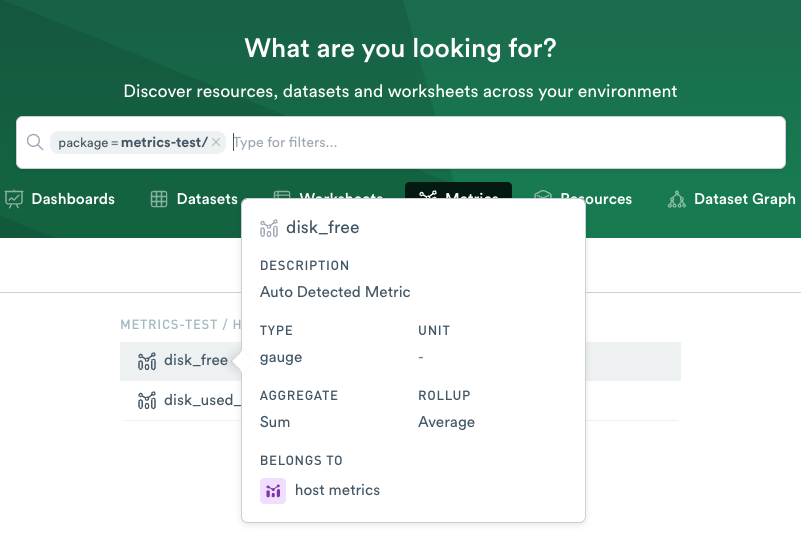

Viewing the Modeled Metrics

When you publish a new metrics dataset, Observe automatically identifies any metrics it contains. To see details of an individual metric, hover over the name:

Figure 7 - Inspecting the disk_free metric in the Explore tab.

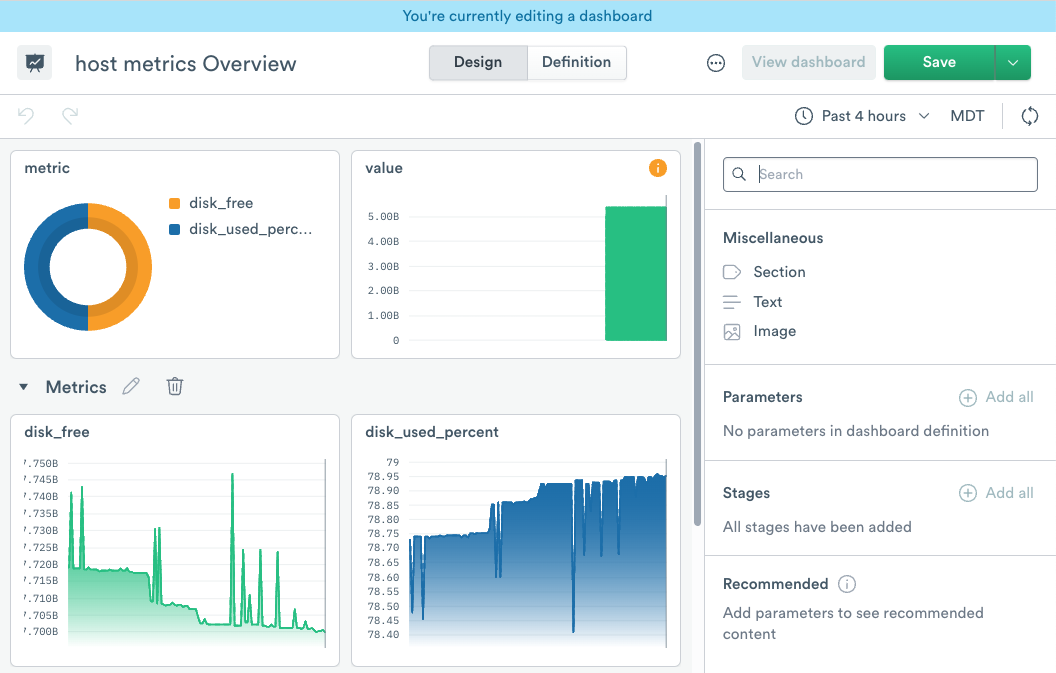

For additional visualization options, create a dashboard for the metrics in this dataset:

From the Explore tab, click Dashboards to view a list of existing dashboards.

Click New Dashboard and then Start from a dataset.

In the Dataset Search field, search for the dataset you just created, host metrics.

Select the dataset to create an Overview dashboard for this dataset.

Figure 8 - Editing the default Overview dashboard.

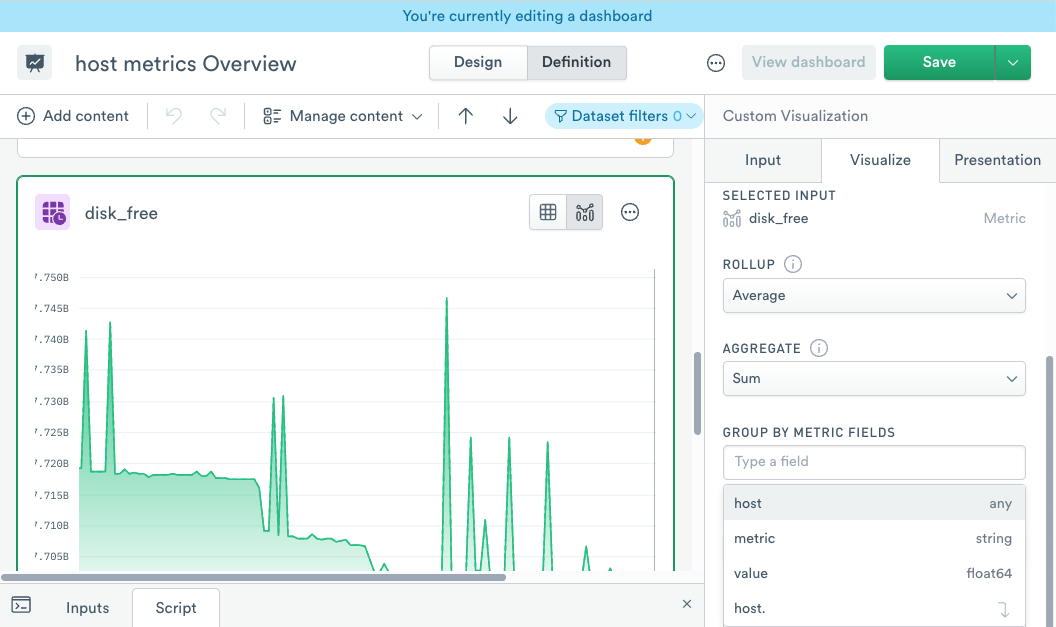

Observe automatically populates dashboard cards based on the dataset definition. For this example, the dashboard includes cards for the disk_free and disk_used_percent metrics, but each card shows only one value for all hosts sending data. To show the values by host, edit the dashboard definition to change the Group by field:

From the Dashboard view, click Definition.

Select the metric card you want to modify, for example, disk_free.

In the right menu, select the Visualize tab.

By default, the Group By Metric Fields section has no entry. Click to add host.

Click Apply to save your changes.

The disk_free card now shows the metric values for each host.

Figure 9 - Updating the Group By field for a card.

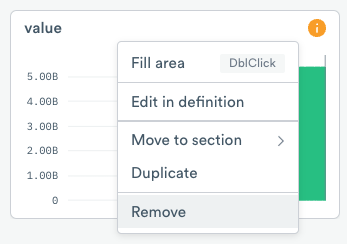

Change the disk_used_percent card the same way. You may also update the similar Group By field for the metric and value cards. Click Design to return to the Design view and see the revised cards. You may move or delete cards if you like, for example, the “value” card:

Figure 10 - Removing a card from a dashboard.

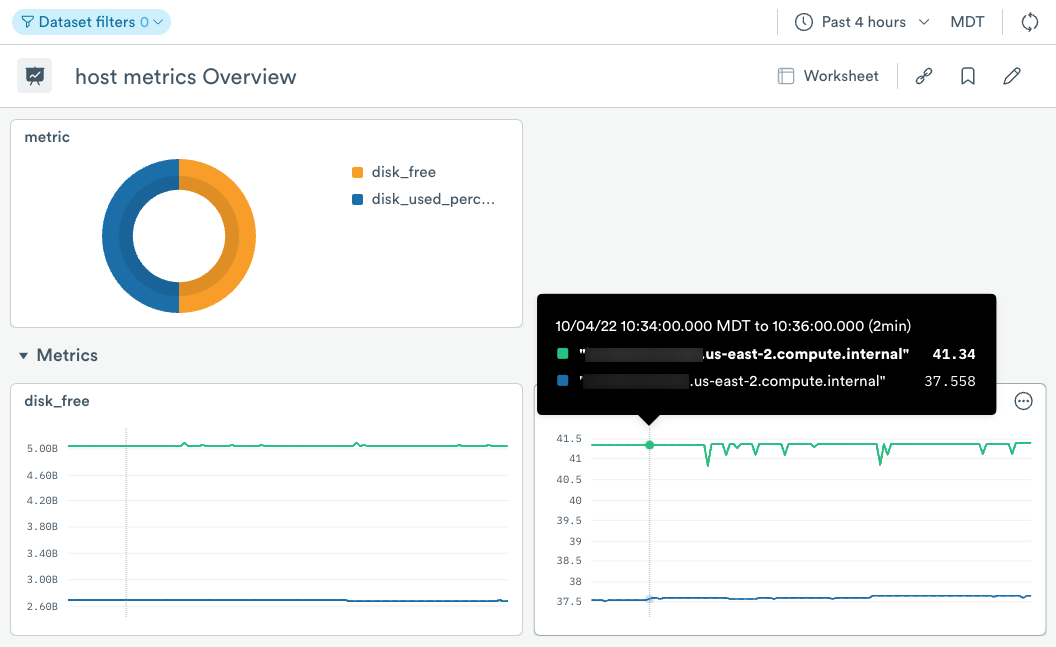

When you have finished, click Save to save your dashboard changes.

To view the updated dashboard, click View dashboard.

Figure 11 - Updated dashboard.
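To illustrate what adding host to the Group By field changes, here is a small Python sketch that contrasts one combined value with one value per host. The sample values are hypothetical, and averaging is an assumption chosen for the example; the dashboard may use a different aggregate.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical disk_free samples from two hosts.
samples = [
    {"host": "web-01", "metric": "disk_free", "value": 20.0},
    {"host": "web-01", "metric": "disk_free", "value": 22.0},
    {"host": "web-02", "metric": "disk_free", "value": 40.0},
]

# No grouping: one value for all hosts combined.
overall = mean(s["value"] for s in samples)

# Group by host: one value per host.
by_host = defaultdict(list)
for s in samples:
    by_host[s["host"]].append(s["value"])
per_host = {host: mean(values) for host, values in by_host.items()}
```

Without grouping, the two hosts blend into a single number that describes neither of them. Grouping by host keeps them apart, which is what the updated card shows.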