AWS-at-scale data ingestion

This document describes how you can get your AWS data into Observe from an AWS-at-scale environment, with multiple accounts across multiple regions, resulting in potentially hundreds of separate account-region combinations.

AWS-at-scale data ingestion architecture

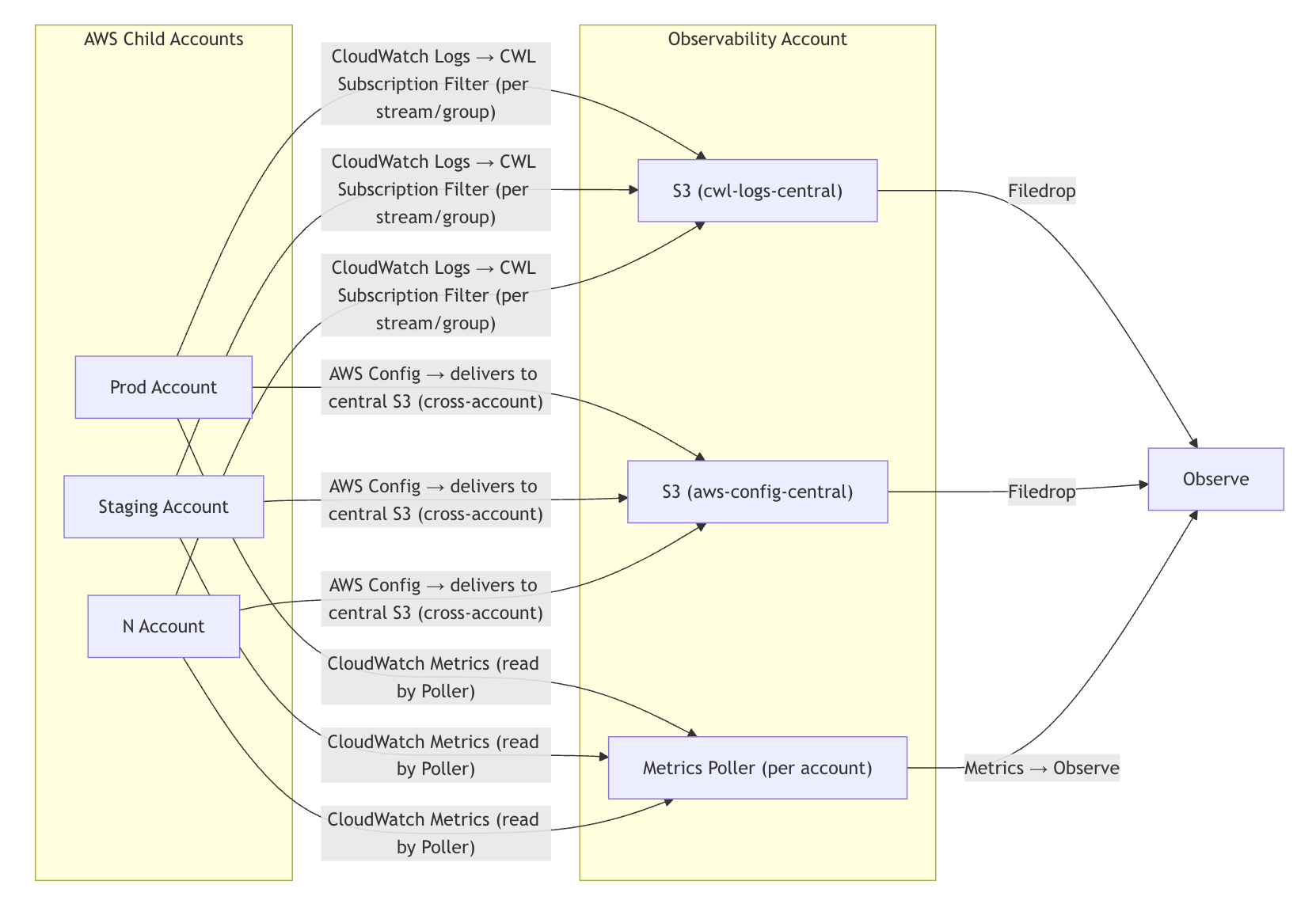

This architecture has all of your AWS child accounts write to a single Observability account:

Write your CloudWatch logs and AWS Config data to central S3 buckets in one Observability account. Then use CloudFormation or Terraform to push the data from those central buckets to Observe via Filedrop.

Use Terraform to pull CloudWatch metrics via the Observe poller. Use one poller for each AWS account.
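To see how quickly this grows, the following Python sketch counts what a rollout has to wire up: every account-region pair feeds logs and config into the central buckets, while metrics need one poller per account. The account IDs and region list are hypothetical, and how a single poller covers several regions is not modeled here.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class IngestionPlan:
    account_region_pairs: int  # sources feeding logs and config into the central buckets
    pollers: int  # CloudWatch metrics pollers, one per account


def plan_ingestion(accounts: list[str], regions: list[str]) -> IngestionPlan:
    """Count the sources an AWS-at-scale rollout has to wire up."""
    pairs = set(product(accounts, regions))  # duplicate accounts or regions collapse
    return IngestionPlan(account_region_pairs=len(pairs), pollers=len(set(accounts)))


# Hypothetical estate: 40 accounts across 5 regions.
accounts = [f"acct-{i:02d}" for i in range(40)]
regions = ["us-east-1", "us-east-2", "us-west-2", "eu-west-1", "ap-southeast-2"]
print(plan_ingestion(accounts, regions))
# IngestionPlan(account_region_pairs=200, pollers=40)
```

Even a modest estate reaches hundreds of log and config sources, which is why they are consolidated into central buckets rather than connected to Observe one by one.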


Terraform and CloudFormation resources

View the Terraform and CloudFormation resources you will need to set up this data ingestion:

Terraform

CloudWatch Logs, AWS Config: https://registry.terraform.io/modules/observeinc/collection/aws/latest/submodules/stack

CloudWatch Metrics (poller): https://registry.terraform.io/providers/observeinc/observe/latest/docs/resources/poller#cloudwatch-metrics-poller

CloudFormation

Get CloudWatch logs into Observe

Consolidate your CloudWatch logs into one S3 bucket in the Observability account. Observe can then ingest the logs from that bucket using the Observe Forwarder, deployed in the same AWS account.

Perform the following tasks to set this up:

Create a KMS-encrypted S3 bucket, such as cwl-logs-central-<org>, with the lifecycle rules you need, for example 30–90 days in hot storage before transitioning to the Glacier or Glacier Deep Archive storage classes. Check whether any KMS key policies in the Observability account deny copying objects out of the S3 bucket when files are sent to Filedrop.

In each source account, connect your CloudWatch Logs subscription filters to a Kinesis Firehose delivery stream in the same account, which delivers to the central S3 bucket through a cross-account role.

In the Observability account, deploy Add Data for AWS (CloudFormation) or the Terraform stack module to read from cwl-logs-central-* and send the data to Filedrop.
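The bucket-side settings from the first two steps can be sketched in Python as plain JSON documents: an S3 lifecycle configuration that moves objects to an archive storage class after the hot period, and a bucket policy that lets Firehose delivery roles in source accounts write into the central bucket. The bucket name, role ARN, and rule ID are hypothetical, and the action list is a commonly used set for Firehose S3 delivery, not something taken from the Observe modules. The delivery roles also need permission to use the bucket's KMS key, which this sketch does not cover.

```python
import json

# Hypothetical names; substitute your own bucket and Firehose delivery roles.
BUCKET = "cwl-logs-central-example"
FIREHOSE_ROLE_ARNS = ["arn:aws:iam::111111111111:role/cwl-firehose-to-central-s3"]


def lifecycle_configuration(hot_days: int = 30, archive_class: str = "GLACIER") -> dict:
    """Keep objects in S3 Standard for hot_days, then transition them to an archive class."""
    if archive_class not in ("GLACIER", "DEEP_ARCHIVE"):
        raise ValueError("archive_class must be GLACIER or DEEP_ARCHIVE")
    if hot_days < 1:
        raise ValueError("hot_days must be at least 1")
    return {
        "Rules": [
            {
                "ID": "cwl-archive-after-hot-period",
                "Filter": {"Prefix": ""},  # empty prefix: the rule covers every object
                "Status": "Enabled",
                "Transitions": [{"Days": hot_days, "StorageClass": archive_class}],
            }
        ]
    }


def firehose_bucket_policy(bucket: str, role_arns: list[str]) -> dict:
    """Let Firehose delivery roles in source accounts write into the central bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCrossAccountFirehoseDelivery",
                "Effect": "Allow",
                "Principal": {"AWS": sorted(set(role_arns))},
                "Action": [
                    "s3:AbortMultipartUpload",
                    "s3:GetBucketLocation",
                    "s3:GetObject",
                    "s3:ListBucket",
                    "s3:ListBucketMultipartUploads",
                    "s3:PutObject",
                ],
                # Bucket-level and object-level actions need both resource forms.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
            }
        ],
    }


print(json.dumps(lifecycle_configuration(hot_days=90, archive_class="DEEP_ARCHIVE"), indent=2))
print(json.dumps(firehose_bucket_policy(BUCKET, FIREHOSE_ROLE_ARNS), indent=2))
```

The two documents are shaped for the S3 PutBucketLifecycleConfiguration and PutBucketPolicy APIs; with boto3, for example, they could be passed as LifecycleConfiguration= to put_bucket_lifecycle_configuration and as Policy=json.dumps(...) to put_bucket_policy.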

Filedrop works best with fewer, larger compressed objects rather than many small files:

Target up to 1 GB per file for optimal ingest.

Each individual cell or field in a file must be at most 16 MB.

Use compression, such as GZIP, to minimize PUT operations and reduce costs.

Balance Firehose buffering (size vs. interval) so you generate fewer, larger objects without delaying data too much.
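The buffering trade-off can be estimated with a short Python sketch. Firehose delivers a batch when either its size hint or its interval hint is reached, whichever comes first, so the object rate depends on which threshold your throughput hits. The sketch also writes gzip-compressed newline-delimited JSON with a per-field size check against the 16 MB limit. The throughput figures are made up, treating 16 MB as 16 MiB and measuring a field by its JSON-encoded size are assumptions, and the rate is an approximation for a single steady stream.

```python
import gzip
import json

# Assumption: the 16 MB Filedrop field limit is treated as 16 MiB.
MAX_FIELD_BYTES = 16 * 1024 * 1024


def objects_per_hour(throughput_mib_s: float, buffer_mib: float, interval_s: float) -> float:
    """Approximate objects per hour for one steady Firehose stream.

    Firehose delivers when the size hint or the interval hint is reached,
    whichever comes first.
    """
    if throughput_mib_s <= 0:
        return 0.0  # no incoming data, nothing to deliver
    seconds_to_fill = buffer_mib / throughput_mib_s
    return 3600 / min(seconds_to_fill, interval_s)


def gzip_ndjson(records: list[dict], max_field_bytes: int = MAX_FIELD_BYTES) -> bytes:
    """Gzip newline-delimited JSON, rejecting any field over the size limit."""
    lines = []
    for record in records:
        for key, value in record.items():
            # The JSON-encoded size approximates how large the field is in the file.
            size = len(json.dumps(value).encode("utf-8"))
            if size > max_field_bytes:
                raise ValueError(f"field {key!r} is {size} bytes; limit is {max_field_bytes}")
        lines.append(json.dumps(record) + "\n")
    return gzip.compress("".join(lines).encode("utf-8"))


print(objects_per_hour(0.1, 128, 900))  # 4.0, the interval is reached first
print(objects_per_hour(0.1, 128, 60))   # 60.0
print(objects_per_hour(4, 128, 900))    # 112.5, the 128 MiB buffer fills every 32 s
```

At a steady 0.1 MiB/s, a 900-second interval yields about 4 objects per hour with roughly 90 MiB of incoming data each, while a 60-second interval yields about 60 much smaller objects and more PUT operations.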

Get AWS configurations into Observe

Configure all of your AWS accounts to deliver their AWS Config configuration snapshots and configuration history files to a single S3 bucket in the Observability account.

Perform the following tasks to set this up:

Enable AWS Config in each account and region, with a delivery channel that points to aws-config-central-<org> through a cross-account bucket policy. Make sure both the bucket policy and the role trust in the source accounts are set; it’s common to configure one and forget the other.

In the Observability account, deploy Add Data for AWS (CloudFormation or Terraform) to pick up the data from that bucket and send it to Filedrop.
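The bucket side of this cross-account setup can be sketched in Python. The policy is modeled on the one the AWS Config documentation describes for delivery to a bucket through the config.amazonaws.com service principal, with AWS:SourceAccount limiting which accounts can write. The bucket name, account IDs, and key prefix are hypothetical; verify the statements against current AWS documentation, and if your delivery channel uses a custom IAM role instead of the service-linked role, you would likely need to grant that role. The source-account side still has to be configured separately.

```python
import json
from typing import Optional, Union


def config_bucket_policy(bucket: str, source_account_ids: list[str], key_prefix: str = "") -> dict:
    """Bucket policy letting AWS Config in each source account deliver to a central bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    accounts = sorted(set(source_account_ids))
    prefix = key_prefix.strip("/")
    prefix = f"{prefix}/" if prefix else ""

    def statement(
        sid: str, action: str, resource: Union[str, list[str]], extra: Optional[dict] = None
    ) -> dict:
        conditions = {"AWS:SourceAccount": accounts}  # a list matches any listed account
        conditions.update(extra or {})
        return {
            "Sid": sid,
            "Effect": "Allow",
            "Principal": {"Service": "config.amazonaws.com"},
            "Action": action,
            "Resource": resource,
            "Condition": {"StringEquals": conditions},
        }

    return {
        "Version": "2012-10-17",
        "Statement": [
            statement("AWSConfigBucketPermissionsCheck", "s3:GetBucketAcl", bucket_arn),
            statement("AWSConfigBucketExistenceCheck", "s3:ListBucket", bucket_arn),
            statement(
                "AWSConfigBucketDelivery",
                "s3:PutObject",
                # AWS Config writes under AWSLogs/<account-id>/Config/ below the optional prefix.
                [f"{bucket_arn}/{prefix}AWSLogs/{acct}/Config/*" for acct in accounts],
                {"s3:x-amz-acl": "bucket-owner-full-control"},
            ),
        ],
    }


# Hypothetical bucket and account IDs.
policy = config_bucket_policy("aws-config-central-example", ["111111111111", "222222222222"])
print(json.dumps(policy, indent=2))
```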

Get CloudWatch metrics into Observe

Configure a lightweight metrics poller in each AWS account to read the CloudWatch metrics and ship the data to Observe.

Perform the following tasks to set this up:

With Terraform, deploy the poller module once per account, using a least-privilege IAM role with read-only CloudWatch permissions.

Scope namespaces, such as AWS/EC2, AWS/ELB, AWS/Lambda, or custom namespaces, to control cost and volume. Be careful with namespaces that have many dimensions, because they can cause costs to spike quickly.

Centralize configuration through Terraform variables, and tag resources, for example Owner=Observability and DataClass=Metrics.
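A Python sketch of the least-privilege pieces: an assumed minimal read-only IAM policy for the poller role, the namespace list and tags from the steps above, and an upper-bound estimate of how many metric series a namespace can produce. The action list is an assumption, so check the Observe poller documentation for the exact permissions it needs. The estimate shows why dimension-heavy namespaces get expensive: every extra dimension multiplies the series count.

```python
from math import prod

# Scope and tags taken from the steps above; adjust to your own needs.
SCOPED_NAMESPACES = ["AWS/EC2", "AWS/ELB", "AWS/Lambda"]
POLLER_TAGS = {"Owner": "Observability", "DataClass": "Metrics"}


def read_only_cloudwatch_policy() -> dict:
    """Assumed minimal read-only IAM policy for a metrics poller role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadCloudWatchMetrics",
                "Effect": "Allow",
                "Action": ["cloudwatch:GetMetricData", "cloudwatch:ListMetrics"],
                # Namespace scoping happens in the poller configuration, not here.
                "Resource": "*",
            }
        ],
    }


def estimate_series(metric_count: int, dimension_value_counts: list[int]) -> int:
    """Upper bound: one series per metric per combination of dimension values."""
    return metric_count * prod(dimension_value_counts)


# 10 metrics across 200 instances, then the same metrics split by a 50-value dimension.
print(estimate_series(10, [200]))      # 2000
print(estimate_series(10, [200, 50]))  # 100000
```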

Can I use Control Tower?

This section contains additional information and context to help you decide whether to use Control Tower to set up a central Observability account.

How does Control Tower fit into this picture?

Control Tower sets up a multi-account AWS organization with guardrails, centralized governance, and predefined “landing zone” accounts. Since Control Tower already creates one central account for storing logs and config, it is possible to extend the Control Tower Log Archive account to also serve as the central Observability account.

Even if you have Control Tower, you may still want to create a separate dedicated Observability account in the following cases:

You don’t want to overload the Log Archive account with additional ingestion pipelines.

You want to clearly separate compliance logs (retention-focused) vs. observability telemetry (operational, shorter retention, more frequent query).

In either case, the central S3 buckets for CloudWatch Logs exports and AWS Config snapshots reside in one chosen account, and cross-account delivery is handled by bucket policies and roles.

Does Control Tower include all CloudWatch logs from all AWS accounts?

The Control Tower Log Archive account is not a full CloudWatch logs aggregator.

The Log Archive account always collects CloudTrail logs and, optionally, AWS Config data and VPC Flow Logs, but it does not automatically include all CloudWatch Logs from member accounts. If you want those, you need to set up subscriptions or export pipelines yourself.

What Control Tower Log Archive does by default

When you set up Control Tower, a Log Archive account is created and Organization-level CloudTrail is configured.

All member accounts in the organization send their CloudTrail logs (and optionally VPC Flow Logs, Config snapshots, etc.) into the central S3 bucket in the Log Archive account.

This setup focuses on compliance and governance logs (security and audit trail).

What Control Tower doesn’t include by default

Control Tower does not automatically centralize all CloudWatch Logs log groups, such as Lambda logs, ECS logs, and application logs.

To get those into the Log Archive account, you must configure either of the following:

Log subscriptions, such as CloudWatch Logs → Kinesis Firehose → central S3 in Log Archive.

Centralized logging solutions, such as the AWS Centralized Logging solution, or custom Terraform or CloudFormation.

Control Tower doesn’t know which CloudWatch log groups you care about, so you have to set up forwarding yourself.
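If you build this forwarding yourself, the first decision is which log groups to subscribe. The following Python sketch filters log groups by prefix and builds one subscription-filter request per selected group, pointing at a Kinesis Firehose delivery stream. The prefixes, filter name, log group names, and ARNs are hypothetical.

```python
def subscription_filter_requests(
    log_group_names: list[str],
    include_prefixes: list[str],
    firehose_arn: str,
    role_arn: str,
    filter_name: str = "to-central-s3",
) -> list[dict]:
    """Build one subscription-filter request per log group selected by prefix."""
    result = []
    for name in sorted(log_group_names):
        if not any(name.startswith(prefix) for prefix in include_prefixes):
            continue  # not selected for forwarding
        result.append(
            {
                "logGroupName": name,
                "filterName": filter_name,
                "filterPattern": "",  # empty pattern matches every log event
                "destinationArn": firehose_arn,
                "roleArn": role_arn,  # role that lets CloudWatch Logs write to Firehose
            }
        )
    return result


# Hypothetical log groups and ARNs in one member account.
groups = ["/aws/lambda/orders", "/aws/rds/instance/db1/error", "/ecs/web"]
filter_requests = subscription_filter_requests(
    groups,
    include_prefixes=["/aws/lambda/", "/ecs/"],
    firehose_arn="arn:aws:firehose:us-east-1:111111111111:deliverystream/cwl-to-central-s3",
    role_arn="arn:aws:iam::111111111111:role/cwl-to-firehose",
)
print([r["logGroupName"] for r in filter_requests])  # ['/aws/lambda/orders', '/ecs/web']
```

Each request uses the parameter names of the CloudWatch Logs PutSubscriptionFilter API, so with boto3 it could be applied as client.put_subscription_filter(**request). An empty filter pattern forwards every event; a narrower pattern reduces volume.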

How AWS Config fits

Control Tower can enable AWS Config in governed accounts and deliver Config snapshots and compliance records into the Log Archive account’s S3 bucket.

This is separate from CloudWatch Logs: only Config data is included.

Pros and cons to consider

This section compares using a Control Tower Log Archive account versus creating a dedicated Observability account for centralizing logs, metrics, and config.

Control Tower Log Archive account

Review the following considerations for using the Control Tower Log Archive account:

Pros

Out of the box: Created automatically with the Control Tower landing zone. Already has a baseline setup for CloudTrail and AWS Config delivery.

Governance-aligned: Fits neatly into AWS Organizations structure; integrates with Service Control Policies (SCPs) and guardrails.

Cost efficiency: One less account to manage; no extra billing line item.

Security model already in place: Enforced least-privilege, encryption, and lifecycle policies aligned with compliance needs.

Single pane for compliance data: Audit/security teams can find CloudTrail and configs in one place.

Cons

Scope mismatch: Designed for compliance/audit logs, not necessarily high-volume app telemetry.

Retention policies: Typically long (7+ years for audit); may conflict with operational observability needs where 30–90 days is common.

Ownership conflict: Often governed by compliance/security teams rather than platform/observability teams, which creates friction around change requests.

Cost tracking: Mixing compliance and observability data in the same S3 buckets can blur cost attribution.

Dedicated Observability account

Review the following considerations for creating your own dedicated Observability account:

Pros

Tailored for telemetry: You can optimize retention, compression, partitioning, and ingestion frequency for ops data (different from compliance requirements).

Operational autonomy: Owned by platform/SRE/DevOps teams; changes (Firehose configs, Filedrop integration) don’t require sign-off from audit.

Cost isolation: Easy to attribute ingest/storage costs for observability separately from compliance.

Flexibility: Can host additional observability tooling (metrics pollers, log processing Lambdas, dashboards) without interfering with governance controls.

Future-proof: If you expand to multiple observability vendors or run custom pipelines, the account is already dedicated.

Cons

Extra account overhead: Another AWS account to provision, secure, and manage.

Duplication risk: You may end up storing the same data twice. For example, Config snapshots can be stored in both the Log Archive and Observability accounts.

Integration effort: Need to configure cross-account delivery and policies from scratch, whereas such policies for Log Archive are already wired in by Control Tower.

Silo danger: If governance teams aren’t looped in, you may create fragmented views of org-wide logs.

Recommendations

Review the following recommendations based on your organization’s size and priorities:

You are a small and/or compliance-driven org. If you’re OK with compliance retention rules and shared ownership, stick with Control Tower’s Log Archive account and extend it slightly by adding S3 buckets and Firehose targets for CloudWatch logs and config.

You are a larger and/or platform-driven org. Stand up a dedicated Observability account for telemetry pipelines for logs, metrics, and config, while still letting Control Tower’s Log Archive account focus on compliance. This separation avoids operational vs. compliance conflicts.

You’re a little of both:

Keep CloudTrail and long-term config snapshots in Control Tower’s Log Archive.

Ship CloudWatch logs and shorter retention, higher volume metrics into an Observability account.