Troubleshoot slow databases / n+1 issues

Here’s how you can use Observe APM to detect n+1 issues and other types of database slowness in your distributed service.

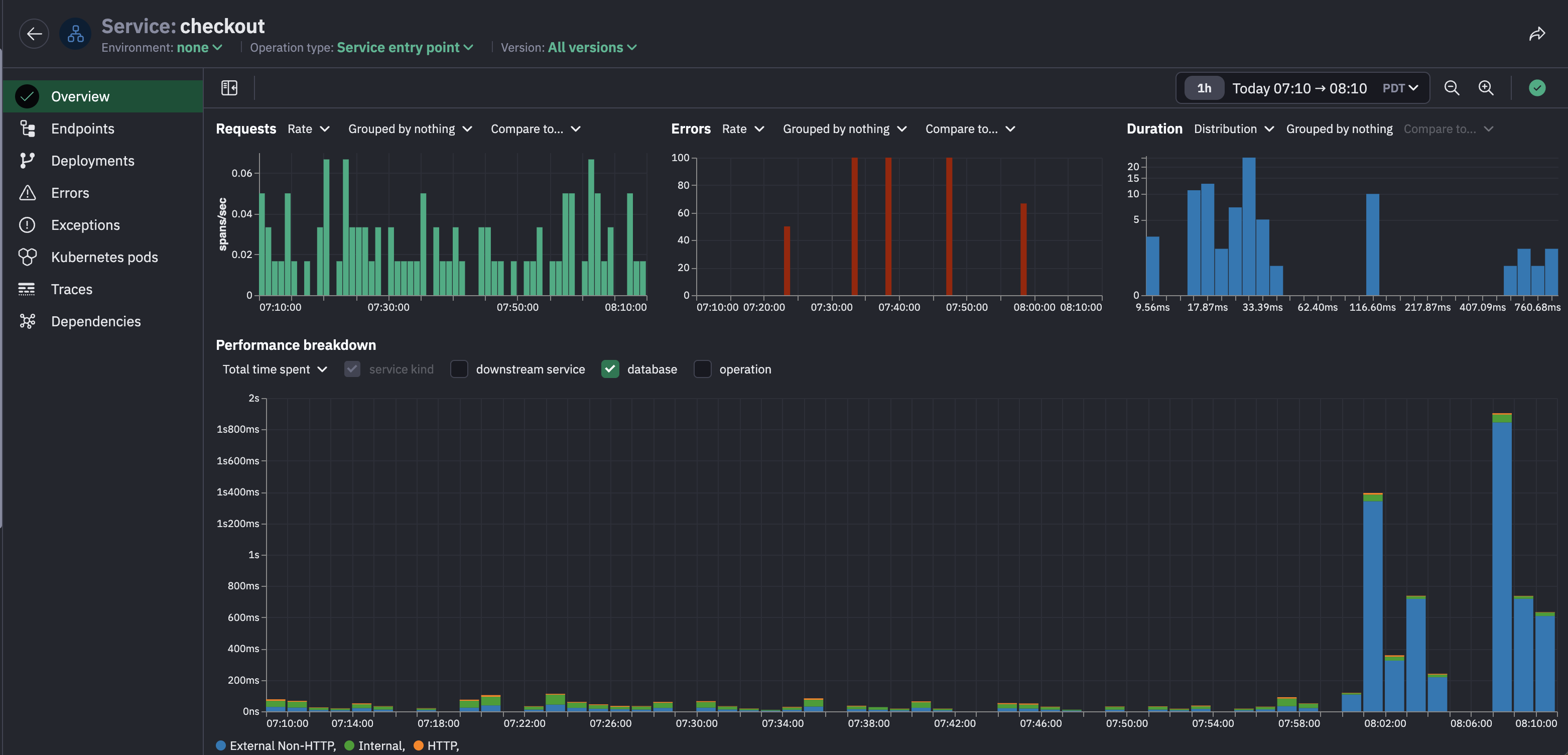

Let’s say you’re an on-call developer, DevOps engineer, or SRE responsible for an e-commerce site, and you’ve received a report of abnormal latency in the checkout service. Inspect the checkout service in Observe APM: the latency distribution shows some outliers, and the performance breakdown chart shows a major latency spike that started a few minutes ago.

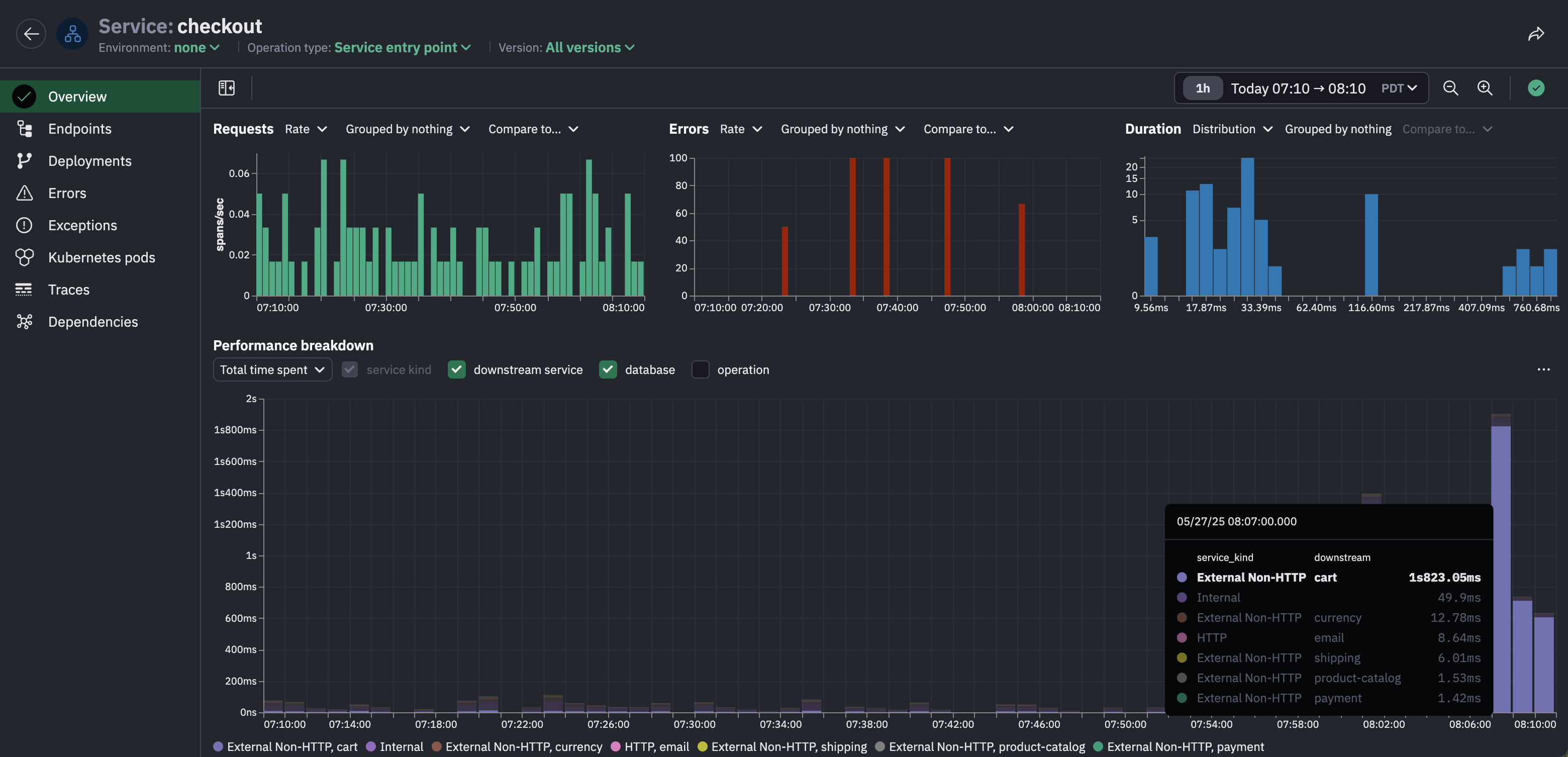

View the performance breakdown by downstream service to see that most of the time spent in the checkout service is actually waiting for the cart service to return a response.

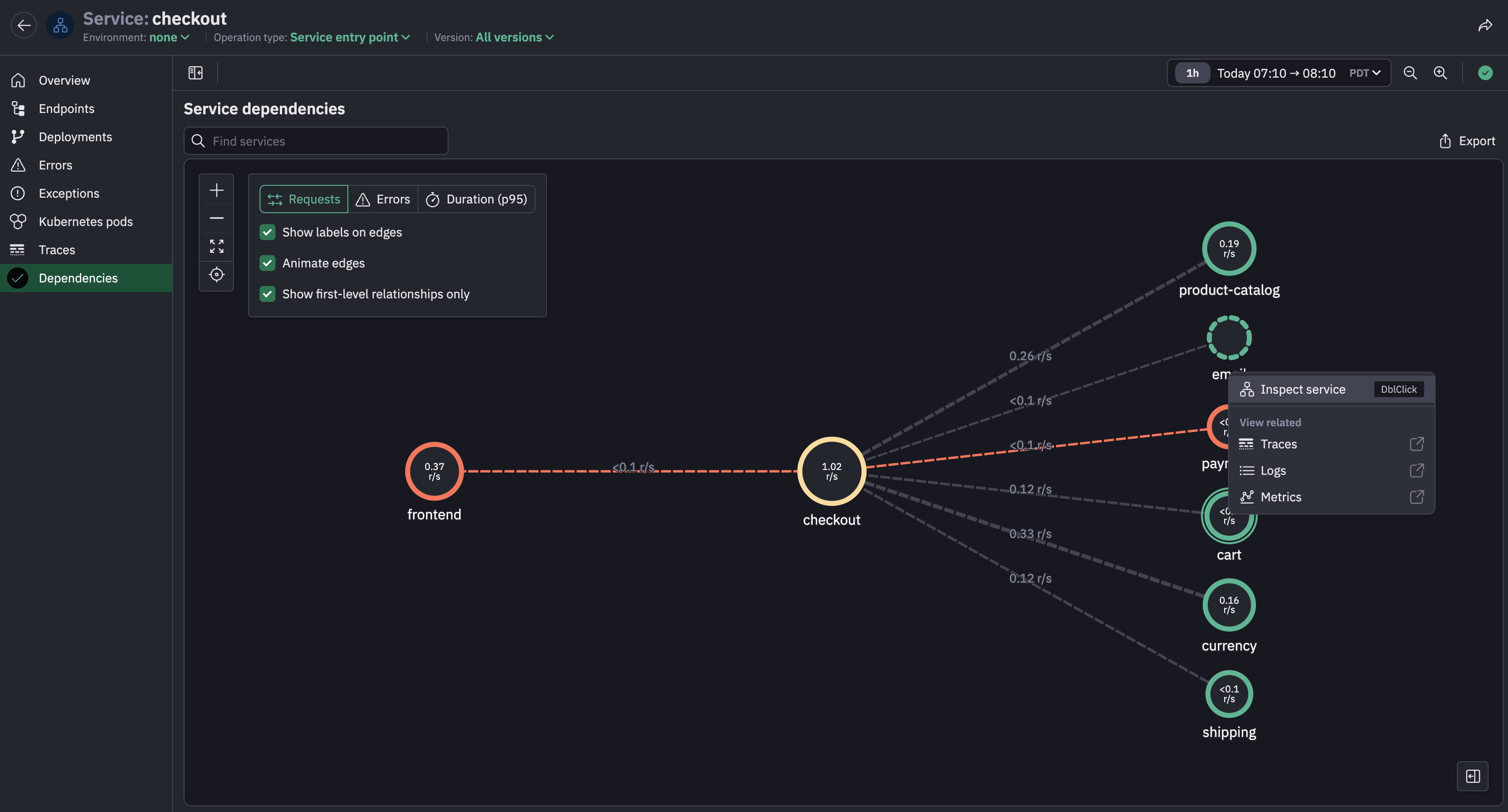

Navigate to the downstream cart service from the service map for the checkout service.

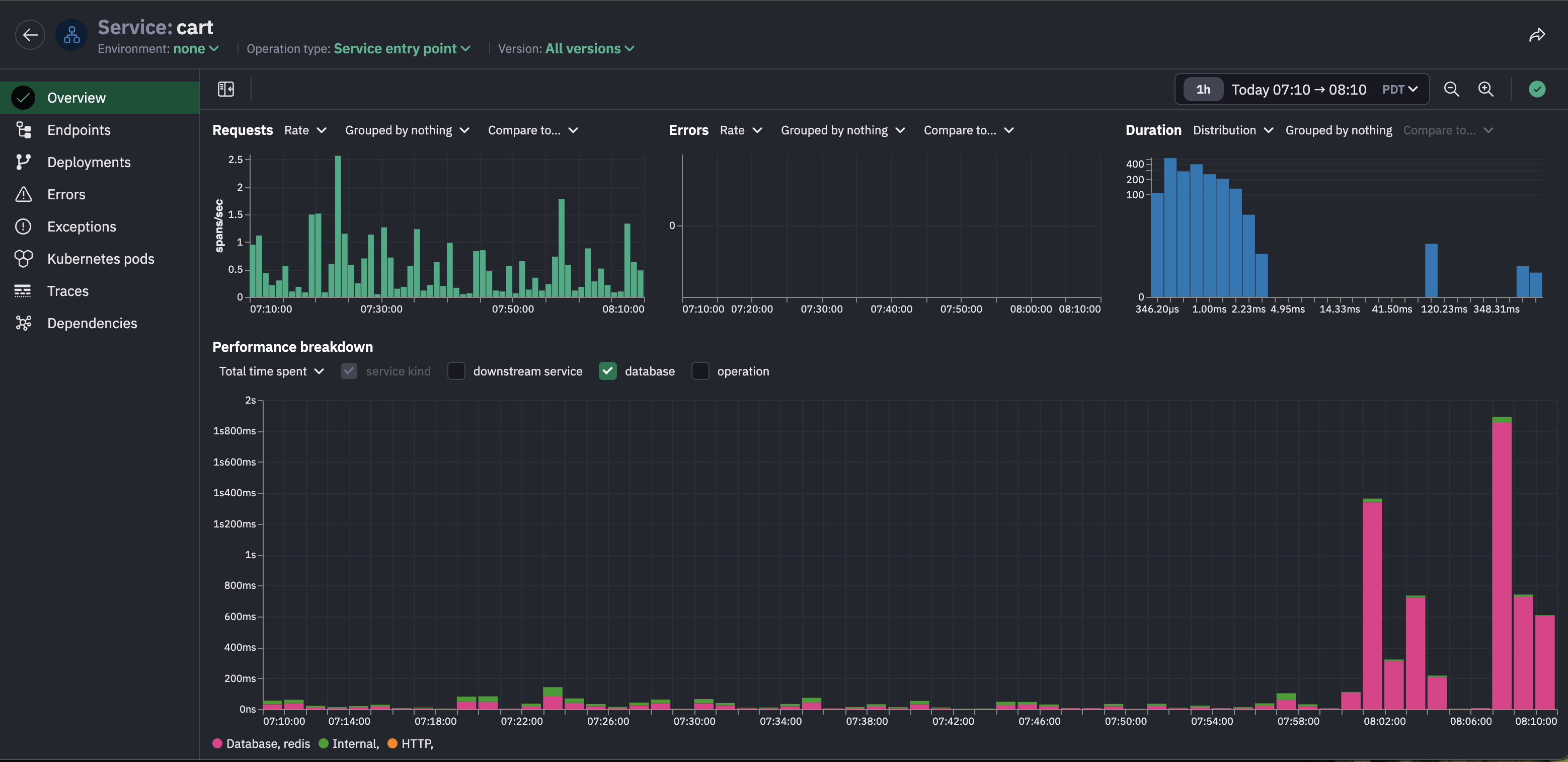

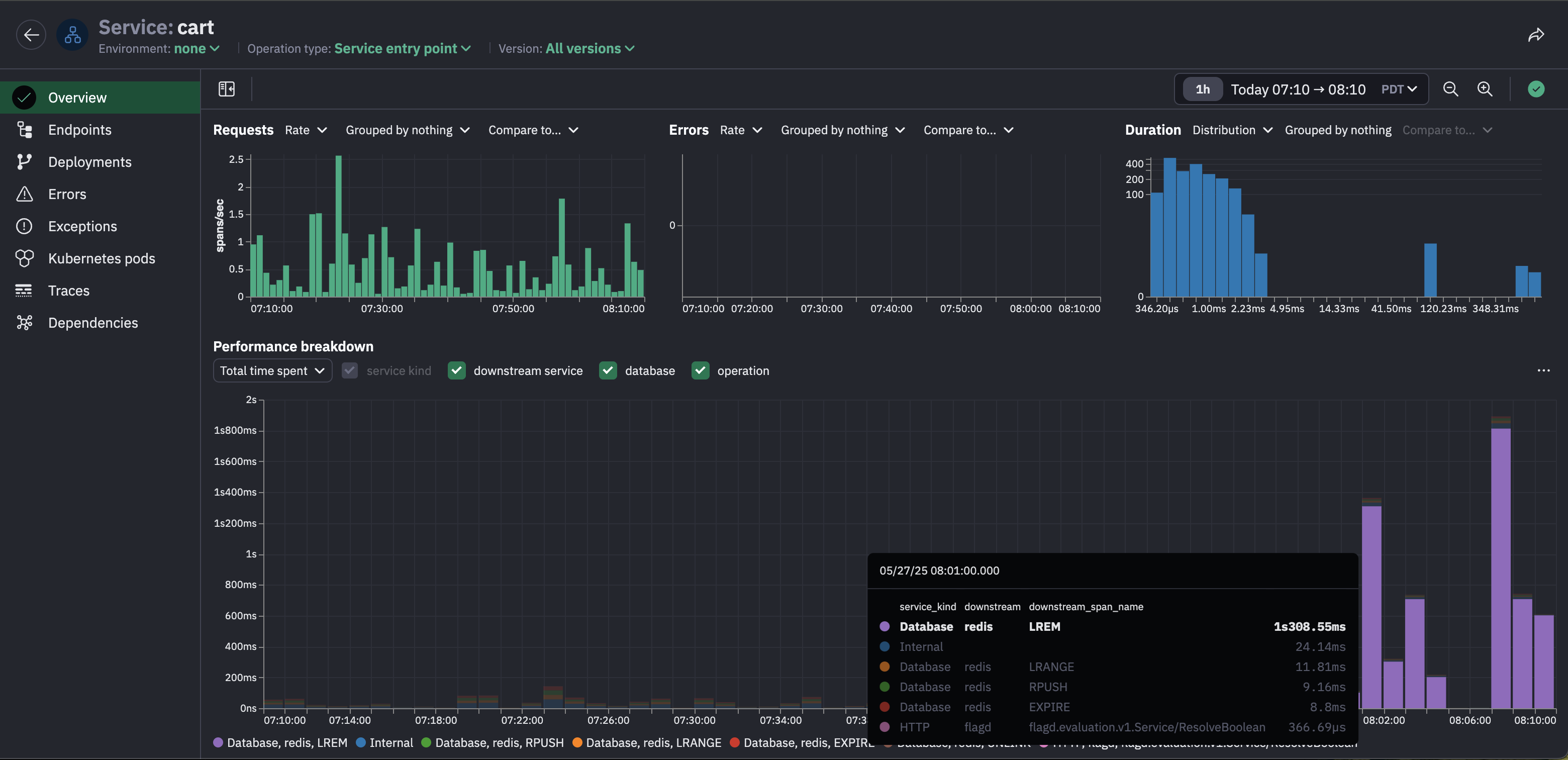

The cart service has also had a latency spike in the last few minutes.

View the performance breakdown by operation to see that most of the time in the cart service is spent on LREM operations in Redis.

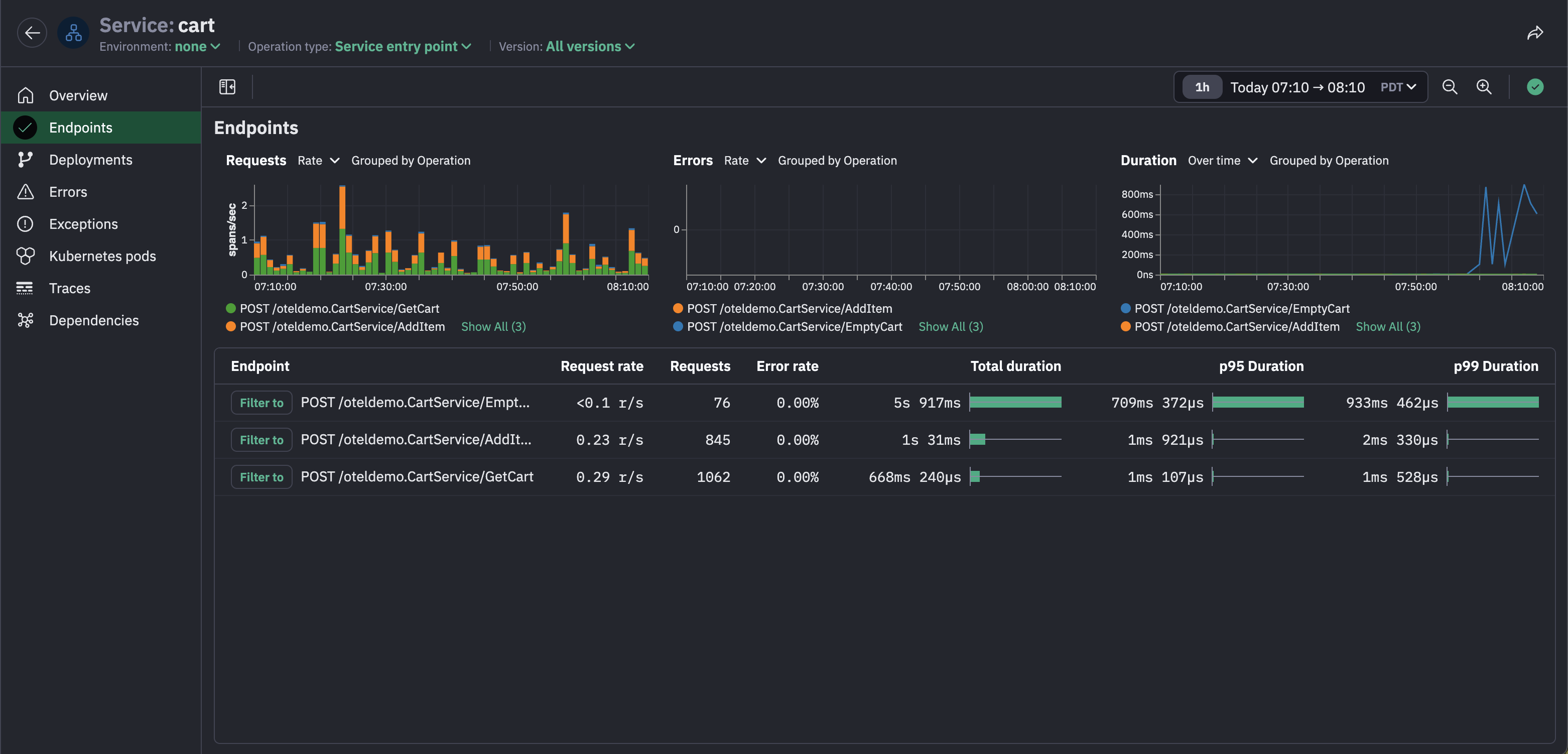

Narrow down the degradation further to a single endpoint, namely the `EmptyCart` endpoint in the cart service, which had a spike in latency in the last few minutes, unlike the other two endpoints in the cart service whose latency remains stable.

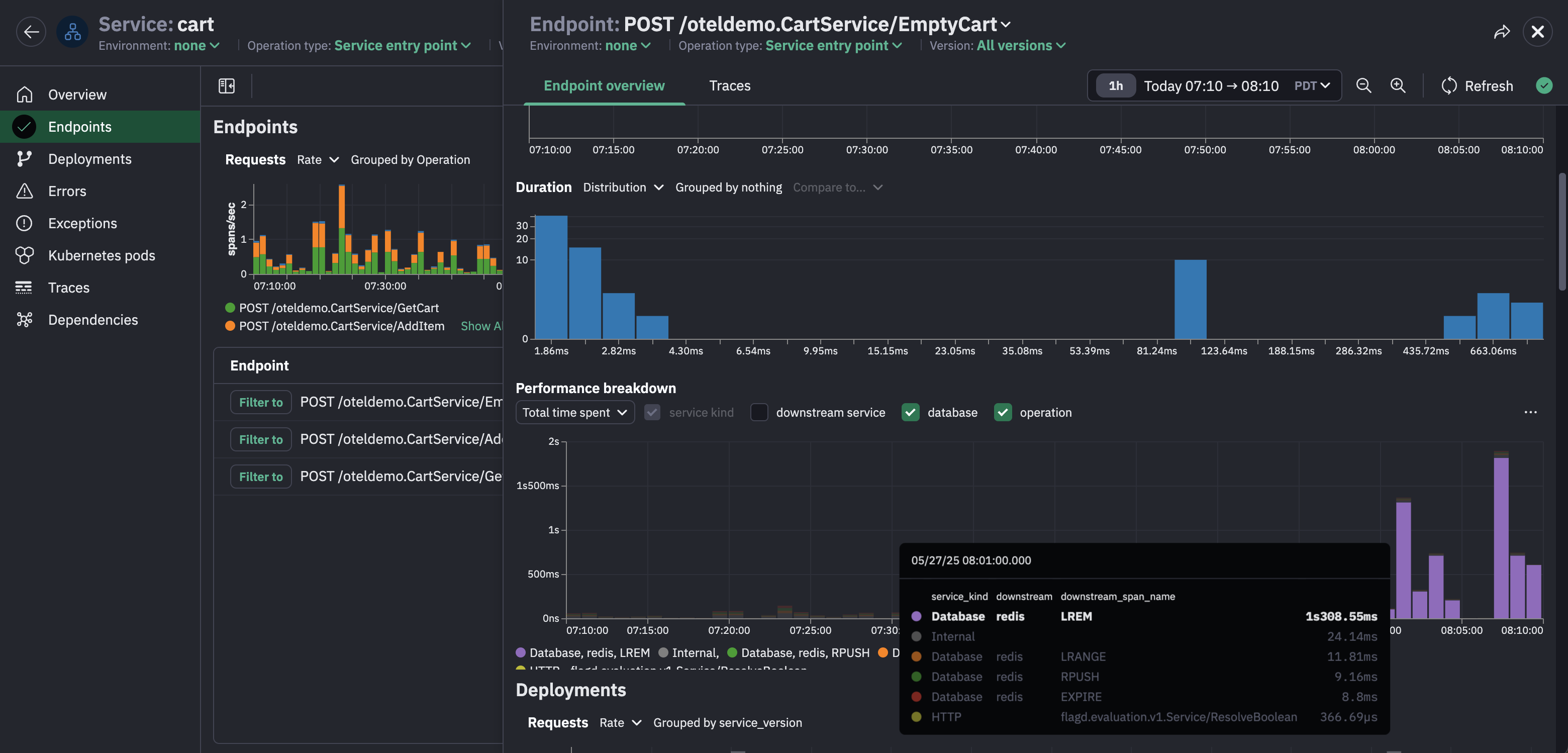

The performance breakdown of the `EmptyCart` endpoint reveals that the latency spike can be explained by LREM operations in Redis, which means we've narrowed down the issue to just this endpoint.

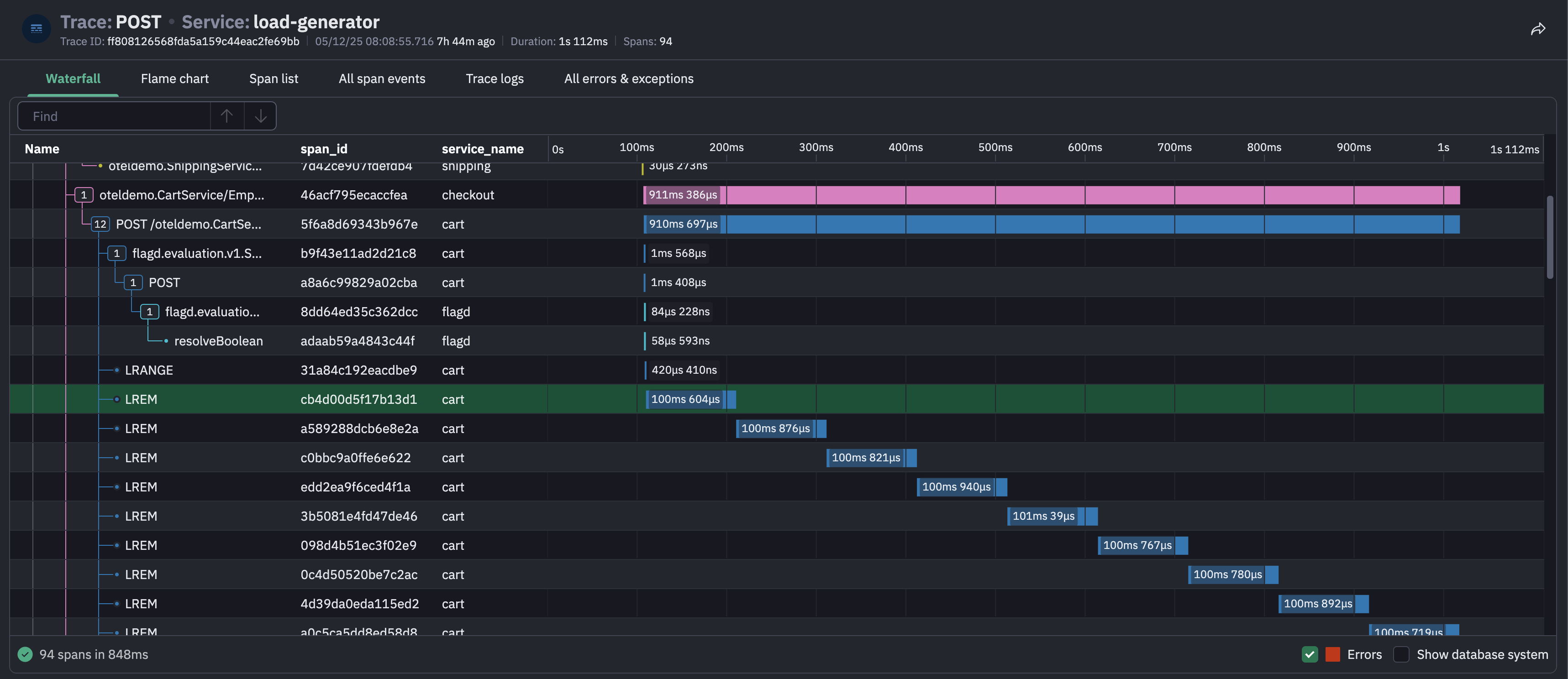

It's time to look at slow traces for the `EmptyCart` endpoint. The traces tab in the endpoint inspector is automatically filtered to the slowest traces.

The waterfall for one of these traces shows a lot of LREM calls, a telltale sign of an n+1 issue.
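The same pattern that stands out in the waterfall can also be checked programmatically. The sketch below is a rough heuristic, not part of Observe APM: it assumes spans are available as plain dictionaries with hypothetical `span_id`, `parent_id`, and `operation` fields, and flags any operation that repeats many times under a single parent span.

```python
from collections import Counter


def find_repeated_operations(spans, threshold=5):
    """Return {(parent_id, operation): count} for operations that occur
    at least `threshold` times under the same parent span."""
    counts = Counter((s["parent_id"], s["operation"]) for s in spans)
    return {key: n for key, n in counts.items() if n >= threshold}


# Hypothetical trace: one EmptyCart request that reads the cart once,
# then issues one LREM per cart item.
spans = [
    {"span_id": "root", "parent_id": None, "operation": "EmptyCart"},
    {"span_id": "read", "parent_id": "root", "operation": "LRANGE"},
]
spans += [
    {"span_id": f"lrem-{i}", "parent_id": "root", "operation": "LREM"}
    for i in range(20)
]

suspects = find_repeated_operations(spans)
print(suspects)
```

The threshold of 5 is arbitrary. A repeated operation is only a hint, so the flagged spans still need to be inspected to confirm that they could be batched.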

Inspect the attributes on these LREM calls to see the specific queries being issued.

Now we have enough information to conclude what caused the latency spike in the checkout service: when users empty their cart, the frontend calls the checkout service, which calls the `EmptyCart` endpoint in the cart service, which runs a database query for each individual cart item, a classic n+1 scenario. The fix is simple: update the logic in this endpoint so it removes all the cart items in a single query.
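To make the difference concrete, here is a minimal sketch of the two approaches. It assumes the cart is stored as a Redis list under a hypothetical key such as `cart:user-1`; the real cart service's data model and code are not shown in this guide. `CountingListStore` is an in-memory stand-in written for this example (not a real Redis client) that counts how many commands each approach issues. Its method names mirror common Redis list commands, and deleting the whole key is one possible way to remove all items in a single call.

```python
class CountingListStore:
    """In-memory stand-in for a Redis client; counts commands issued."""

    def __init__(self):
        self.lists = {}
        self.commands = 0

    def rpush(self, key, *values):
        self.commands += 1
        self.lists.setdefault(key, []).extend(values)
        return len(self.lists[key])

    def lrange(self, key, start, stop):
        self.commands += 1
        items = self.lists.get(key, [])
        # Redis treats `stop` as inclusive; only -1 ("to the end") and
        # non-negative values are supported in this simplified version.
        return list(items[start:]) if stop == -1 else list(items[start:stop + 1])

    def lrem(self, key, count, value):
        self.commands += 1
        # Simplified: behaves like count == 0 (remove every occurrence).
        items = self.lists.get(key, [])
        kept = [v for v in items if v != value]
        if kept:
            self.lists[key] = kept
        else:
            self.lists.pop(key, None)  # empty lists disappear, as in Redis
        return len(items) - len(kept)

    def delete(self, key):
        self.commands += 1
        return 1 if self.lists.pop(key, None) is not None else 0


CART_KEY = "cart:user-1"  # hypothetical key naming scheme


def empty_cart_n_plus_one(store, key):
    """One read, then one LREM per item: n+1 round trips."""
    for item in store.lrange(key, 0, -1):
        store.lrem(key, 0, item)


def empty_cart_single_call(store, key):
    """Drop the whole list in one command."""
    store.delete(key)


def seeded_store(n):
    """Create a store holding a cart of n items, with the counter reset."""
    store = CountingListStore()
    store.rpush(CART_KEY, *[f"item-{i}" for i in range(n)])
    store.commands = 0
    return store


slow = seeded_store(50)
empty_cart_n_plus_one(slow, CART_KEY)

fast = seeded_store(50)
empty_cart_single_call(fast, CART_KEY)

print(slow.commands, fast.commands)
```

With 50 items in the cart, the first version issues 51 commands and the second issues 1. Against a real Redis server each command is a network round trip, which is why the per-item version slows down as carts grow.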