Welcome to Observe

This page will help you get started with Observe. You’ll be up and running in a jiffy!

What is Observe?

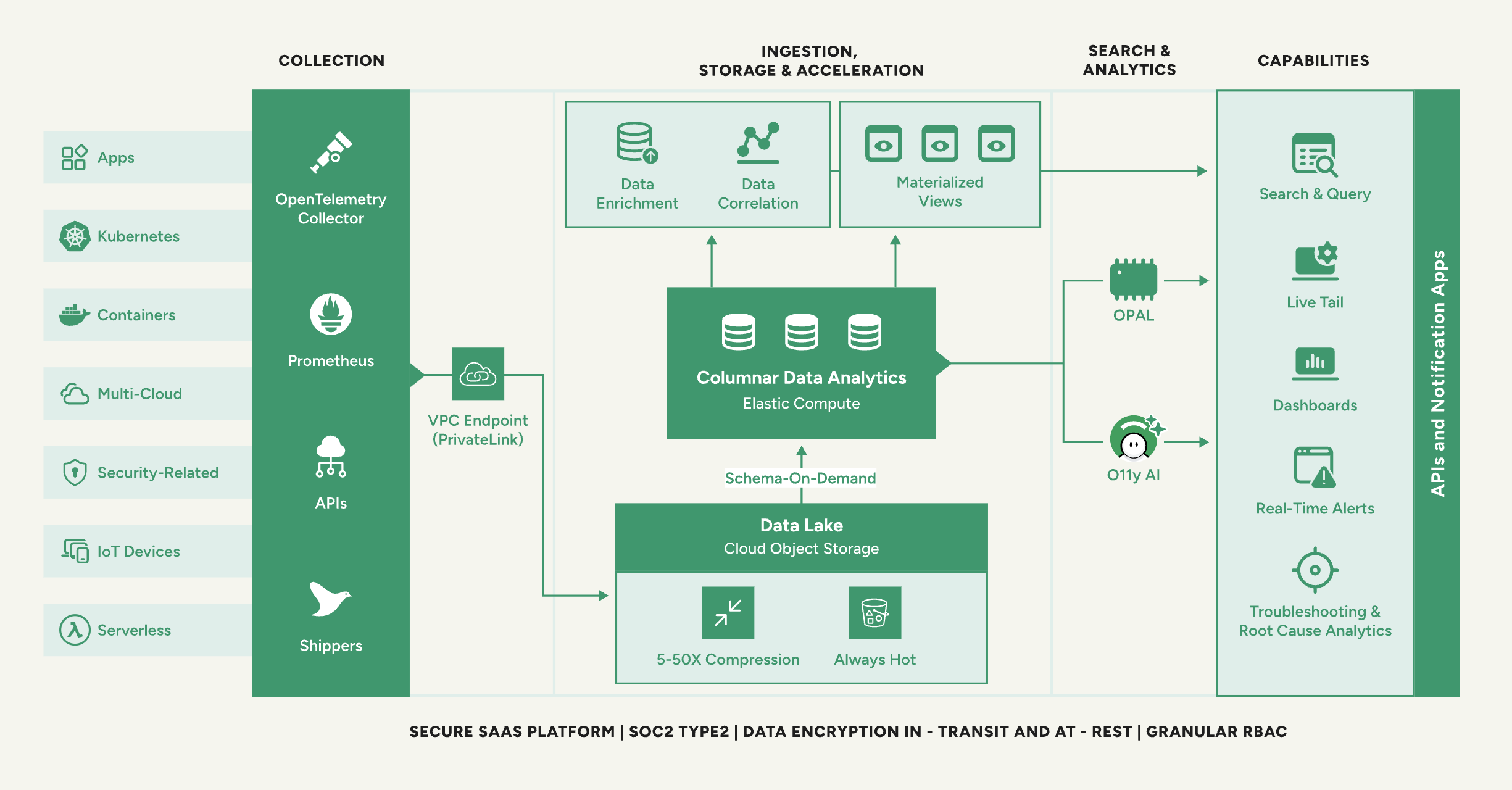

Observe delivers AI-powered observability at scale, enabling engineering teams to ingest hundreds of terabytes daily and troubleshoot 3x faster at 30% of the cost.

How does it work?

To use Observe, you must collect data from your infrastructure, send the data to Observe, and then use Observe to analyze it:

Install the Observe Agent to collect the data.

Get the data into Observe.

Use the search and analytics capabilities of Observe to create dashboards and configure Monitors.

Use your dashboards and Monitors to investigate and troubleshoot issues.

What does it do?

Observe provides the following visibility into your application and infrastructure data:

Log management

Set up log management

Ingest your structured, semi-structured, or unstructured logs from any source. Explore your log data using Query Builder or OPAL, a feature-rich query language for exploring and transforming data. Use O11y Copilot to generate OPAL.

Troubleshoot issues

Use Live Mode to detect and troubleshoot issues in real time. Correlate logs from any source using correlation tags and pre-built relationships. Pivot to logs from dashboards or Trace Explorer for faster root cause analysis and troubleshooting.

Log-derived metrics

Derive custom metrics based on specific log attributes, patterns, or content that are relevant to your business objectives. Extract any string from the log message using the point-and-click UI and O11y AI Regex for faster analysis.
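To make the idea of a log-derived metric concrete, here is a minimal Python sketch that extracts fields from log lines with a regular expression and aggregates them into per-service metrics. It only illustrates the concept. It is not OPAL and does not use any Observe API, and the log format, field names, and the `derive_metrics` helper are all assumptions made for this example.

```python
import re
from collections import Counter

# Hypothetical log lines; the format is an assumption made for this sketch.
LOG_LINES = [
    "2024-05-01T12:00:01Z INFO checkout order=1001 status=200 latency_ms=120",
    "2024-05-01T12:00:02Z ERROR checkout order=1002 status=500 latency_ms=950",
    "2024-05-01T12:00:03Z INFO search query=shoes status=200 latency_ms=45",
    "2024-05-01T12:00:04Z ERROR checkout order=1003 status=503 latency_ms=1200",
]

# Capture the service name, HTTP status, and latency from each line.
PATTERN = re.compile(
    r"^\S+ (?P<level>\w+) (?P<service>\w+) "
    r".*status=(?P<status>\d{3}) latency_ms=(?P<latency>\d+)$"
)


def derive_metrics(lines):
    """Return (5xx error count per service, mean latency in ms per service)."""
    errors = Counter()
    latencies = {}
    for line in lines:
        match = PATTERN.match(line)
        if match is None:
            continue  # skip lines that do not fit the assumed format
        service = match["service"]
        latencies.setdefault(service, []).append(int(match["latency"]))
        if int(match["status"]) >= 500:
            errors[service] += 1
    mean_latency = {svc: sum(vals) / len(vals) for svc, vals in latencies.items()}
    return errors, mean_latency


errors, mean_latency = derive_metrics(LOG_LINES)
for service in sorted(mean_latency):
    print(service, errors[service], round(mean_latency[service], 1))
```

In this sketch the regular expression plays the role that point-and-click extraction plays in the product: it turns free-form text into named fields, which can then be counted or averaged like any other metric.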

Compliance

Achieve compliance readiness by keeping logs for as long as needed. Access historical data on demand to satisfy compliance requirements.

Application performance monitoring (APM)

OpenTelemetry native

Adopt open-standards-based instrumentation and collection of telemetry data with OpenTelemetry. Automatically instrument your application code using OTel libraries, or use OTel SDKs and APIs to instrument code manually.

Service discovery and mapping

Automatically discover all microservices and databases from OpenTelemetry data. Visualize your entire system architecture in one place. Give every developer access to Golden Signals monitoring without per-seat pricing that forces you to limit tool access to just a few experts.

Trace and search inspection

Analyze every slow transaction with 13 months of unsampled trace data. Pivot seamlessly from traces to logs, metrics, and infrastructure data. Drill down from service-level alerts to individual traces and correlated logs in seconds.

Deployment tracking

Instantly correlate deployment markers with performance changes to quickly identify whether new releases introduced regressions. Compare RED metrics across concurrent deployment versions. Automatically surface new error types introduced by deployments so your entire engineering team can identify issues without relying on expert troubleshooters.
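As an illustration of comparing RED metrics (rate, errors, duration) across concurrent deployment versions, the following Python sketch groups request records by version and computes the three values for each. The record shape, the version labels, the 60-second window, and the `red_by_version` helper are hypothetical and chosen only for this example. This is not how Observe computes these metrics internally.

```python
from collections import defaultdict

# Hypothetical request records: (deployment version, duration in ms, succeeded?)
REQUESTS = [
    ("v1.4.0", 110, True),
    ("v1.4.0", 130, True),
    ("v1.4.0", 120, False),
    ("v1.4.0", 140, True),
    ("v1.5.0", 210, True),
    ("v1.5.0", 250, False),
    ("v1.5.0", 230, False),
    ("v1.5.0", 190, True),
]
WINDOW_SECONDS = 60  # assumed observation window for the sample above


def red_by_version(requests, window_seconds):
    """Compute rate (req/s), error ratio, and mean duration (ms) per version."""
    grouped = defaultdict(list)
    for version, duration_ms, ok in requests:
        grouped[version].append((duration_ms, ok))

    summary = {}
    for version, rows in grouped.items():
        count = len(rows)
        failures = sum(1 for _, ok in rows if not ok)
        summary[version] = {
            "rate": count / window_seconds,
            "error_ratio": failures / count,
            "mean_duration_ms": sum(d for d, _ in rows) / count,
        }
    return summary


summary = red_by_version(REQUESTS, WINDOW_SECONDS)
for version in sorted(summary):
    print(version, summary[version])
```

Placing the per-version summaries side by side makes a regression visible: in this sample data, the newer version has both a higher error ratio and a higher mean duration than the older one.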

Infrastructure monitoring

Any stack and scale

Easily get data in with 400+ pre-built integrations for infrastructure and popular technologies across cloud services, containers, Kubernetes, serverless functions, and more. Get out-of-the-box visualizations and contextual alerts for the health and performance of infrastructure services. Get historical performance data even for ephemeral infrastructure such as containers or pods.

High cardinality analytics

Get granular insights using tags and labels. Easily extract key metrics from logs using the point-and-click UI. Never pay extra for log-derived metrics and control the spiraling costs of high-cardinality analytics.

Contextual pivots

Understand the interdependencies of complex cloud-native infrastructure and applications with automatically created correlations. Pivot to contextual logs from infrastructure dashboards to troubleshoot and resolve performance incidents. Get actionable, in-context alerts to quickly detect and resolve issues as they arise.

Prometheus ready

Outgrowing Prometheus? Eliminate the complexity of maintaining and scaling Prometheus with an elastically scalable, cost-efficient observability solution. Use Observe’s native support for Prometheus through the remote-write protocol. Stream Prometheus metrics in real time to reduce MTTD and MTTR. Send metrics from multiple Prometheus instances to Observe for aggregation and analysis.

LLM observability

AI application tracking

Trace multi-step agentic workflows from user prompt to final response without sampling that could miss critical failure points in complex AI reasoning chains. Connect AI application performance to underlying infrastructure and services in one platform, eliminating manual correlation across separate monitoring tools. Retain complete LLM trace data with long-term storage so your entire team can investigate issues retrospectively without access restrictions.

Cost and token analytics

Monitor API spend across all LLM providers in real time to prevent budget overruns and identify cost spikes as they happen. Analyze input and output token consumption by model, provider, and application feature to identify optimization opportunities and usage patterns. Compare token costs and performance across different LLM providers with detailed metrics to make informed decisions about model selection and routing.
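The kind of token cost breakdown described above can be sketched in a few lines of Python. Everything in this example is hypothetical: the model names, the per-token prices, the usage records, and the `cost_by` helper are placeholders for illustration, not real provider pricing or an Observe API.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens; real provider pricing differs.
PRICES_PER_1K = {
    "model-a": {"input": 0.50, "output": 1.50},
    "model-b": {"input": 0.10, "output": 0.40},
}

# Hypothetical usage records:
# (model, application feature, input tokens, output tokens)
CALLS = [
    ("model-a", "chat", 2000, 1000),
    ("model-a", "summarize", 4000, 500),
    ("model-b", "chat", 10000, 5000),
]

MODEL, FEATURE = 0, 1  # positions of the grouping keys in each record


def cost_by(calls, prices, key_index):
    """Sum the estimated cost in USD, grouped by the field at key_index."""
    totals = defaultdict(float)
    for call in calls:
        model, _feature, tokens_in, tokens_out = call
        price = prices[model]
        cost = (
            tokens_in / 1000 * price["input"]
            + tokens_out / 1000 * price["output"]
        )
        totals[call[key_index]] += cost
    return dict(totals)


cost_per_model = cost_by(CALLS, PRICES_PER_1K, MODEL)
cost_per_feature = cost_by(CALLS, PRICES_PER_1K, FEATURE)
print(cost_per_model)
print(cost_per_feature)
```

Grouping the same records by model and then by feature shows where spend concentrates, which is the comparison that informs model selection and routing decisions.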

Supported browsers

Observe works best with the latest versions of Chrome, Edge, Firefox, and Safari.