Negative Monitoring

“Is it down?!” is a common question among Observability teams. A strong signal that a system is down is that it stops reporting data. A monitor that watches for this behavior is referred to as a “negative monitor”.

Considerations For Negative Monitoring

1. Host Is Down: Heartbeats and Crash Signals

The most effective approach to negative monitoring is to convert it into a positive monitoring problem. Look first for positive signals that a system is down, such as:

System Crash Detection: Monitor for reliably bad states like crash looping in Kubernetes or a kernel panic in Linux. You can set up a count monitor on a matching log string that alerts when the count is greater than 0.

Heartbeat Monitoring: Set up regular heartbeat signals or metric deliveries from agents so that a system failure shows up quickly. You can use a count-style monitor that alerts when the count is 0.

These signals provide a lower-latency mechanism for detecting host or container crashes and disruptions.
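The two count-style checks above can be sketched in plain Python. This is only an illustration of the logic, not Observe's monitor implementation; the marker strings, host names, and function names are assumptions made up for the example.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical log substrings that indicate a crash (assumed for illustration).
CRASH_MARKERS = ("Kernel panic", "CrashLoopBackOff")


def crash_count(log_lines):
    """Count log lines containing any crash marker; alert when the count > 0."""
    return sum(any(marker in line for marker in CRASH_MARKERS) for line in log_lines)


def silent_hosts(heartbeats, expected_hosts, now, window):
    """Return expected hosts whose heartbeat count in (now - window, now] is 0."""
    cutoff = now - window
    counts = {host: 0 for host in expected_hosts}
    for host, timestamp in heartbeats:
        if host in counts and cutoff < timestamp <= now:
            counts[host] += 1
    return sorted(host for host, count in counts.items() if count == 0)


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
logs = ["service started", "Kernel panic - not syncing"]
heartbeats = [
    ("web-1", now - timedelta(seconds=30)),  # recent heartbeat
    ("web-2", now - timedelta(minutes=9)),   # outside the 5-minute window
]

print(crash_count(logs))  # 1
print(silent_hosts(heartbeats, ["web-1", "web-2", "web-3"], now, timedelta(minutes=5)))
# ['web-2', 'web-3']
```

Note that the heartbeat check needs a list of expected hosts: a host that never reported has no rows to count, so it can only be found by comparing against what should be there.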

2. Data Is Lumpy: Using Stabilization Delays

Negative monitors may suffer from false positives when data arrives late or comes from sparse sources. To reduce false positives caused by temporary data delivery issues:

Minimize transformation chains: Create monitors on datasets that are earlier in the processing pipeline.

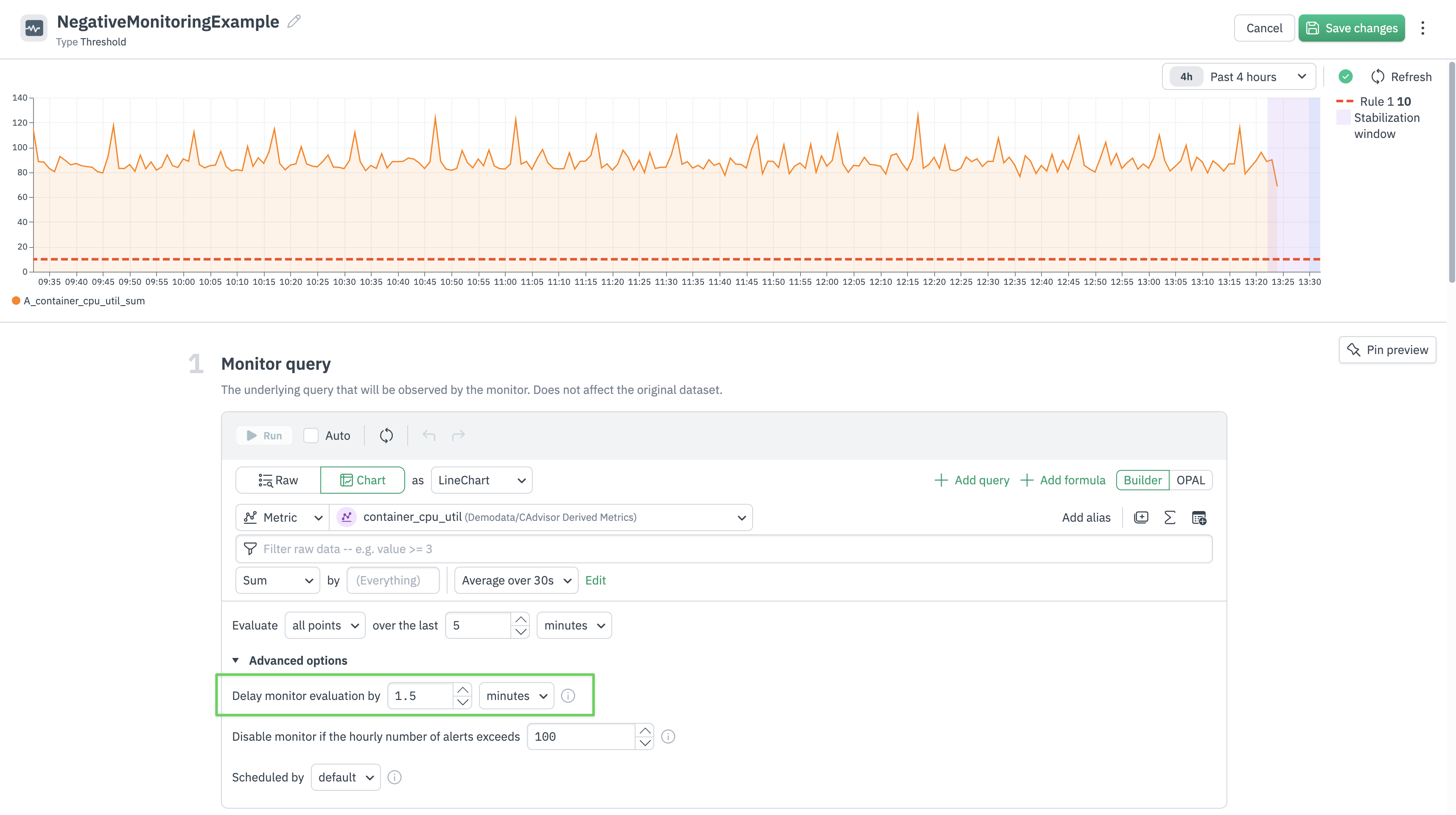

Use stabilization delays: In the monitor edit view, go to Monitor query → Advanced options → Delay monitor evaluation.

Set appropriate lookback windows: The lookback window is measured back from the stabilization delay, not from the current time.

Example: Setting Stabilization Delay

If analysis shows log data typically arrives 60 seconds after creation:

Set stabilization delay to 1.5 minutes (90 seconds).

For a 10-minute lookback, the monitor evaluates data from 11.5 minutes ago up to 1.5 minutes ago.

This gives upstream systems enough time to deliver records, so late-arriving data does not trigger a false positive.
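The window arithmetic in this example can be checked with a short Python sketch. The function name and the fixed timestamp are assumptions for illustration; the monitor itself computes this window for you.

```python
from datetime import datetime, timedelta, timezone


def evaluation_window(now, stabilization_delay, lookback):
    """Return (start, end) of the evaluated window.

    The window ends at now - stabilization_delay and extends
    back from that point by the lookback period.
    """
    end = now - stabilization_delay
    start = end - lookback
    return start, end


now = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
start, end = evaluation_window(
    now,
    stabilization_delay=timedelta(seconds=90),  # 1.5 minutes
    lookback=timedelta(minutes=10),
)

print(start.time(), end.time())  # 11:48:30 11:58:30
print(now - start, now - end)    # 0:11:30 0:01:30
```

Data created in the most recent 90 seconds is never evaluated, which is what keeps records that are still in flight from being counted as missing.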

Figure 1 - Stabilization Delay

3. Missing Data Detection: Using the “No data” Alert Type

Observe supports a dedicated No data rule and severity level for negative monitoring use cases. It can be enabled on any Threshold monitor. The evaluation period for the No data severity is tied to the evaluation settings of the monitor query, so changing those settings also changes the trigger condition for the No data severity.

Key features:

Dedicated alert rule: Configure No data alerts separate from threshold-based alerts.

Configurable missing data window: Set the duration for which data must be missing before alerting.

Integration with existing alert policies: No data alerts work in addition to existing threshold-based rules.

No Data Alert Lifecycle

Threshold monitors that use the No data rule have a default expiry equal to the lookback time of the monitor plus 2 hours. If your monitor has a lookback window of 10 minutes and a No data alert fires, the alert stays in a triggering state for at most 2 hours 10 minutes. If data does not arrive for more than 2 hours plus the lookback window, the alert stops triggering.

Triggering will only occur again after data starts to arrive, as this resets the alert conditions.
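As a quick check of the expiry arithmetic, here is a minimal Python sketch. It assumes the expiry is simply the lookback plus a fixed 2 hours, as described above; the constant and function names are made up for the example.

```python
from datetime import timedelta

# Fixed part of the default expiry described above.
NO_DATA_EXPIRY_EXTRA = timedelta(hours=2)


def max_triggering_duration(lookback):
    """Longest time a No data alert can remain in a triggering state."""
    return lookback + NO_DATA_EXPIRY_EXTRA


def still_triggering(time_since_fired, lookback):
    """True while the alert is within its default expiry (a simplified model)."""
    return time_since_fired <= max_triggering_duration(lookback)


lookback = timedelta(minutes=10)
print(max_triggering_duration(lookback))                # 2:10:00
print(still_triggering(timedelta(hours=1), lookback))   # True
print(still_triggering(timedelta(hours=3), lookback))   # False
```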

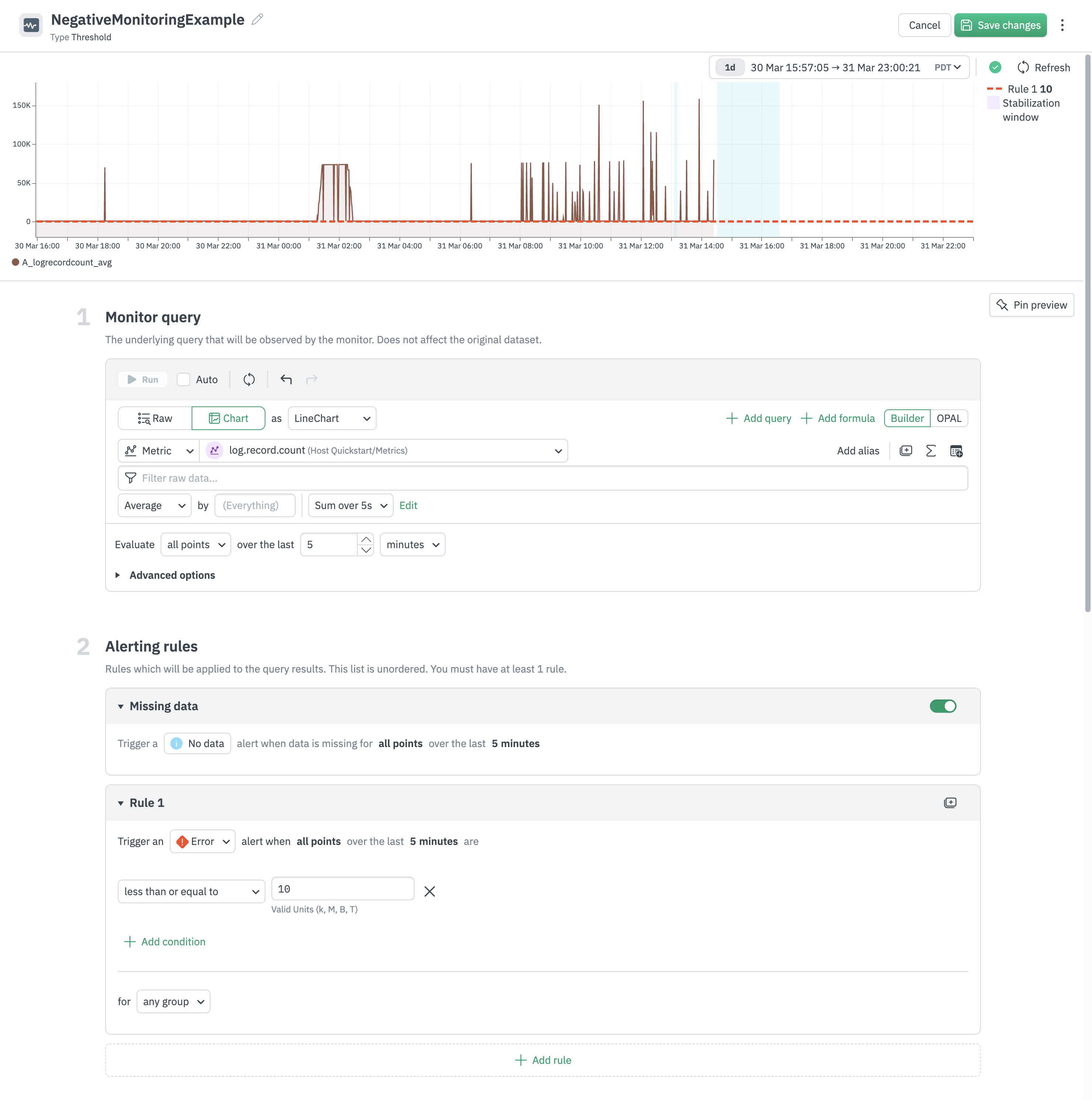

Example - Log Record Count Negative Monitor

With the new No data severity type, you can create negative monitors with a single click. In the screenshot, the monitor is configured to evaluate the metric log_record.count. The evaluation criterion is that all points over the last 5 minutes are evaluated.

The Missing data alerting rule has been enabled, so the monitor now alerts when Observe detects that no data has arrived in the last 5 minutes. This is indicated in the preview by the light-blue overlay.

You can also configure additional threshold rules. In this example, an Error severity alert is emitted if all points of the log_record.count metric in the last 5 minutes are less than or equal to 10.
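The way the two rules in this example combine can be sketched as follows. This is a simplified model of the described behavior, not Observe's evaluation engine; the function name and the order in which the rules are checked are assumptions.

```python
def evaluate_rules(points, threshold=10):
    """Classify the points seen in the evaluation window (last 5 minutes here).

    - no points at all              -> "No data"
    - every point <= threshold      -> "Error"
    - otherwise                     -> None (no alert)
    """
    if not points:
        return "No data"
    if all(value <= threshold for value in points):
        return "Error"
    return None


print(evaluate_rules([]))           # No data
print(evaluate_rules([3, 10, 7]))   # Error
print(evaluate_rules([3, 50, 7]))   # None
```

The empty case has to be handled first: in Python, `all()` over an empty list is true, so without the explicit check an empty window would be reported as a threshold breach instead of missing data.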

The steps for converting an existing Threshold monitor to support a negative monitoring use case are:

Configure your monitor query to track the metric of interest.

Under “Alerting rules”, select the “Missing data” option.

Configure No data to be consumed by an existing or new action.

Figure 2 - Negative Monitor Example

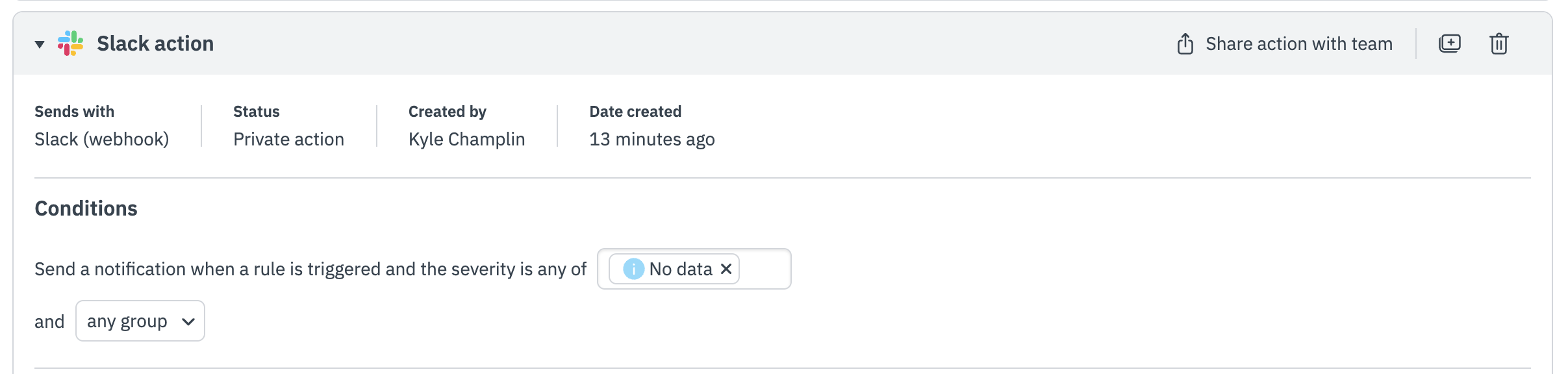

The No data severity can then be used in an action condition to route the No data/negative monitoring alert to the proper team and tool.

Figure 3 - Negative Monitor Routing
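Conceptually, an action condition on severity behaves like a lookup from severity to destination. The sketch below is only an illustration of that idea; the destination names and the fallback are hypothetical and are not Observe configuration.

```python
# Hypothetical destinations, chosen only for illustration.
ROUTES = {
    "No data": "platform-oncall",  # negative monitoring alerts
    "Error": "service-owners",     # threshold-based alerts
}


def route(severity, default="triage-queue"):
    """Pick a destination for an alert based on its severity."""
    return ROUTES.get(severity, default)


print(route("No data"))  # platform-oncall
print(route("Error"))    # service-owners
print(route("Warning"))  # triage-queue
```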