Observe AI SRE

Note

This is a private preview feature. Please contact your Observe representative for more information or open Docs & Support → Contact Support in the product and let us know.

Observe AI SRE is your AI-powered site reliability engineering (SRE) assistant for observability. Use AI SRE to investigate incidents, debug slow or error-prone services, and summarize observability insights across logs, metrics, and traces, all through natural language.

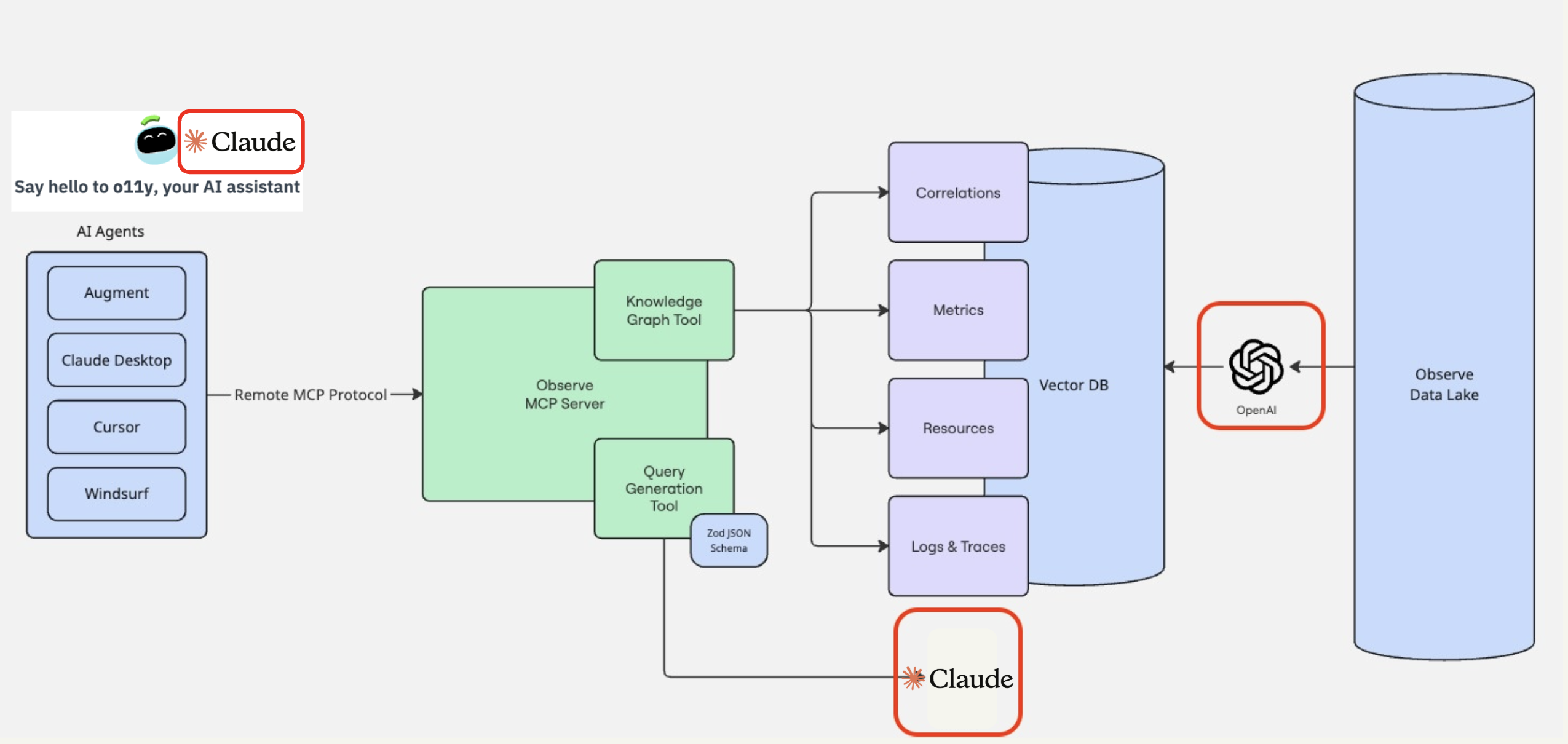

AI SRE uses Claude and OpenAI models securely. It relies on the same Observe MCP Server used by agentic tools such as Augment, Cursor, Claude Code, Claude Desktop, OpenAI Codex CLI, and Windsurf, so AI SRE can reason directly over your telemetry data.

How it works

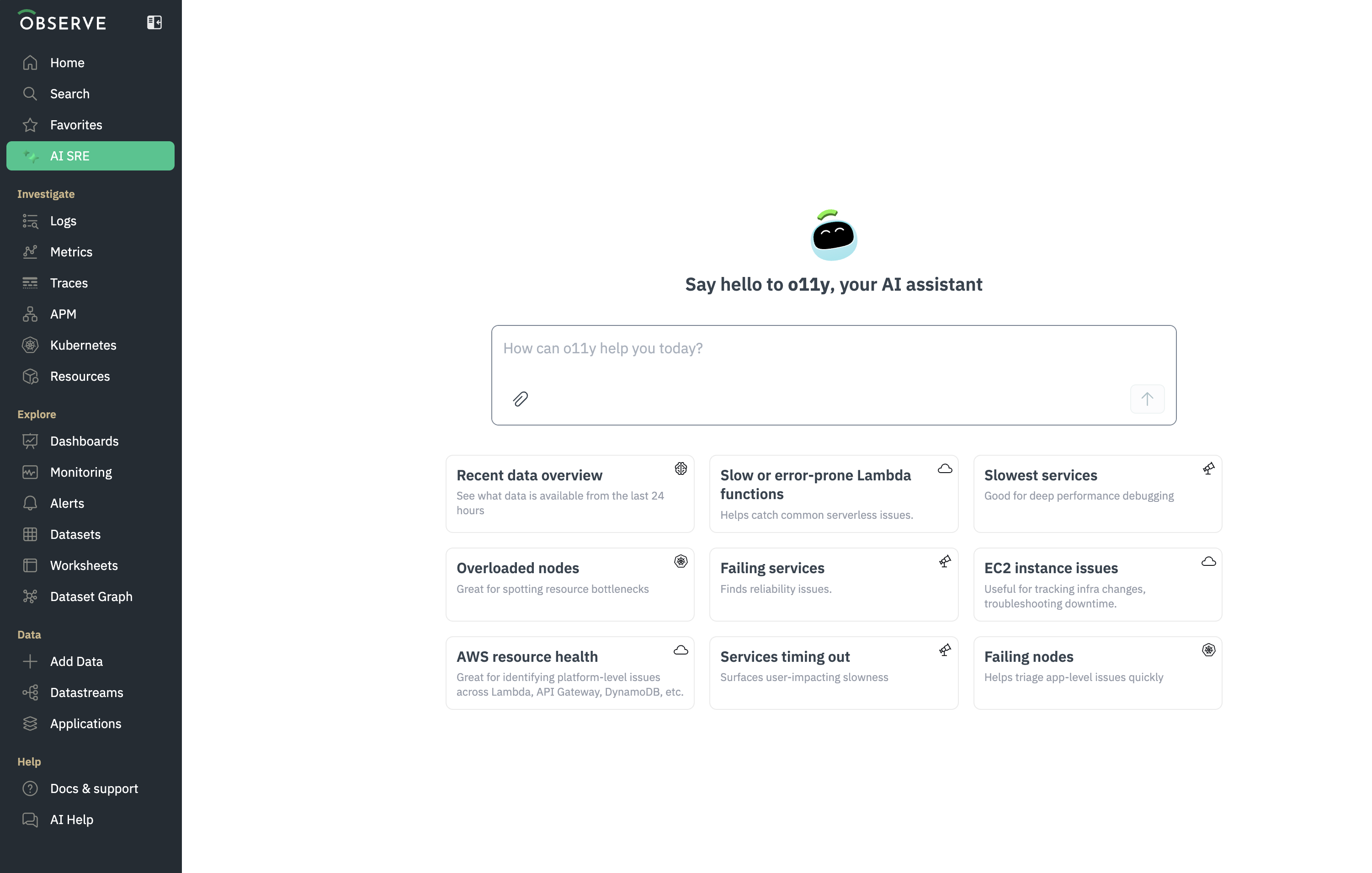

1. Access AI SRE by selecting AI SRE in the navigation bar.
2. Ask AI SRE a question in the text field, such as "Why did latency spike last night?" or "Show the slowest services in my cluster."
3. AI SRE interprets your intent using Claude Sonnet 4.5 and calls the Observe MCP Server.
4. The MCP Server accesses the Knowledge Graph Tool and Query Generation Tool to find relevant datasets (logs, metrics, traces, resources, correlations).
5. Observe executes the query and returns summarized insights directly in the chat.
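The flow above can be pictured with a small, purely illustrative sketch. Nothing here is Observe's actual implementation or API: the `Dataset` type, the `CATALOGUE` list, and the functions `find_candidate_datasets`, `generate_query`, and `answer` are hypothetical stand-ins for the Knowledge Graph Tool, the Query Generation Tool, and the chat response, and simple keyword matching takes the place of the model's reasoning.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    name: str
    kind: str                  # e.g. "logs", "metrics", "traces"
    keywords: tuple[str, ...]  # hypothetical tags used for matching


# Hypothetical in-memory catalogue standing in for the knowledge graph.
CATALOGUE = [
    Dataset("service_latency", "metrics", ("latency", "slow", "slowest")),
    Dataset("service_errors", "logs", ("error", "errors", "failure")),
]


def find_candidate_datasets(prompt: str) -> list[Dataset]:
    """Stand-in for the Knowledge Graph Tool: match prompt words to datasets."""
    words = set(prompt.lower().replace("?", "").split())
    return [d for d in CATALOGUE if words & set(d.keywords)]


def generate_query(prompt: str, datasets: list[Dataset]) -> str:
    """Stand-in for the Query Generation Tool: returns a placeholder description."""
    names = ", ".join(d.name for d in datasets)
    return f"query over [{names}] for: {prompt}"


def answer(prompt: str) -> str:
    """Chain the steps: find datasets, build a query, return a summary string."""
    datasets = find_candidate_datasets(prompt)
    if not datasets:
        return "No relevant datasets found."
    # A real system would execute the query and summarize the results here.
    return f"Ran {generate_query(prompt, datasets)}"


print(answer("Why did latency spike last night?"))
```

The point of the sketch is only the ordering of the steps: dataset discovery happens before query generation, and the summary is produced from the query result rather than from raw telemetry.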

What data leaves Observe?

AI SRE uses the same privacy-preserving Zero Data Retention (ZDR) mechanisms as the Observe MCP Server.

All requests are processed in memory by the model providers and are never stored or used for training.

Data sent to OpenAI

| Event | What we send to OpenAI | Purpose |
|---|---|---|
| Knowledge graph build / refresh | • Dataset schema (names & types) | Allows the agent to reason over your dataset catalogue. |
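To make "dataset schema (names & types)" concrete, here is a minimal sketch of reducing a dataset to a schema-only payload. It is an assumption for illustration, not Observe's real payload format: the function `schema_only`, the field names `dataset` and `columns`, and the sample rows are all hypothetical, and Python type names stand in for whatever type system the real schema uses.

```python
from typing import Any


def schema_only(name: str, rows: list[dict[str, Any]]) -> dict[str, Any]:
    """Return column names and inferred type names; row values are dropped."""
    columns: dict[str, str] = {}
    for row in rows:
        for column, value in row.items():
            # Record only the type name of the first value seen per column.
            columns.setdefault(column, type(value).__name__)
    return {
        "dataset": name,
        "columns": [{"name": c, "type": t} for c, t in columns.items()],
    }


# Hypothetical sample data; none of these values appear in the payload.
sample_rows = [
    {"service": "checkout", "latency_ms": 412.5},
    {"service": "cart", "latency_ms": 98.0},
]
payload = schema_only("service_latency", sample_rows)
print(payload)
```

In this sketch the payload carries the column names `service` and `latency_ms` with their types, while values such as `checkout` or `412.5` never leave the function.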

Data sent to Claude

| Event | What we send to Claude | Purpose |
|---|---|---|
| Runtime query | • A short dataset summary document for the candidate tables (no raw rows) | Helps the model choose the correct dataset(s) to answer the user’s question. |
| User query execution | • User’s natural-language prompt | Allows Claude to generate summaries, diagnostic explanations, and root-cause analyses based on Observe query results. |

OpenAI and Claude don’t train their models on your data by default, but we’d like to be transparent about the data that is sent to OpenAI and Claude when you use Observe AI SRE. In addition, all requests to both OpenAI and Claude use Zero Data Retention (ZDR): data is processed in memory and is never stored or logged.

Prerequisites

AI SRE cannot see or query any dataset that the user’s Observe role does not already grant access to.
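As a rough illustration of this constraint, the sketch below filters candidate datasets down to those a role is granted. The `ROLE_GRANTS` mapping, the role names, the dataset names, and the function `visible_datasets` are hypothetical; Observe's actual role-based access control is not described here and may work differently.

```python
# Hypothetical role-to-dataset grants, for illustration only.
ROLE_GRANTS: dict[str, set[str]] = {
    "viewer": {"service_latency"},
    "admin": {"service_latency", "billing_events"},
}


def visible_datasets(role: str, candidates: list[str]) -> list[str]:
    """Keep only the candidate datasets that the role is already granted."""
    granted = ROLE_GRANTS.get(role, set())  # unknown roles see nothing
    return [name for name in candidates if name in granted]


candidates = ["service_latency", "billing_events"]
print(visible_datasets("viewer", candidates))
print(visible_datasets("admin", candidates))
```

The design point is that filtering happens before any dataset is considered for a query, so a question from a restricted role can only be answered from data that role could already query directly.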

Try these questions

Try asking AI SRE the following questions to get started:

Why did error rate increase after deployment?

Show top 5 services by latency this morning.

Which Lambda functions are slow or error-prone?

What resources had CPU bottlenecks?

Summarize service health in the last 24 hours.