AWS data collection¶

Data can be pushed from your Amazon Web Services (AWS) environments into Observe, or pulled from them by Observe. This page provides information to help you decide whether it’s better for you to push or pull your data.

This table summarizes how to collect logs, metrics, and resources with both push and pull methods:

| | Push | Pull |
|---|---|---|
| Logs | Observe Forwarder, Observe LogWriter (CloudWatch logs only) | N/A |
| Metrics | MetricStream | CloudWatchMetrics Poller |
| Resources | Config or ConfigSubscription | AWSSnapshot Poller |

Push versus pull summary¶

What are the differences?

Push:

✅ handles much larger data volumes

✅ gives customers greater control (e.g., sending data to multiple destinations)

❌ bulk of configuration happens on the AWS side

❌ hard to debug when things go wrong

Pull:

✅ greater control over configuration

✅ better debuggability

❌ higher latency / longer poll interval

❌ rate limited

❌ requires third-party access to AWS

In summary, pull-based methods tend to provide better onboarding, while push-based methods tend to scale better.

Push log data into Observe¶

There are many sources of logs in AWS, such as Lambda function logs, Route53 Resolver query logs, VPC Flow logs, and ECS logs.

AWS provides the following methods for collecting logs. The method used depends on the log source:

CloudWatch is the only choice available for many AWS services. However, CloudWatch can become expensive.

Writing data to S3 is common in older services such as EC2 (VPC Flow logs).

For some services, you can stream data out using Firehose. A Firehose has exactly one destination of a supported type, such as HTTP, Splunk, or S3. Because Firehose can itself deliver to S3, it is preferred over writing directly to S3 for most newer services.

Logs can only be pushed into Observe. Write your logs to any S3 bucket, then Observe’s FileDrop connector can monitor that S3 bucket and automatically ingest new files.

Push or pull metrics into Observe¶

AWS funnels metrics through CloudWatch Metrics. In most cases, it’s not possible to bypass this service, and it can be very expensive.

Push metrics to Observe¶

You can push metrics to a Firehose via CloudWatch Metrics Streams. The cost model for metrics streams is shown below:

CloudWatch Metrics Stream: $0.003 per 1,000 metric updates

Firehose: $0.029 per GB ingested

The Firehose cost is typically a rounding error.

The core issue with Metrics Stream is that it doesn’t give you good options for reducing metric volume. Metrics are updated every minute, and you can only filter by:

Metric Namespace: e.g. Lambda, RDS, SQS

Metric Name: e.g. CPUUtilization, DiskReadBytes

This is problematic if you have namespaces with lots of resources (dimensions), but you only care about a few. For example, if you have 1,000 SQS queues, and there are only 8 metrics of interest per queue, you are going to have the following:

8,000 metric updates per minute

$0.024 per minute

About $34.56 a day

This may not sound like a lot, but if only a few of those queues matter to you, most of these metrics are worthless, so the cost is completely disconnected from the value they provide.
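As a rough check on the arithmetic in this example, the following minimal sketch computes the Metrics Stream charge from the price quoted above. It ignores the Firehose charge, and the function name is illustrative rather than part of any Observe or AWS tooling.

```python
# Metrics Stream price quoted above: $0.003 per 1,000 metric updates.
STREAM_PRICE_PER_1000_UPDATES = 0.003  # USD


def metric_stream_cost(resources, metrics_per_resource, minutes):
    """Estimate the Metrics Stream charge, assuming one update per metric per minute."""
    updates = resources * metrics_per_resource * minutes
    return updates / 1000 * STREAM_PRICE_PER_1000_UPDATES


# 1,000 SQS queues with 8 metrics of interest each.
per_minute = metric_stream_cost(1000, 8, minutes=1)
per_day = metric_stream_cost(1000, 8, minutes=24 * 60)
print(f"${per_minute:.3f} per minute, ${per_day:.2f} per day")
```

Because the stream cannot filter by dimension, the `resources` argument is always the full count in the namespace, not the number of queues you actually care about.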

Pull metrics into Observe¶

The alternative is to pull the metrics via the API using a poller instantiated via the Add Data Portal or Terraform. This is more expensive on a per-metric basis:

| API Request | Cost |
|---|---|
| GetMetricData | $0.01 per 1000 metrics |
| ListMetrics | $0.01 per 1000 requests |

However, pulling data gives you greater control, because you can:

Reduce frequency of collection

Filter by dimensions (metric tags)

In general, pulling data works better in the following cases:

You are only interested in a subset of instances within a service.

You want to significantly reduce the collection frequency, such as collecting RDS data every 10 minutes.
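To make the trade-off concrete, here is a minimal sketch that compares the daily cost of pushing and pulling, using only the prices quoted on this page. It is a simplified model: it ignores the Firehose and ListMetrics charges, assumes one GetMetricData charge per metric per poll, and uses illustrative function names.

```python
PUSH_PRICE_PER_UPDATE = 0.003 / 1000   # Metrics Stream: $0.003 per 1,000 metric updates
PULL_PRICE_PER_METRIC = 0.01 / 1000    # GetMetricData: $0.01 per 1,000 metrics requested
MINUTES_PER_DAY = 24 * 60


def daily_push_cost(total_metrics):
    """Push streams every metric in the namespace once per minute."""
    return total_metrics * MINUTES_PER_DAY * PUSH_PRICE_PER_UPDATE


def daily_pull_cost(total_metrics, fraction_wanted=1.0, interval_minutes=1):
    """Pull fetches only the wanted fraction of metrics, once per polling interval."""
    polls_per_day = MINUTES_PER_DAY / interval_minutes
    return total_metrics * fraction_wanted * polls_per_day * PULL_PRICE_PER_METRIC


total = 8000  # for example, 1,000 resources with 8 metrics each
print(f"push, everything, every minute:   ${daily_push_cost(total):.2f}")
print(f"pull, everything, every minute:   ${daily_pull_cost(total):.2f}")
print(f"pull, everything, every 10 min:   ${daily_pull_cost(total, interval_minutes=10):.2f}")
print(f"pull, 10% of metrics, every min:  ${daily_pull_cost(total, fraction_wanted=0.1):.2f}")
```

Under these assumptions, pulling costs roughly 3.3 times as much per metric fetched, so it becomes cheaper than pushing once the wanted fraction divided by the interval in minutes drops below about 0.3.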

The specific flow for the Observe poller is summarized below:

Call ListMetrics to fetch the available metric names for a given namespace. Dimension and metric name filters can be applied at this level.

Call GetMetricData for each of the metrics that match the filter from the ListMetrics calls.

For example, to collect CPUCreditUsage and CPUUtilization for EC2, we first use ListMetrics to fetch all available EC2 metric names and select CPUCreditUsage and CPUUtilization from them. ListMetrics is cheap: it is billed per call, and the first 1 million API calls each month are free. We then use GetMetricData to fetch only CPUCreditUsage and CPUUtilization, rather than fetching every available EC2 metric and throwing most of them away.
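The two-step flow can be sketched as follows. This is not the Observe poller's code; it is a minimal illustration that assumes a boto3-style CloudWatch client is passed in, and the helper names, the 5-minute period, and the `Average` statistic are choices made for the example.

```python
from datetime import datetime, timedelta, timezone

MAX_QUERIES_PER_CALL = 500  # GetMetricData accepts at most 500 queries per request


def list_matching_metrics(client, namespace, wanted_names):
    """Step 1: call ListMetrics and keep only the metric names we care about."""
    matches = []
    paginator = client.get_paginator("list_metrics")
    for page in paginator.paginate(Namespace=namespace):
        for metric in page["Metrics"]:
            if metric["MetricName"] in wanted_names:
                matches.append(metric)
    return matches


def build_queries(metrics, period_seconds=300, stat="Average"):
    """Build one GetMetricData query per metric returned by ListMetrics."""
    return [
        {
            "Id": f"m{index}",  # query IDs must start with a lowercase letter
            "MetricStat": {
                "Metric": {
                    "Namespace": metric["Namespace"],
                    "MetricName": metric["MetricName"],
                    "Dimensions": metric["Dimensions"],
                },
                "Period": period_seconds,
                "Stat": stat,
            },
            "ReturnData": True,
        }
        for index, metric in enumerate(metrics)
    ]


def fetch_metric_data(client, queries, lookback=timedelta(minutes=10)):
    """Step 2: call GetMetricData only for the filtered metrics."""
    end = datetime.now(timezone.utc)
    start = end - lookback
    results = []
    for offset in range(0, len(queries), MAX_QUERIES_PER_CALL):
        batch = queries[offset:offset + MAX_QUERIES_PER_CALL]
        # Following NextToken in the response is omitted for brevity.
        response = client.get_metric_data(
            MetricDataQueries=batch, StartTime=start, EndTime=end
        )
        results.extend(response["MetricDataResults"])
    return results
```

With boto3 installed and credentials configured, you could create the client with `boto3.client("cloudwatch")` and call `list_matching_metrics(client, "AWS/EC2", {"CPUCreditUsage", "CPUUtilization"})`, then pass the result through `build_queries` and `fetch_metric_data`. Only the metrics that survive the filter in step 1 incur the GetMetricData charge in step 2.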

Metrics decision tree¶

Apply the following decision tree for each service to help you determine if pulling or pushing data is the better option:

Push or pull resources into Observe¶

Push resources to Observe¶

AWS’s native service, Config, collects inventory data about AWS resources and delivers it in the following ways:

Config snapshots are dumped to an S3 bucket.

Config updates are streamed to an SNS Topic and published to the default EventBridge Event Bus.

AWS Config is a singleton: you can only enable a single recorder per region, per account. This means that if AWS Config is already enabled, we must ingest the data it already produces from S3 (using Forwarder) and EventBridge (using ConfigSubscription).

The pricing model is determined by the number of configuration items. The cost per item depends on the recording mode:

| Recording type | Cost per configuration item |
|---|---|
| Continuous recording | $0.003 |
| Periodic recording | $0.012 |

We typically make use of “continuous recording”, because we want to capture configuration changes as they happen.

It is tricky to predict how much AWS Config will cost because the cost depends on both the overall number of resources and the amount of configuration churn. In some cases, the cost of AWS Config far outweighs the perceived value. For example, CI accounts have very high configuration churn, but low value for observability use cases.
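The effect of churn can be illustrated with a minimal sketch. It uses the continuous recording price listed above and assumes, as a simplification, that every change to a resource records one configuration item. The account sizes and change rates are hypothetical numbers chosen for illustration, not measurements.

```python
CONTINUOUS_PRICE_PER_ITEM = 0.003  # USD per configuration item, continuous recording


def monthly_config_cost(resources, changes_per_resource_per_month):
    """Estimate the monthly AWS Config charge, assuming one item per resource change."""
    items = resources * changes_per_resource_per_month
    return items * CONTINUOUS_PRICE_PER_ITEM


# Hypothetical accounts: (number of resources, changes per resource per month).
accounts = {
    "production": (5000, 2),  # many resources, low churn
    "ci": (500, 200),         # few resources, very high churn
}

for name, (resources, churn) in accounts.items():
    cost = monthly_config_cost(resources, churn)
    print(f"{name}: ${cost:.2f} per month")
```

In this illustration, the CI account has a tenth of the resources but costs ten times as much, because churn rather than inventory size dominates the bill.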

Many customers already have AWS Config configured for compliance purposes, in which case the incremental cost of collecting the data is negligible.

Polling to get resources into Observe¶

If customers do not have AWS Config, the best we can do is periodically scrape their API. This can be done through the AWS snapshot poller.

The poller has significant limitations relative to AWS Config:

polling is infrequent: any changes between polls will not be captured. This approximates the “Periodic recording” mode of AWS Config.

rate limited: polling issues requests against the AWS API, which is rate limited. There is an upper bound on how many resources can be scraped in a timely fashion, and it is not uncommon for large customers to hit these limits.

only a subset of resources are supported: we need to write code for each service and resource we intend to support.

data schemas differ: we just dump the data the AWS API gives us. This can differ significantly from the AWS Config schemas. We pay the cost on the transform side by having separate transformation paths for snapshot data and Config data.

On the other hand, the snapshot poller is much cheaper than paying for AWS Config.

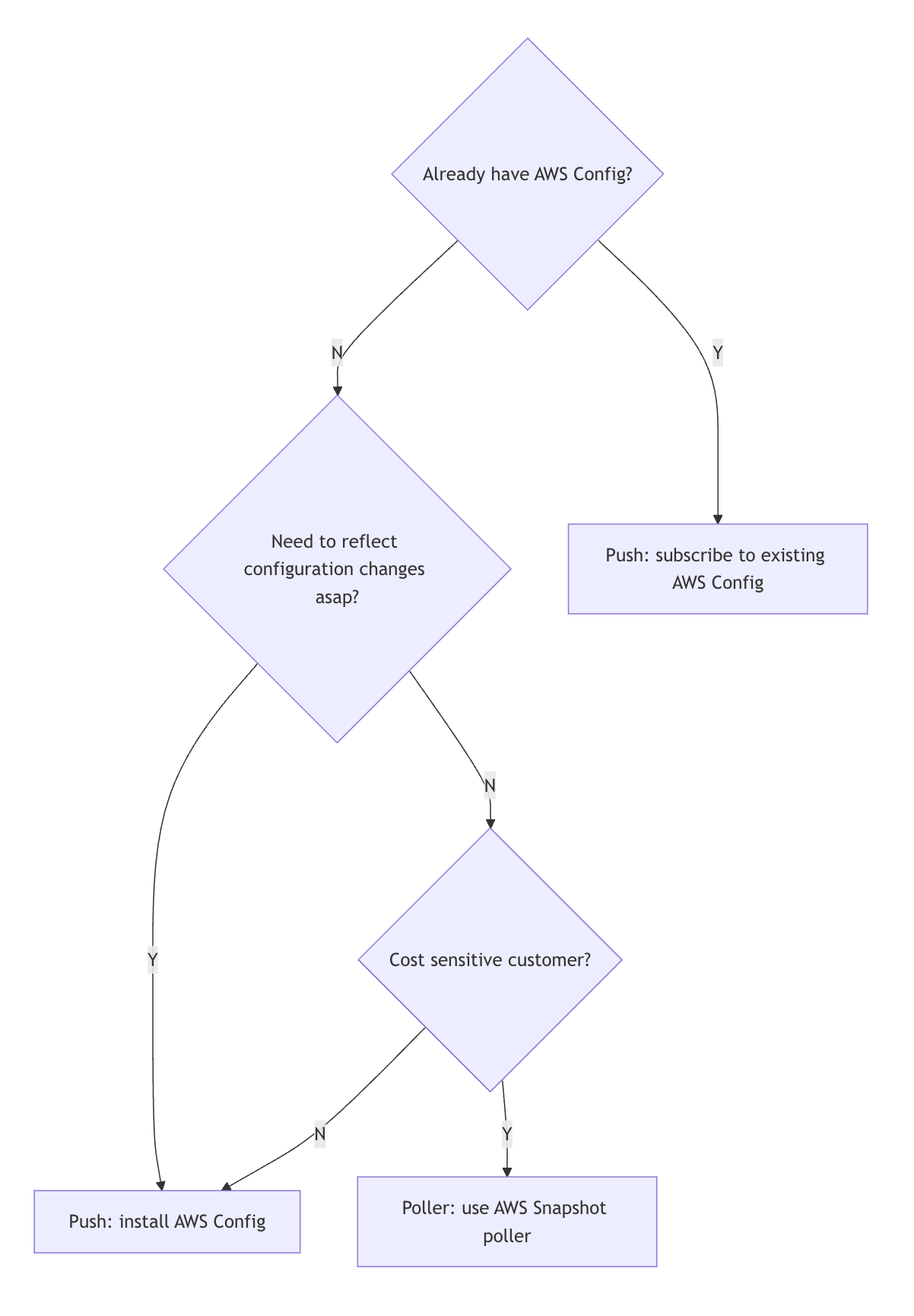

Resources decision tree¶