Configure your own OTel collector¶

This page describes the recommended way to configure a plain OTel collector to get data into Observe so that the proper Datasets are created to power core functionality in the Logs, Metrics, Trace, and Service Explorers.

Follow these instructions to get your data into Observe when you don’t want to use the Observe Agent.

Prerequisites¶

Verify the following assumptions and prepare for incoming data before you continue with setting up your own OTel collector.

Assumptions¶

You already have your own OTel collector running, and you are familiar with setting up the infrastructure required by the OTel collector. If either one is not true, use the Observe Agent.

The following placeholders are used throughout the document (the $$ sign helps differentiate them from variables):

$$OBSERVE_OTEL_ENDPOINT indicating the customer-specific HTTP collect endpoint for OTel data, such as https://123456.collect.eu1.observeinc.com/v2/otel for customer ID 123456.

$$OBSERVE_PROM_ENDPOINT indicating the customer-specific HTTP collect endpoint for Prometheus metrics data, such as https://123456.collect.eu1.observeinc.com/v1/prometheus for customer ID 123456.

$$EXPLORER_PACKAGE set to either Kubernetes Explorer for a Kubernetes use case, or Host Explorer for everything else. See Prepare for incoming data. The reason for this definition is that logs and Prometheus metrics are segregated in this form per current convention.

Your existing OTel collectors have receivers set up corresponding to the type of data being processed and the sources the data is coming from. In this document, the receiver types are referred to as $$metric_receiver(s), $$trace_receiver(s), and $$log_receiver(s), along with $$otel_metric_receiver(s) and $$prom_metric_receiver(s) to differentiate between Prometheus and OTel metrics sources.

This document focuses on how to set up processors and exporters in pipelines so that correctly enriched data is ingested into Observe.

Where required, we’ll also suggest additional configuration for your instrumentation SDK or your application, again assuming that the basic setup to export to the corresponding OTel collector receivers is in place.

Prepare for incoming data¶

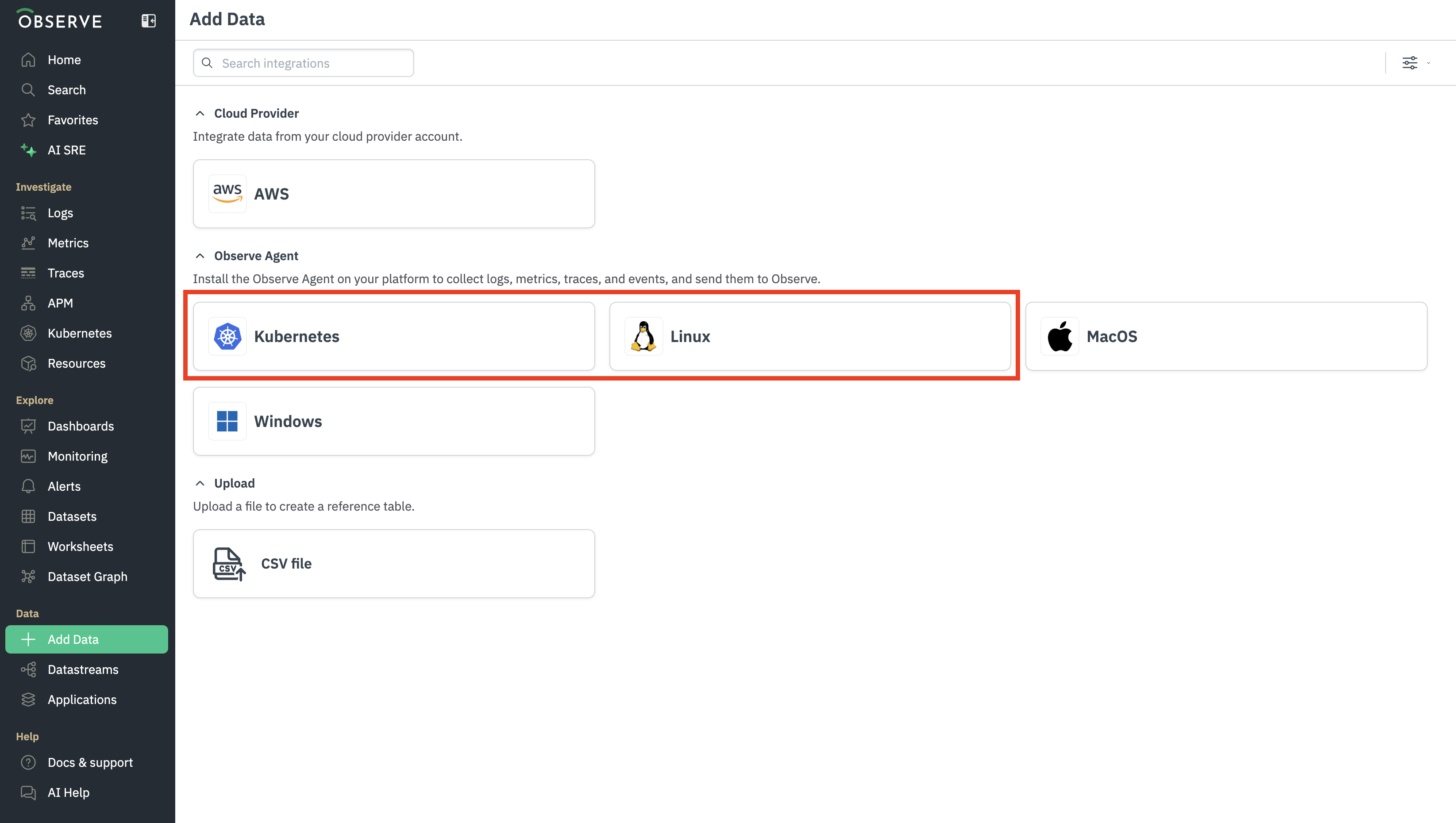

Use the Add Data portal to do the following:

Perform a one-time setup for the source datasets that will receive the incoming data.

Configure a Kubernetes integration if you want to configure the collector in a Kubernetes environment.

Configure a Linux integration for all other environments, even if you are not running on Linux.

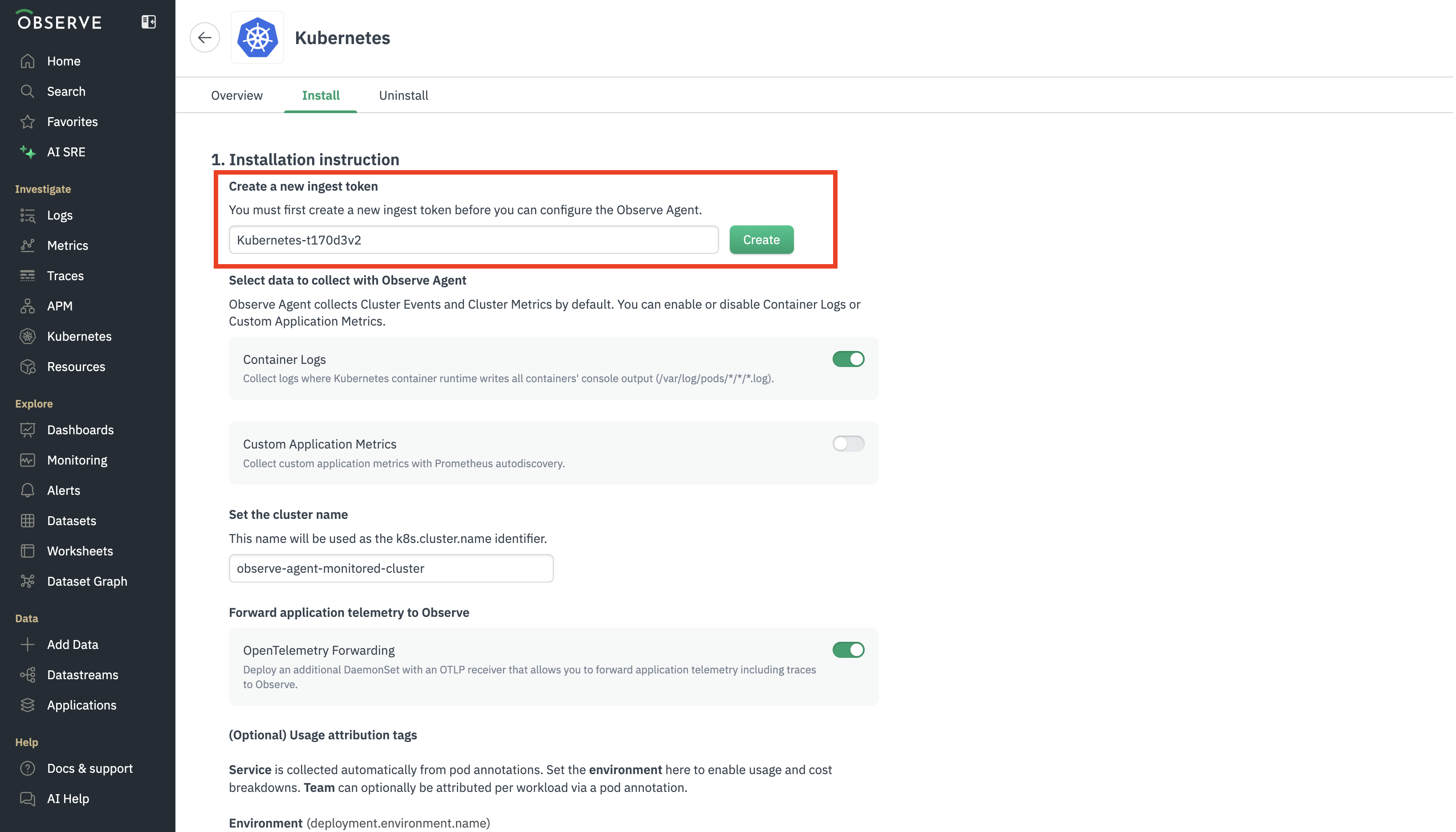

As part of this setup, you will create an ingest token to authenticate the collector with Observe. Create separate tokens for each cluster, deployment, or team.

The following example shows this step in the Kubernetes instructions:

Basic setup¶

Common Observe Agent/processor setup¶

Use an appropriate secret store to store the Ingest token and make sure the token is available to the collector from the OBSERVE_TOKEN environment variable. See Collector configuration best practices in the OpenTelemetry documentation.
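In a Kubernetes deployment, one way to meet this requirement is to keep the token in a Secret and expose it to the collector container as OBSERVE_TOKEN. The fragment below is a minimal sketch of the container spec only. The Secret name observe-ingest-token, its key token, the container name, and the image reference are assumptions for illustration, not values defined by Observe.

```yaml
# Fragment of a collector Deployment/DaemonSet pod spec (all names are hypothetical).
containers:
  - name: otel-collector
    image: otel/opentelemetry-collector-contrib:latest  # pin a specific version in practice
    env:
      - name: OBSERVE_TOKEN
        valueFrom:
          secretKeyRef:
            name: observe-ingest-token   # hypothetical Secret that holds the ingest token
            key: token                   # hypothetical key inside that Secret
```

With this in place, the exporter headers shown later on this page can reference the token as ${env:OBSERVE_TOKEN} without embedding it in the collector configuration.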

Other best practices are summarized below:

Set a memory_limiter processor. For example:

memory_limiter:
  check_interval: 10s
  limit_percentage: 80
  spike_limit_percentage: 15

Set up a batch processor. For example:

batch/observe/metrics:
  send_batch_max_size: 20480
  send_batch_size: 16384
  timeout: 1s

batch/observe/logs:
  send_batch_max_size: 4096
  send_batch_size: 4096
  timeout: 5s

batch/observe/traces:
  send_batch_max_size: 4096
  send_batch_size: 4096
  timeout: 5s

Add cloud resource detectors, which require the otelcol-contrib collector distribution:

resourcedetection/cloud:
  detectors: ["eks", "ecs", "ec2", "gcp", "azure"]
  timeout: 2s
  override: false

Kubernetes setup¶

Set up a k8sattributes processor, as shown in the following example. See Important Components for Kubernetes in the OpenTelemetry documentation.

processors:
  k8sattributes:
    extract:
      metadata:
        - k8s.namespace.name
        - k8s.deployment.name
        - k8s.replicaset.name
        - k8s.statefulset.name
        - k8s.daemonset.name
        - k8s.cronjob.name
        - k8s.job.name
        - k8s.node.name
        - k8s.node.uid
        - k8s.pod.name
        - k8s.pod.uid
        - k8s.cluster.uid
        - k8s.container.name
        - container.id
        - service.namespace
        - service.name
        - service.version
        - service.instance.id
      otel_annotations: true
    passthrough: false
    pod_association:
      - sources:
          - from: resource_attribute
            name: k8s.pod.ip
      - sources:
          - from: resource_attribute
            name: k8s.pod.uid
      - sources:
          - from: connection

This is close to the standard setup shipped with the Observe Agent. If you don’t need all of this information, you can drop the corresponding entries from metadata.

In order to power Service Explorer, the fields service.name and service.namespace must be added to any span data. They are automatically derived per Specify resource attributes using Kubernetes annotations in the OpenTelemetry documentation.

Most functionality requires a cluster and deployment environment to be specified. For example:

processors:
  resource/observe_common:
    attributes:
      - key: k8s.cluster.name
        action: upsert
        value: $$CLUSTER_NAME
      - key: deployment.environment.name
        action: upsert
        value: $$ENVIRONMENT

Others¶

Specify a resource/observe_common processor. For example:

processors:
  resource/observe_common:
    attributes:
      - key: deployment.environment.name
        action: upsert
        value: $$ENVIRONMENT

Alternatively, you can set deployment.environment.name in your SDK or application.

Add the following recommended resource detectors. This requires the otelcol-contrib collector distribution:

resourcedetection:
  detectors: [env, system]
  system:
    hostname_sources: ["dns", "os"]
    resource_attributes:
      host.id:
        enabled: false
      os.type:
        enabled: true
      host.arch:
        enabled: true
      host.name:
        enabled: true
      os.description:
        enabled: true

Logs setup¶

The exporter and batch values in the following example follow our recommended settings but can be adapted as needed. Keep batches below the request size limit of the OTel endpoint (50 MB). Additional processors, such as resourcedetection, can be added as needed.

processors:
  batch/observe/logs:
    send_batch_max_size: 4096
    send_batch_size: 4096
    timeout: 5s

exporters:
  otlphttp/observe/logs:
    compression: zstd
    endpoint: $$OBSERVE_OTEL_ENDPOINT # e.g., https://123456.collect.observeinc.com/v2/otel
    headers:
      authorization: Bearer ${env:OBSERVE_TOKEN} # embed directly if not in env
      x-observe-target-package: $$EXPLORER_PACKAGE # Kubernetes Explorer or Host Explorer
    retry_on_failure:
      enabled: true
      initial_interval: 1s
      max_elapsed_time: 5m
      max_interval: 30s
    sending_queue:
      enabled: true

service:
  pipelines:
    logs/observe:
      exporters: [otlphttp/observe/logs]
      receivers: $$log_receiver(s)
      processors:
        - memory_limiter
        # - k8sattributes # for kubernetes
        # - resourcedetection # for hosts
        # - resourcedetection/cloud # optional
        - batch/observe/logs
        - resource/observe_common
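To make the $$log_receiver(s) placeholder concrete, the sketch below shows one possible way to fill it in for a plain host. It assumes an OTLP receiver and a filelog receiver (the latter is only available in the contrib distribution). The log path is hypothetical, and the processors and exporter are the ones defined earlier on this page.

```yaml
receivers:
  otlp:                               # OTLP logs from SDKs or other collectors
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
  filelog:                            # tails log files; contrib distribution only
    include: [/var/log/myapp/*.log]   # hypothetical path

service:
  pipelines:
    logs/observe:
      receivers: [otlp, filelog]      # takes the place of $$log_receiver(s)
      processors:
        - memory_limiter
        - resourcedetection           # host setup; use k8sattributes on Kubernetes
        - batch/observe/logs
        - resource/observe_common
      exporters: [otlphttp/observe/logs]
```

The same substitution pattern applies to the metric and trace receiver placeholders used in the following sections.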

Metrics setup¶

The metrics setup depends on whether you send Prometheus metrics, OTel metrics, or both. Our recommended setup is shown below:

processors:
  batch/observe/metrics:
    send_batch_max_size: 20480
    send_batch_size: 16384
    timeout: 1s

exporters:
  otlphttp/observe/metrics:
    compression: zstd
    endpoint: $$OBSERVE_OTEL_ENDPOINT # e.g., https://123456.collect.observeinc.com/v2/otel
    headers:
      authorization: Bearer ${env:OBSERVE_TOKEN} # embed directly if not in env
      x-observe-target-package: Metrics # OTel metrics go in a common "Metrics" package
    retry_on_failure:
      enabled: true
      initial_interval: 1s
      max_elapsed_time: 5m
      max_interval: 30s
    sending_queue:
      enabled: true
  prometheusremotewrite/observe:
    endpoint: $$OBSERVE_PROM_ENDPOINT # e.g., https://123456.collect.observeinc.com/v1/prometheus
    headers:
      authorization: Bearer ${env:OBSERVE_TOKEN} # embed directly if not in env
      x-observe-target-package: $$EXPLORER_PACKAGE # Kubernetes Explorer or Host Explorer
    max_batch_request_parallelism: 10
    remote_write_queue:
      num_consumers: 10
    resource_to_telemetry_conversion:
      enabled: true
    send_metadata: true
    timeout: 10s

service:
  pipelines:
    metrics/observe/prom:
      exporters: [prometheusremotewrite/observe]
      receivers: $$prom_metric_receiver(s)
      processors:
        - memory_limiter
        # - k8sattributes # for kubernetes
        # - resourcedetection # for hosts
        # - resourcedetection/cloud # optional
        - batch/observe/metrics
        - resource/observe_common
    metrics/observe/otel:
      exporters: [otlphttp/observe/metrics]
      receivers: $$otel_metric_receiver(s)
      processors:
        - memory_limiter
        # - k8sattributes # for kubernetes
        # - resourcedetection # for hosts
        # - resourcedetection/cloud # optional
        - batch/observe/metrics
        - resource/observe_common

To allow parallel writers, the corresponding feature gate must be enabled, so start the OTel collector with the following additional parameter:

--feature-gates=+exporter.prometheusremotewritexporter.EnableMultipleWorkers

We recommend this setting as it can provide significantly higher remote write throughput.
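If the collector runs as a Kubernetes workload, the flag is typically passed through the container arguments. The fragment below is a sketch only; the configuration file path is hypothetical and depends on how your collector is packaged.

```yaml
# Fragment of a collector container spec (the config path is hypothetical).
args:
  - --config=/conf/collector.yaml
  - --feature-gates=+exporter.prometheusremotewritexporter.EnableMultipleWorkers
```

On a plain host, the same --feature-gates argument is appended to the collector command line or service unit instead.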

It’s also possible to send all metrics as Prometheus or as OTel. When sending OTel metrics to Prometheus, it’s important to enable the deltatocumulative processor, because Prometheus doesn’t handle delta values.
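As a sketch of the OTel-to-Prometheus case, the pipeline below routes OTel metrics to the Prometheus remote write exporter and converts delta values first. It assumes the contrib distribution, where the conversion processor is registered as deltatocumulative, and uses its default settings. The pipeline name metrics/observe/otel_as_prom is hypothetical.

```yaml
processors:
  deltatocumulative:                   # contrib processor, default settings

service:
  pipelines:
    metrics/observe/otel_as_prom:      # hypothetical pipeline name
      receivers: $$otel_metric_receiver(s)
      processors:
        - memory_limiter
        - deltatocumulative            # convert delta datapoints before remote write
        - batch/observe/metrics
        - resource/observe_common
      exporters: [prometheusremotewrite/observe]
```

Placing the conversion before batching keeps each series' accumulation in one place; metrics that are already cumulative should pass through unchanged.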

Traces setup¶

Use the following configuration for traces:

processors:
  batch/observe/traces:
    send_batch_max_size: 4096
    send_batch_size: 4096
    timeout: 5s
  # this is some custom observe-specific logic that simplifies http-status code lookups
  # by coalescing common status code attributes
  transform/add_span_status_code:
    error_mode: ignore
    trace_statements:
      - set(span.attributes["observe.status_code"], Int(span.attributes["rpc.grpc.status_code"])) where span.attributes["observe.status_code"] == nil and span.attributes["rpc.grpc.status_code"] != nil
      - set(span.attributes["observe.status_code"], Int(span.attributes["grpc.status_code"])) where span.attributes["observe.status_code"] == nil and span.attributes["grpc.status_code"] != nil
      - set(span.attributes["observe.status_code"], Int(span.attributes["rpc.status_code"])) where span.attributes["observe.status_code"] == nil and span.attributes["rpc.status_code"] != nil
      - set(span.attributes["observe.status_code"], Int(span.attributes["http.status_code"])) where span.attributes["observe.status_code"] == nil and span.attributes["http.status_code"] != nil
      - set(span.attributes["observe.status_code"], Int(span.attributes["http.response.status_code"])) where span.attributes["observe.status_code"] == nil and span.attributes["http.response.status_code"] != nil
  # This drops spans that are longer than the configured time.
  # The Observe default is 1 hour to match service explorer behavior.
  filter/drop_long_spans:
    error_mode: ignore
    traces:
      span:
        - (span.end_time - span.start_time) > Duration("1h")

exporters:
  otlphttp/observe/traces:
    compression: zstd
    endpoint: $$OBSERVE_OTEL_ENDPOINT # e.g., https://123456.collect.observeinc.com/v2/otel
    headers:
      authorization: Bearer ${env:OBSERVE_TOKEN} # embed directly if not in env
      x-observe-target-package: Tracing # Traces go in a shared Tracing package
    retry_on_failure:
      enabled: true
      initial_interval: 1s
      max_elapsed_time: 5m
      max_interval: 30s
    sending_queue:
      enabled: true

service:
  pipelines:
    traces/observe:
      exporters: [otlphttp/observe/traces]
      receivers: $$trace_receiver(s)
      processors:
        - memory_limiter
        - filter/drop_long_spans
        # - k8sattributes # for kubernetes
        # - resourcedetection # for hosts
        # - resourcedetection/cloud # optional
        - transform/add_span_status_code
        - batch/observe/traces
        - resource/observe_common

Additional setups¶

Critical (Resource) attributes for Service Explorer and other Explorers¶

In most cases, the critical attributes used by our Explorers, most importantly Service Explorer, are auto-populated by the basic setup above, with the k8sattributes processor doing the heavy lifting. Some extra work is needed, however, especially for plain host setups.

service.name/service.version: If you are not using Kubernetes with the k8sattributes processor, the OTel SDK should specify service.name and service.version via the OTEL_SERVICE_NAME environment variable or SDK-specific means. The service.name is critical for Service Explorer to work at all.

peer.db.name (db.system/db.system.name): peer.db.name is used instead of service.name to produce database service call metrics in the absence of trace recording in the database system itself. It can be set manually or derived from the official OTel semantic convention values, which recent SDKs might add automatically.

peer.messaging.system: Like peer.db.name, peer.messaging.system is used instead of service.name to produce remote system call metrics in the absence of trace instrumentation on the target system. In that case, it has to be set manually in application code.

team.name: This is a default attribute for volume attribution processing and can be defined via resource.opentelemetry.io/team.name on a Kubernetes pod or via the OTel SDK OTEL_RESOURCE_ATTRIBUTES environment variable.
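The attributes above can be supplied from a workload manifest. The sketch below shows both routes side by side on a single pod: a resource.opentelemetry.io/ annotation picked up by the k8sattributes processor, and the standard OTel SDK environment variables. The pod name, image, and all attribute values (checkout, payments, 1.4.2) are hypothetical; on a plain host only the environment variables apply.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: checkout                                      # hypothetical workload
  annotations:
    resource.opentelemetry.io/team.name: payments     # read via otel_annotations: true
spec:
  containers:
    - name: app
      image: registry.example.com/checkout:1.4.2      # hypothetical image
      env:
        - name: OTEL_SERVICE_NAME                     # sets service.name in the SDK
          value: checkout
        - name: OTEL_RESOURCE_ATTRIBUTES              # comma-separated key=value pairs
          value: service.version=1.4.2,team.name=payments
```

In practice you would usually pick one route per attribute; both are shown here only to illustrate the syntax.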

RED metrics from span and traces sampling¶

To generate RED metrics at the collection point, before any sampling, we can leverage the official spanmetrics connector. However, we also require a significant amount of custom processing, both because of shortcomings of that connector around (resource) aggregation and because of our own need to normalize to Observe-specific metrics. The following illustrates a full setup, with explanations for the specific processors inline in the configuration. It assumes that traces are received from $$trace_receiver(s) and that an OTLP metric exporter is set up per the Metrics setup section:

connectors:
  # this is the main processing that performs the aggregation
  spanmetrics:
    aggregation_temporality: AGGREGATION_TEMPORALITY_DELTA
    # This connector implicitly adds: service.name, span.name, span.kind, and status.code (which we rename to otel.status_code)
    # https://github.com/open-telemetry/opentelemetry-collector-contrib/blob/main/connector/spanmetricsconnector/connector.go#L528-L540
    # we additionally break down by the dimensions below
    dimensions:
      - name: service.namespace
      - name: service.version
      - name: deployment.environment
      - name: k8s.pod.name
      - name: k8s.namespace.name
      - name: peer.db.name
      - name: peer.messaging.system
      # consider removing this dimension if messages contain random IDs or add normalization pre-processing
      - name: otel.status_description
      - name: observe.status_code
    histogram:
      exponential:
        max_size: 100

processors:
  # This handles schema normalization as well as moving status to attributes so it can be a dimension in spanmetrics
  transform/shape_spans_for_red_metrics:
    error_mode: ignore
    trace_statements:
      # peer.db.name = coalesce(peer.db.name, db.system.name, db.system)
      - set(span.attributes["peer.db.name"], span.attributes["db.system.name"]) where span.attributes["peer.db.name"] == nil and span.attributes["db.system.name"] != nil
      - set(span.attributes["peer.db.name"], span.attributes["db.system"]) where span.attributes["peer.db.name"] == nil and span.attributes["db.system"] != nil
      # deployment.environment = coalesce(deployment.environment, deployment.environment.name)
      - set(resource.attributes["deployment.environment"], resource.attributes["deployment.environment.name"]) where resource.attributes["deployment.environment"] == nil and resource.attributes["deployment.environment.name"] != nil
      # Needed because `spanmetrics` connector can only operate on attributes or resource attributes.
      - set(span.attributes["otel.status_description"], span.status.message) where span.status.message != ""
  # This regroups the metrics by the peer attributes so we can remove `service.name` from the resource when these metric attributes are present
  # NB: these will be deleted from the metric attributes and added to the resource.
  groupbyattrs/peers:
    keys:
      - peer.db.name
      - peer.messaging.system
  # This moves the peer attributes from the resource back to the datapoint after we have regrouped the metrics.
  transform/fix_peer_attributes:
    error_mode: ignore
    metric_statements:
      - set(datapoint.attributes["peer.db.name"], resource.attributes["peer.db.name"]) where resource.attributes["peer.db.name"] != nil
      - set(datapoint.attributes["peer.messaging.system"], resource.attributes["peer.messaging.system"]) where resource.attributes["peer.messaging.system"] != nil
  # This removes service.name for generated RED metrics associated with peer systems.
  transform/remove_service_name_for_peer_metrics:
    error_mode: ignore
    metric_statements:
      - delete_key(resource.attributes, "service.name") where datapoint.attributes["peer.db.name"] != nil or datapoint.attributes["peer.messaging.system"] != nil
  # This drops spans that are not relevant for Service Explorer RED metrics.
  filter/drop_span_kinds_other_than_server_and_consumer_and_peer_client:
    error_mode: ignore
    traces:
      span:
        - span.kind == SPAN_KIND_CLIENT and span.attributes["peer.messaging.system"] == nil and span.attributes["peer.db.name"] == nil and span.attributes["db.system.name"] == nil and span.attributes["db.system"] == nil
        - span.kind == SPAN_KIND_UNSPECIFIED
        - span.kind == SPAN_KIND_INTERNAL
        - span.kind == SPAN_KIND_PRODUCER
  # The spanmetrics connector puts all dimensions as attributes on the datapoint, and copies the resource attributes from an arbitrary span's resource. This cleans that up as well as handling any other renaming.
  transform/fix_red_metrics_resource_attributes:
    error_mode: ignore
    metric_statements:
      - keep_matching_keys(resource.attributes, "^(service.name|service.namespace|service.version|deployment.environment|k8s.pod.name|k8s.namespace.name)")
      - delete_matching_keys(datapoint.attributes, "^(service.name|service.namespace|service.version|deployment.environment|k8s.pod.name|k8s.namespace.name)")
      - set(datapoint.attributes["otel.status_code"], "OK") where datapoint.attributes["status.code"] == "STATUS_CODE_OK"
      - set(datapoint.attributes["otel.status_code"], "ERROR") where datapoint.attributes["status.code"] == "STATUS_CODE_ERROR"
      - delete_key(datapoint.attributes, "status.code")

service:
  pipelines:
    traces/spanmetrics:
      exporters: [spanmetrics]
      receivers: $$trace_receiver(s)
      processors:
        - memory_limiter # Defined in the common setup
        - filter/drop_long_spans # Defined in Traces section
        - filter/drop_span_kinds_other_than_server_and_consumer_and_peer_client
        - transform/shape_spans_for_red_metrics
        - transform/add_span_status_code # Defined in Traces section
        # - k8sattributes # for kubernetes
    metrics/spanmetrics:
      exporters: [otlphttp/observe/metrics]
      receivers: [spanmetrics]
      processors:
        - memory_limiter # Defined in the common setup
        - groupbyattrs/peers
        - transform/fix_peer_attributes
        - transform/remove_service_name_for_peer_metrics
        - transform/fix_red_metrics_resource_attributes
        - batch/observe/metrics # Defined in the common setup
        - resource/observe_common # Defined in the k8s section
        # - resourcedetection/cloud # optional
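Because the traces/spanmetrics pipeline reads directly from the trace receivers, sampling can be applied in the export pipeline without affecting the generated RED metrics. The sketch below assumes the contrib probabilistic_sampler processor and an arbitrary 10 percent rate; this page does not prescribe a particular sampler, so treat both as illustrative choices.

```yaml
processors:
  probabilistic_sampler:
    sampling_percentage: 10            # illustrative rate, not an Observe recommendation

service:
  pipelines:
    traces/observe:                    # export pipeline from the Traces section
      receivers: $$trace_receiver(s)
      processors:
        - memory_limiter
        - filter/drop_long_spans
        - probabilistic_sampler        # sampling applies only to exported spans
        - transform/add_span_status_code
        - batch/observe/traces
        - resource/observe_common
      exporters: [otlphttp/observe/traces]
```

The traces/spanmetrics pipeline is left without a sampler, so request rates, error rates, and durations are still computed from every span.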

Kubernetes Explorer¶

We recommend following the general OTel guidance described in Important Components for Kubernetes in the OpenTelemetry documentation. Those components are mostly receivers that can be set up in daemonset and/or deployment/gateway mode, and they should be easy to combine with the processor and exporter instructions in our basic setup above. This requires multiple workloads to be set up, whereas a host setup generally needs only one.

Kubernetes Explorer expects some reflective metrics, such as CPU utilization, to be flowing, so you must set up the Kubeletstats receiver and the Kubernetes cluster receiver to export through the Prometheus exporter (we do not currently support reading OTel metrics for this purpose).
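A minimal sketch of that wiring is shown below as two separate collector configurations, one for a daemonset and one for a single-replica deployment. The collection intervals, the K8S_NODE_NAME environment variable (assumed to be injected through the Kubernetes downward API), and the split across workloads are assumptions based on general OTel guidance, not Observe-specific requirements. The processors and the prometheusremotewrite/observe exporter are the ones defined in the Metrics setup section.

```yaml
# Daemonset collector: per-node kubelet metrics.
receivers:
  kubeletstats:
    collection_interval: 60s
    auth_type: serviceAccount
    endpoint: https://${env:K8S_NODE_NAME}:10250   # assumes K8S_NODE_NAME is set on the pod

service:
  pipelines:
    metrics/observe/prom:
      receivers: [kubeletstats]
      processors: [memory_limiter, k8sattributes, batch/observe/metrics, resource/observe_common]
      exporters: [prometheusremotewrite/observe]
---
# Single-replica deployment collector: cluster-level metrics.
receivers:
  k8s_cluster:
    collection_interval: 60s

service:
  pipelines:
    metrics/observe/prom:
      receivers: [k8s_cluster]
      processors: [memory_limiter, batch/observe/metrics, resource/observe_common]
      exporters: [prometheusremotewrite/observe]
```

Running the cluster receiver in exactly one replica avoids duplicate cluster-level series, which is why it is kept out of the daemonset here.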

Finally, the most critical requirement for Kubernetes Explorer is to set up the Kubernetes object receiver in a very specific way and connect it to Observe’s custom k8sattributes processor (observek8sattributes in the example below). This requires a custom OTel collector build and a collector configuration similar to the example below, with the data sent to a custom k8s_entity endpoint in Observe.

Below is a Kubernetes object processing pipeline example ("k8s entity"):

processors:
  observek8sattributes:
  transform/cluster:
    error_mode: ignore
    log_statements:
      - context: log
        statements:
          - set(attributes["observe_filter"], "objects_pull_watch")
          - set(attributes["observe_transform"]["control"]["isDelete"], true) where body["object"] != nil and body["type"] == "DELETED"
          - set(attributes["observe_transform"]["control"]["debug_source"], "watch") where body["object"] != nil and body["type"] != nil
          - set(attributes["observe_transform"]["control"]["debug_source"], "pull") where body["object"] == nil or body["type"] == nil
          - set(body, body["object"]) where body["object"] != nil and body["type"] != nil
          - set(attributes["observe_transform"]["valid_from"], observed_time_unix_nano)
          - set(attributes["observe_transform"]["valid_to"], Int(observed_time_unix_nano) + 5400000000000)
          - set(attributes["observe_transform"]["kind"], "Cluster")
          - set(attributes["observe_transform"]["control"]["isDelete"], false) where attributes["observe_transform"]["control"]["isDelete"] == nil
          - set(attributes["observe_transform"]["control"]["version"], body["metadata"]["resourceVersion"])
          - set(attributes["observe_transform"]["identifiers"]["clusterName"], resource.attributes["k8s.cluster.name"])
          - set(attributes["observe_transform"]["identifiers"]["clusterUid"], resource.attributes["k8s.cluster.uid"])
          - set(attributes["observe_transform"]["identifiers"]["uid"], resource.attributes["k8s.cluster.uid"])
          - set(attributes["observe_transform"]["identifiers"]["kind"], "Cluster")
          - set(attributes["observe_transform"]["identifiers"]["name"], resource.attributes["k8s.cluster.name"])
          - set(attributes["observe_transform"]["facets"]["creationTimestamp"], body["metadata"]["creationTimestamp"])
          - set(attributes["observe_transform"]["facets"]["labels"], body["metadata"]["labels"])
          - set(attributes["observe_transform"]["facets"]["annotations"], body["metadata"]["annotations"])
          - set(body, "")

  transform/object:
    error_mode: ignore
    log_statements:
      - context: log
        statements:
          - set(attributes["observe_filter"], "objects_pull_watch")
          - set(attributes["observe_transform"]["valid_from"], observed_time_unix_nano)
          - set(attributes["observe_transform"]["valid_to"], Int(observed_time_unix_nano) + 5400000000000)
          - set(attributes["observe_transform"]["kind"], body["kind"])
          - set(attributes["observe_transform"]["control"]["isDelete"], false) where attributes["observe_transform"]["control"]["isDelete"] == nil
          - set(attributes["observe_transform"]["control"]["version"], body["metadata"]["resourceVersion"])
          - set(attributes["observe_transform"]["control"]["agentVersion"], "2.5.1-7b74e914-nightly")
          - set(attributes["observe_transform"]["identifiers"]["clusterName"], resource.attributes["k8s.cluster.name"])
          - set(attributes["observe_transform"]["identifiers"]["clusterUid"], resource.attributes["k8s.cluster.uid"])
          - set(attributes["observe_transform"]["identifiers"]["kind"], body["kind"])
          - set(attributes["observe_transform"]["identifiers"]["name"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["namespaceName"], body["metadata"]["namespace"])
          - set(attributes["observe_transform"]["identifiers"]["uid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["creationTimestamp"], body["metadata"]["creationTimestamp"])
          - set(attributes["observe_transform"]["facets"]["deletionTimestamp"], body["metadata"]["deletionTimestamp"])
          - set(attributes["observe_transform"]["facets"]["ownerRefKind"], body["metadata"]["ownerReferences"][0]["kind"])
          - set(attributes["observe_transform"]["facets"]["ownerRefName"], body["metadata"]["ownerReferences"][0]["name"])
          - set(attributes["observe_transform"]["facets"]["labels"], body["metadata"]["labels"])
          - set(attributes["observe_transform"]["facets"]["annotations"], body["metadata"]["annotations"])
          - set(attributes["observe_transform"]["facets"]["replicaSetName"], body["metadata"]["ownerReferences"][0]["name"]) where body["metadata"]["ownerReferences"][0]["kind"] == "ReplicaSet"
          - set(attributes["observe_transform"]["facets"]["daemonSetName"], body["metadata"]["ownerReferences"][0]["name"]) where body["metadata"]["ownerReferences"][0]["kind"] == "DaemonSet"
          - set(attributes["observe_transform"]["facets"]["jobName"], body["metadata"]["ownerReferences"][0]["name"]) where body["metadata"]["ownerReferences"][0]["kind"] == "Job"
          - set(attributes["observe_transform"]["facets"]["cronJobName"], attributes["observe_transform"]["facets"]["jobName"]) where IsMatch(attributes["observe_transform"]["facets"]["jobName"], ".*-\\d{8}$")
          - replace_pattern(attributes["observe_transform"]["facets"]["cronJobName"], "-\\d{8}$$", "")
          - set(attributes["observe_transform"]["facets"]["statefulSetName"], body["metadata"]["ownerReferences"][0]["name"]) where body["metadata"]["ownerReferences"][0]["kind"] == "StatefulSet"
          - set(attributes["observe_transform"]["facets"]["deploymentName"], body["metadata"]["ownerReferences"][0]["name"]) where body["metadata"]["ownerReferences"][0]["kind"] == "ReplicaSet"
          - replace_pattern(attributes["observe_transform"]["facets"]["deploymentName"], "^(.*)-[0-9a-f]+$$", "$$1")
          - set(attributes["observe_transform"]["facets"]["deploymentName"], body["metadata"]["ownerReferences"][0]["name"]) where body["metadata"]["ownerReferences"][0]["kind"] == "Deployment"

      - conditions:
          - body["kind"] == "Pod"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["podName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["podUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["phase"], body["status"]["phase"])
          - set(attributes["observe_transform"]["facets"]["podIP"], body["status"]["podIP"])
          - set(attributes["observe_transform"]["facets"]["qosClass"], body["status"]["qosClass"])
          - set(attributes["observe_transform"]["facets"]["startTime"], body["status"]["startTime"])
          - set(attributes["observe_transform"]["facets"]["readinessGates"], body["object"]["spec"]["readinessGates"])
          - set(attributes["observe_transform"]["facets"]["nodeName"], body["spec"]["nodeName"])
      - conditions:
          - body["kind"] == "Namespace"
        context: log
        statements:
          - set(attributes["observe_transform"]["facets"]["status"], body["status"]["phase"])
          - set(attributes["observe_transform"]["identifiers"]["namespaceName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["namespaceUid"], body["metadata"]["uid"])
      - conditions:
          - body["kind"] == "Node"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["nodeName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["nodeUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["kernelVersion"], body["status"]["nodeInfo"]["kernelVersion"])
          - set(attributes["observe_transform"]["facets"]["kubeProxyVersion"], body["status"]["nodeInfo"]["kubeProxyVersion"])
          - set(attributes["observe_transform"]["facets"]["kubeletVersion"], body["status"]["nodeInfo"]["kubeletVersion"])
          - set(attributes["observe_transform"]["facets"]["osImage"], body["status"]["nodeInfo"]["osImage"])
          - set(attributes["observe_transform"]["facets"]["taints"], body["spec"]["taints"])
      - conditions:
          - body["kind"] == "Deployment"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["deploymentName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["deploymentUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["updatedReplicas"], body["status"]["updatedReplicas"])
          - set(attributes["observe_transform"]["facets"]["availableReplicas"], body["status"]["availableReplicas"])
          - set(attributes["observe_transform"]["facets"]["readyReplicas"], body["status"]["readyReplicas"])
          - set(attributes["observe_transform"]["facets"]["readyReplicas"], 0) where attributes["observe_transform"]["facets"]["readyReplicas"] == nil
          - set(attributes["observe_transform"]["facets"]["unavailableReplicas"], body["status"]["unavailableReplicas"])
          - set(attributes["observe_transform"]["facets"]["selector"], body["spec"]["selector"]["matchLabels"])
          - set(attributes["observe_transform"]["facets"]["desiredReplicas"], body["spec"]["replicas"])
          - set(attributes["observe_transform"]["facets"]["status"], "Healthy")
          - set(attributes["observe_transform"]["facets"]["status"], "Unhealthy") where attributes["observe_transform"]["facets"]["readyReplicas"] != attributes["observe_transform"]["facets"]["desiredReplicas"]
      - conditions:
          - body["kind"] == "ReplicaSet"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["replicaSetName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["replicaSetUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["desiredReplicas"], body["spec"]["replicas"])
          - set(attributes["observe_transform"]["facets"]["currentReplicas"], body["status"]["replicas"])
          - set(attributes["observe_transform"]["facets"]["availableReplicas"], body["status"]["availableReplicas"])
          - set(attributes["observe_transform"]["facets"]["readyReplicas"], body["status"]["readyReplicas"])
          - set(attributes["observe_transform"]["facets"]["readyReplicas"], 0) where attributes["observe_transform"]["facets"]["readyReplicas"] == nil
          - set(attributes["observe_transform"]["facets"]["status"], "Healthy")
          - set(attributes["observe_transform"]["facets"]["status"], "Unhealthy") where attributes["observe_transform"]["facets"]["readyReplicas"] != attributes["observe_transform"]["facets"]["desiredReplicas"]
      - conditions:
          - body["kind"] == "Event"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["involvedObjectKind"], body["involvedObject"]["kind"])
          - set(attributes["observe_transform"]["identifiers"]["involvedObjectName"], body["involvedObject"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["involvedObjectUid"], body["involvedObject"]["uid"])
          - set(attributes["observe_transform"]["facets"]["firstTimestamp"], body["firstTimestamp"])
          - set(attributes["observe_transform"]["facets"]["lastTimestamp"], body["lastTimestamp"])
          - set(attributes["observe_transform"]["facets"]["message"], body["message"])
          - set(attributes["observe_transform"]["facets"]["reason"], body["reason"])
          - set(attributes["observe_transform"]["facets"]["count"], body["count"])
          - set(attributes["observe_transform"]["facets"]["type"], body["type"])
          - set(attributes["observe_transform"]["facets"]["sourceComponent"], body["source"]["component"])

      - conditions:
          - body["kind"] == "Job"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["jobName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["jobUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["cronJobName"], body["metadata"]["ownerReferences"][0]["name"]) where body["metadata"]["ownerReferences"][0]["kind"] == "CronJob"
          - set(attributes["observe_transform"]["facets"]["cronJobUid"], body["metadata"]["ownerReferences"][0]["uid"]) where body["metadata"]["ownerReferences"][0]["kind"] == "CronJob"
          - set(attributes["observe_transform"]["facets"]["completions"], body["spec"]["completions"])
          - set(attributes["observe_transform"]["facets"]["parallelism"], body["spec"]["parallelism"])
          - set(attributes["observe_transform"]["facets"]["activeDeadlineSeconds"], body["spec"]["activeDeadlineSeconds"])
          - set(attributes["observe_transform"]["facets"]["backoffLimit"], body["spec"]["backoffLimit"])
          - set(attributes["observe_transform"]["facets"]["startTime"], body["status"]["startTime"])
          - set(attributes["observe_transform"]["facets"]["activePods"], body["status"]["active"])
          # Kubernetes reports failed pods under status.failed
          - set(attributes["observe_transform"]["facets"]["failedPods"], body["status"]["failed"])
          - set(attributes["observe_transform"]["facets"]["succeededPods"], body["status"]["succeeded"])
          - set(attributes["observe_transform"]["facets"]["readyPods"], body["status"]["ready"])

      - conditions:
          - body["kind"] == "CronJob"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["cronJobName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["cronJobUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["schedule"], body["spec"]["schedule"])
          - set(attributes["observe_transform"]["facets"]["suspend"], "Active") where body["spec"]["suspend"] == false
          - set(attributes["observe_transform"]["facets"]["suspend"], "Suspend") where body["spec"]["suspend"] == true

      - conditions:
          - body["kind"] == "DaemonSet"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["daemonSetName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["daemonSetUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["updateStrategy"], body["spec"]["updateStrategy"]["type"])
          - set(attributes["observe_transform"]["facets"]["maxUnavailable"], body["spec"]["updateStrategy"]["rollingUpdate"]["maxUnavailable"])
          - set(attributes["observe_transform"]["facets"]["maxSurge"], body["spec"]["updateStrategy"]["rollingUpdate"]["maxSurge"])
          - set(attributes["observe_transform"]["facets"]["numberReady"], body["status"]["numberReady"])
          - set(attributes["observe_transform"]["facets"]["desiredNumberScheduled"], body["status"]["desiredNumberScheduled"])
          - set(attributes["observe_transform"]["facets"]["currentNumberScheduled"], body["status"]["currentNumberScheduled"])
          - set(attributes["observe_transform"]["facets"]["updatedNumberScheduled"], body["status"]["updatedNumberScheduled"])
          - set(attributes["observe_transform"]["facets"]["numberAvailable"], body["status"]["numberAvailable"])
          - set(attributes["observe_transform"]["facets"]["numberUnavailable"], body["status"]["numberUnavailable"])
          - set(attributes["observe_transform"]["facets"]["numberMisscheduled"], body["status"]["numberMisscheduled"])
          - set(attributes["observe_transform"]["facets"]["status"], "Healthy")
          - set(attributes["observe_transform"]["facets"]["status"], "Unhealthy") where attributes["observe_transform"]["facets"]["numberReady"] != attributes["observe_transform"]["facets"]["desiredNumberScheduled"]

      - conditions:
          - body["kind"] == "StatefulSet"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["statefulSetName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["statefulSetUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["serviceName"], body["spec"]["serviceName"])
          - set(attributes["observe_transform"]["facets"]["podManagementPolicy"], body["spec"]["podManagementPolicy"])
          - set(attributes["observe_transform"]["facets"]["desiredReplicas"], body["spec"]["replicas"])
          - set(attributes["observe_transform"]["facets"]["updateStrategy"], body["spec"]["updateStrategy"]["type"])
          - set(attributes["observe_transform"]["facets"]["partition"], body["spec"]["updateStrategy"]["rollingUpdate"]["partition"])
          - set(attributes["observe_transform"]["facets"]["currentReplicas"], body["status"]["currentReplicas"])
          - set(attributes["observe_transform"]["facets"]["readyReplicas"], body["status"]["readyReplicas"])
          - set(attributes["observe_transform"]["facets"]["status"], "Healthy")
          - set(attributes["observe_transform"]["facets"]["status"], "Unhealthy") where attributes["observe_transform"]["facets"]["readyReplicas"] != attributes["observe_transform"]["facets"]["desiredReplicas"]

      - conditions:
          - body["kind"] == "ConfigMap"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["configMapName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["configMapUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["immutable"], body["immutable"])

      - conditions:
          - body["kind"] == "Secret"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["secretName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["secretUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["immutable"], body["immutable"])
          - set(attributes["observe_transform"]["facets"]["data"], body["data"])
          - set(attributes["observe_transform"]["facets"]["type"], body["type"])

      - conditions:
          - body["kind"] == "PersistentVolume"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["persistentVolumeName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["persistentVolumeUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["capacity"], body["spec"]["capacity"]["storage"])
          - set(attributes["observe_transform"]["facets"]["reclaimPolicy"], body["spec"]["persistentVolumeReclaimPolicy"])
          - set(attributes["observe_transform"]["facets"]["class"], body["spec"]["storageClassName"])
          - set(attributes["observe_transform"]["facets"]["accessModes"], body["spec"]["accessModes"])
          - set(attributes["observe_transform"]["facets"]["volumeMode"], body["spec"]["volumeMode"])
          - set(attributes["observe_transform"]["facets"]["phase"], body["status"]["phase"])

      - conditions:
          - body["kind"] == "PersistentVolumeClaim"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["persistentVolumeClaimName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["persistentVolumeClaimUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["capacityRequests"], body["spec"]["resources"]["requests"]["storage"])
          - set(attributes["observe_transform"]["facets"]["capacityLimits"], body["spec"]["resources"]["limits"]["storage"])
          - set(attributes["observe_transform"]["facets"]["class"], body["spec"]["storageClassName"])
          - set(attributes["observe_transform"]["facets"]["volumeName"], body["spec"]["volumeName"])
          - set(attributes["observe_transform"]["facets"]["desiredAccessModes"], body["spec"]["accessModes"])
          - set(attributes["observe_transform"]["facets"]["volumeMode"], body["spec"]["volumeMode"])
          - set(attributes["observe_transform"]["facets"]["capacity"], body["status"]["capacity"]["storage"])
          - set(attributes["observe_transform"]["facets"]["phase"], body["status"]["phase"])

          - set(attributes["observe_transform"]["facets"]["currentAccessModes"], body["status"]["accessModes"])

      - conditions:
          - body["kind"] == "Service"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["serviceName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["serviceUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["serviceType"], body["spec"]["type"])
          - set(attributes["observe_transform"]["facets"]["clusterIP"], body["spec"]["clusterIP"])
          - set(attributes["observe_transform"]["facets"]["externalIPs"], body["spec"]["externalIPs"])
          - set(attributes["observe_transform"]["facets"]["sessionAffinity"], body["spec"]["sessionAffinity"])
          - set(attributes["observe_transform"]["facets"]["externalName"], body["spec"]["externalName"])
          - set(attributes["observe_transform"]["facets"]["externalTrafficPolicy"], body["spec"]["externalTrafficPolicy"])
          - set(attributes["observe_transform"]["facets"]["ipFamilies"], "IPv4,IPv6") where Len(body["spec"]["ipFamilies"]) == 2
          - set(attributes["observe_transform"]["facets"]["ipFamilies"], body["spec"]["ipFamilies"][0]) where Len(body["spec"]["ipFamilies"]) == 1

      - conditions:
          - body["kind"] == "Ingress"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["ingressName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["ingressUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["class"], body["spec"]["ingressClassName"])
          - set(attributes["observe_transform"]["facets"]["class"], body["metadata"]["annotations"]["kubernetes.io/ingress.class"]) where attributes["observe_transform"]["facets"]["class"] == nil

      - conditions:
          - body["kind"] == "RoleBinding"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["roleBindingName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["roleBindingUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["subjects"], body["subjects"])
          - set(attributes["observe_transform"]["facets"]["role"], body["roleRef"]["name"])

      - conditions:
          - body["kind"] == "Role"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["roleName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["roleUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["rules"], body["rules"])
          - set(attributes["observe_transform"]["facets"]["role"], body["roleRef"]["name"])

      - conditions:
          - body["kind"] == "ClusterRoleBinding"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["clusterRoleBindingName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["clusterRoleBindingUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["subjects"], body["subjects"])
          - set(attributes["observe_transform"]["facets"]["role"], body["roleRef"]["name"])

      - conditions:
          - body["kind"] == "ServiceAccount"
        context: log
        statements:
          - set(attributes["observe_transform"]["identifiers"]["serviceAccountName"], body["metadata"]["name"])
          - set(attributes["observe_transform"]["identifiers"]["serviceAccountUid"], body["metadata"]["uid"])
          - set(attributes["observe_transform"]["facets"]["automountToken"], body["automountServiceAccountToken"])

  transform/unify:
    error_mode: ignore
    log_statements:
      - context: log
        statements:
          - set(attributes["observe_transform"]["control"]["isDelete"], true) where body["object"] != nil and body["type"] == "DELETED"
          - set(attributes["observe_transform"]["control"]["debug_source"], "watch") where body["object"] != nil and body["type"] != nil
          - set(attributes["observe_transform"]["control"]["debug_source"], "pull") where body["object"] == nil or body["type"] == nil
          - set(body, body["object"]) where body["object"] != nil and body["type"] != nil

receivers:
  k8sobjects/cluster:
    auth_type: serviceAccount
    objects:
      - interval: 15m
        mode: pull
        name: namespaces
  k8sobjects/objects:
    auth_type: serviceAccount
    objects:
      - interval: 15m
        mode: pull
        name: events
      - mode: watch
        name: events
      - interval: 15m
        mode: pull
        name: pods
      - mode: watch
        name: pods
      - interval: 15m
        mode: pull
        name: namespaces
      - mode: watch
        name: namespaces
      - interval: 15m
        mode: pull
        name: nodes
      - mode: watch
        name: nodes
      - interval: 15m
        mode: pull
        name: deployments
      - mode: watch
        name: deployments
      - interval: 15m
        mode: pull
        name: replicasets
      - mode: watch
        name: replicasets
      - interval: 15m
        mode: pull
        name: configmaps
      - mode: watch
        name: configmaps
      - interval: 15m
        mode: pull
        name: endpoints
      - mode: watch
        name: endpoints
      - interval: 15m
        mode: pull
        name: jobs
      - mode: watch
        name: jobs
      - interval: 15m
        mode: pull
        name: cronjobs
      - mode: watch
        name: cronjobs
      - interval: 15m
        mode: pull
        name: daemonsets
      - mode: watch
        name: daemonsets
      - interval: 15m
        mode: pull
        name: statefulsets
      - mode: watch
        name: statefulsets
      - interval: 15m
        mode: pull
        name: services
      - mode: watch
        name: services
      - interval: 15m
        mode: pull
        name: ingresses
      - mode: watch
        name: ingresses
      - interval: 15m
        mode: pull
        name: secrets
      - mode: watch
        name: secrets
      - interval: 15m
        mode: pull
        name: persistentvolumeclaims
      - mode: watch
        name: persistentvolumeclaims
      - interval: 15m
        mode: pull
        name: persistentvolumes
      - mode: watch
        name: persistentvolumes
      - interval: 15m
        mode: pull
        name: storageclasses
      - mode: watch
        name: storageclasses
      - interval: 15m
        mode: pull
        name: roles
      - mode: watch
        name: roles
      - interval: 15m
        mode: pull
        name: rolebindings
      - mode: watch
        name: rolebindings
      - interval: 15m
        mode: pull
        name: clusterroles
      - mode: watch
        name: clusterroles
      - interval: 15m
        mode: pull
        name: clusterrolebindings
      - mode: watch
        name: clusterrolebindings
      - interval: 15m
        mode: pull
        name: serviceaccounts
      - mode: watch
        name: serviceaccounts

exporters:
  otlphttp/observe/entity:
    compression: zstd
    # specify fully custom log endpoint
    logs_endpoint: $$OBSERVE_OTEL_ENDPOINT/v1/kubernetes/v1/entity # e.g. https://123456.collect.observeinc.com/v1/kubernetes/v1/entity
    headers:
      authorization: Bearer ${env:OBSERVE_TOKEN} # embed directly if not in env
      x-observe-target-package: Kubernetes Explorer
    retry_on_failure:
      enabled: true
      initial_interval: 1s
      max_elapsed_time: 5m
      max_interval: 30s
    sending_queue:
      enabled: true

service:
  pipelines:
    logs/cluster:
      exporters:
        - otlphttp/observe/entity
      processors:
        - memory_limiter
        - batch
        - resource/observe_common
        - filter/cluster
        - transform/cluster
      receivers:
        - k8sobjects/cluster
    logs/objects:
      exporters:
        - otlphttp/observe/entity
      processors:
        - memory_limiter
        - batch
        - resource/observe_common
        - transform/unify
        - observek8sattributes
        - transform/object
      receivers:
        - k8sobjects/objects
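
The `k8sobjects` receivers in this configuration use `auth_type: serviceAccount` in both `pull` and `watch` mode, so the collector's service account needs `get`, `list`, and `watch` permissions on every object type listed. The following is a minimal sketch of RBAC that would grant this, not a manifest taken from Observe. The names `otel-collector-k8sobjects` and `otel-collector` and the namespace `observability` are assumptions for illustration; the API groups are the standard Kubernetes groups for these resources.

```yaml
# Sketch only: all names and the namespace below are hypothetical placeholders.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: otel-collector-k8sobjects   # assumed name
rules:
  # Core API group resources read by k8sobjects/cluster and k8sobjects/objects
  - apiGroups: [""]
    resources:
      - events
      - pods
      - namespaces
      - nodes
      - configmaps
      - endpoints
      - services
      - secrets
      - persistentvolumeclaims
      - persistentvolumes
      - serviceaccounts
    verbs: ["get", "list", "watch"]
  # Events may also be served from the events.k8s.io group
  - apiGroups: ["events.k8s.io"]
    resources: ["events"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["apps"]
    resources: ["deployments", "replicasets", "daemonsets", "statefulsets"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["batch"]
    resources: ["jobs", "cronjobs"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["networking.k8s.io"]
    resources: ["ingresses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["rbac.authorization.k8s.io"]
    resources: ["roles", "rolebindings", "clusterroles", "clusterrolebindings"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: otel-collector-k8sobjects   # assumed name
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: otel-collector-k8sobjects
subjects:
  - kind: ServiceAccount
    name: otel-collector            # assumed: the service account your collector pod runs as
    namespace: observability        # assumed namespace
```

Read access to `secrets` exposes sensitive values, and the `Secret` transform above copies `body["data"]` into a facet. If that is not acceptable in your environment, consider removing `secrets` from both the receiver and the role.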

Other setups¶

The Observe Agent setup instructions for Amazon ECS can be used as a guide for particular setups:

Full examples¶

See the complete examples provided in the following links: