Amazon Web Services (AWS) Integration [Legacy]¶

The Observe AWS Integration streamlines the process of collecting data from AWS. Ingest logs and metrics from several common AWS services at once, then ingest data from additional services by sending it to the forwarders set up by the AWS Integration.

The AWS Integration works with the datasets in your workspace. Contact Observe for assistance with creating datasets and modeling the relationships between them. Observe can automate many common data modeling tasks for you, ensuring an accurate picture of your infrastructure. Observe can also update your workspace with improved and new datasets when new functionality releases for this integration.

If you currently ingest AWS data, consult with your Observe Data Engineer to see if the AWS Integration could enhance your existing data collection strategy.

What data does Observe ingest?¶

Standard Ingest Sources¶

The AWS Integration automatically ingests the following types of data from a single region:

CloudTrail logs of AWS API calls

CloudWatch Metrics streams with metrics for services you use

EventBridge state change events from applications and services

In addition, if installed in us-east-1, Observe also ingests Amazon CloudFront data for all regions:

CloudFront requests, errors, and (optional) real-time access logs for CloudFront Distributions.

For more about configuring CloudFront ingestion, see Amazon CloudFront.

To ingest this data, the AWS Integration creates several forwarding paths:

These forwarders work in a single region, as many AWS services are specific to a particular region. For information about multi-region collection, see Collecting data from multiple regions? in the FAQ.

Additional Ingest Sources¶

With these previous sources configured and working, add additional services by configuring them to write to the bucket or send logs to one of the forwarders. Details for common services may be found in Observe documentation:

API Gateway execution and access logs from your REST API

CloudWatch logs from EC2, Route53, and other services

S3 access logs for requests to S3 buckets

Supported Amazon and AWS Services¶

| Category | Services | Category | Services |
|---|---|---|---|
| Analytics | | Management and Governance | |
| App Integration | | Networking and Content Delivery | |
| Compute | | Security, Identity and Compliance | |
| Containers | | Serverless | |
| Database | | Storage | |

Using AWS Integration data¶

After shaping, the incoming data populates datasets such as these:

CloudWatch Log Group - Application errors, log event detail

IAM

IAM Group - The IAM groups that access resources

IAM Policy - Policies in use, the descriptions and contents

IAM Role - Compare role permissions over time

IAM User - Most active users

EC2

EC2 EBS Volume - Volumes in use, size, usage, and performance metrics

EC2 Instance - What instances are in which VPCs, instance type, IP address

EC2 Network Interface - Associated instance, type, DNS name

EC2 Subnet - CIDR block, number of addresses available

EC2 VPC - Account and region, by default

Account - View resources by account.

Lambda Function - Active functions, associated Log Group, invocation metrics

S3 Bucket - Buckets by account and region

CloudFront Distribution - Requests, errors, and other details about Distributions

Setup¶

Installation¶

You can use the AWS app located on the Apps page to install AWS integrations. Choose whether to create the connection using CloudFormation or Terraform. When you click Create connection, you then create a token to use with the data stream.

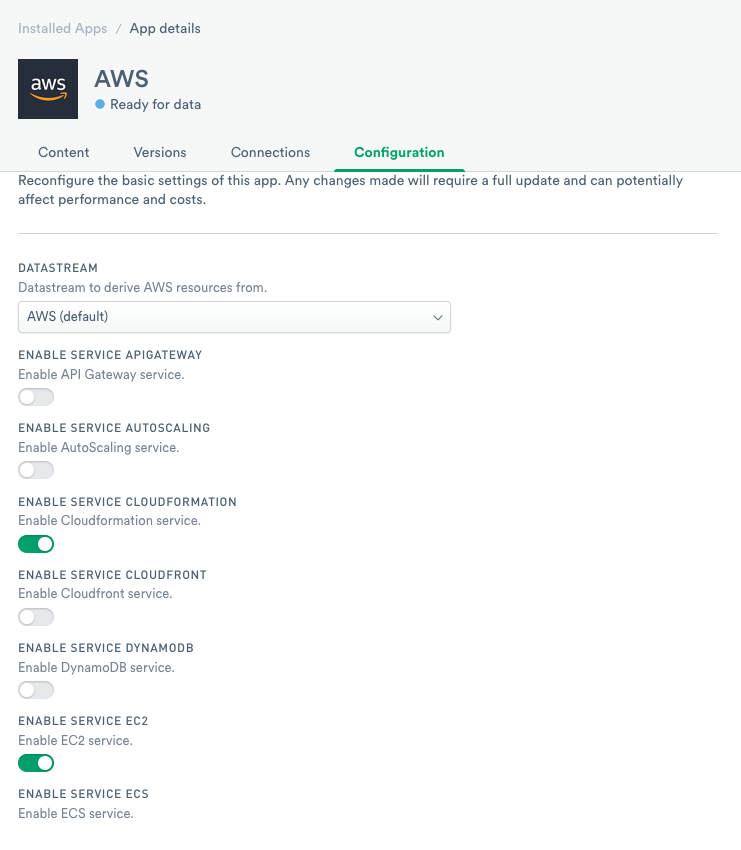

Before you create the connection, click Configuration and select the type of AWS integration from the extensive list.

Figure 1 - List of available AWS integrations

After you select the integration, follow the instructions to install the app. You must have an AWS account to install the app and the integrations.

As part of the installation, you create a Connection, also called a Token. Use the following steps to create the connection:

Enter a name for the connection in the Name field.

Add Description. (optional)

Click Continue.

Click Copy to clipboard and save your token in a safe location.

Select I confirm I have copied this new token and stored it somewhere safe for future use.

Click Continue.

Follow the Setup instructions to create a CloudFormation stack.

Click Close.

You can view your new connection by navigating to the Datastreams page of the Observe interface.

Use the Observe CloudFormation template to automate installing the AWS integration. To install using the AWS Console, follow these steps:

Navigate to the CloudFormation console and view existing stacks.

Click Create stack. If prompted, select With new resources.

Under Specify template, select Amazon S3 URL.

In the Amazon S3 URL field, enter the URL for the Observe Collection CloudFormation template:

https://observeinc.s3-us-west-2.amazonaws.com/cloudformation/collection-latest.yaml

We recommend you pin the template version to a tagged version of the Observe AWS collection template. To do this, replace latest in the template URL with the desired version tag:

https://observeinc.s3-us-west-2.amazonaws.com/cloudformation/collection-v0.2.0.yaml

For information about available versions, see the Observe collection CF template change log in GitHub.

Click Next to continue. You may be prompted to view the function in Designer.

Click Next again to skip.

In Stack name, provide a name for this stack. The name must be unique within a region and is used to name created resources.

Under Required Parameters, provide your Customer ID in ObserveCustomer and your ingest token in ObserveToken. For details on creating an ingest token for a datastream, see Data streams.

Click Next.

Under Configure stack options, there are no required options to configure. Click Next to continue.

Under Capabilities, check the box to acknowledge that this stack may create IAM resources.

Click Create stack


Alternatively, you can deploy the CloudFormation template using the awscli utility:

Caution

If you have multiple AWS profiles, make sure you configure the appropriate AWS_REGION and AWS_PROFILE environment variables in addition to OBSERVE_CUSTOMER and OBSERVE_TOKEN.

$ curl -Lo collection.yaml https://observeinc.s3-us-west-2.amazonaws.com/cloudformation/collection-latest.yaml

$ aws cloudformation deploy --template-file ./collection.yaml \

--stack-name ObserveLambda \

--capabilities CAPABILITY_NAMED_IAM \

--parameter-overrides ObserveCustomer="${OBSERVE_CUSTOMER?}" ObserveToken="${OBSERVE_TOKEN?}"
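The curl command above downloads the latest template. To pin a tagged version from the command line, the URL can be built from the version tag. This is a minimal sketch; the helper name collection_template_url is hypothetical and not part of any Observe tooling, and the URL pattern is taken from the console instructions above.

```bash
# Build the collection template URL for a version tag.
# With no argument, fall back to "latest".
# (collection_template_url is a hypothetical helper name.)
collection_template_url() {
  version="${1:-latest}"
  printf 'https://observeinc.s3-us-west-2.amazonaws.com/cloudformation/collection-%s.yaml\n' "$version"
}

# Example usage (not executed here):
#   curl -Lo collection.yaml "$(collection_template_url v0.2.0)"
```

Keeping the tag in one place makes it easier to upgrade deliberately instead of picking up template changes on every deploy.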

If you want to automatically subscribe to existing and future CloudWatch Log Groups, see How do I send CloudWatch Log Groups to Observe? in the FAQ.

You can use our Terraform module to install the AWS integration and create the needed Kinesis Firehose delivery stream. The following is an example instantiation of this module:

module "observe_collection" {

source = "github.com/observeinc/terraform-aws-collection"

observe_customer = "${OBSERVE_CUSTOMER}"

observe_token = "${OBSERVE_TOKEN}"

}

Observe recommends that you pin the module version to the latest tagged version.

Uninstalling the AWS app¶

To uninstall the AWS app from your Observe workspace, follow the uninstall instructions on the Apps page.

To uninstall the Observe AWS collection stack using the AWS Console, follow these steps:

Navigate to the CloudFormation console and find the previously created Stack name.

Select the Stack name and click Delete. Then click Delete stack to delete the Observe Collections stack.

Alternatively, you can delete the CloudFormation stack using the awscli utility:

Caution

If you have multiple AWS profiles, make sure you configure the appropriate AWS_REGION and AWS_PROFILE environment variables.

$ aws cloudformation delete-stack --stack-name my-stack

Note that the relevant S3 buckets created by the stack are not deleted if the buckets contain data. If necessary, the buckets can be manually deleted by following bucket deletion instructions.

Remove the terraform-aws-collection module by running the following in the root directory:

$ terraform destroy

Note that the relevant S3 buckets are not deleted if the buckets contain data. If necessary, these can be manually deleted by following bucket deletion instructions.

FAQ¶

What if the AWS integration fails?¶

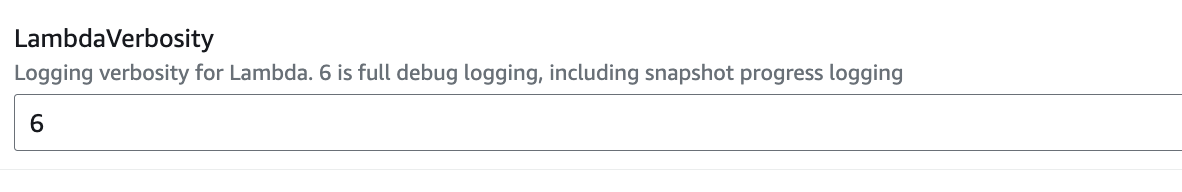

The AWS integration may fail in some instances, most commonly because of a problem with the Observe Lambda forwarder. To understand why the Lambda forwarder fails, increase the Lambda logging verbosity to 6.

Set LambdaVerbosity to 6 while creating the AWS CloudFormation stack:

Figure 5 - Setting Lambda Verbosity to 6 in the AWS Console

Override LambdaVerbosity in your cloudformation deploy command to 6:

curl -Lo collection.yaml https://observeinc.s3-us-west-2.amazonaws.com/cloudformation/collection-latest.yaml

aws cloudformation deploy --template-file ./collection.yaml \

--stack-name ObserveLambda \

--capabilities CAPABILITY_NAMED_IAM \

--parameter-overrides ObserveCustomer="${OBSERVE_CUSTOMER?}" ObserveToken="${OBSERVE_TOKEN?}" LambdaVerbosity=6

Override VERBOSITY in the observe_collection module:

module "observe_collection" {

source = "github.com/observeinc/terraform-aws-collection"

observe_customer = "${OBSERVE_CUSTOMER}"

observe_token = "${OBSERVE_TOKEN}"

lambda_envvars = { VERBOSITY = 6 }

}

You can find helpful logs under the /aws/lambda/<stack-name> log group (for example, /aws/lambda/ObserveCollection) in CloudWatch Logs.
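To read those logs from a terminal, the log group name can be derived from the stack name and passed to the AWS CLI. This is a sketch only; the function names are hypothetical, and tail_forwarder_logs assumes AWS CLI v2 (which provides aws logs tail) and valid credentials.

```bash
# Derive the forwarder's log group name from the stack name,
# following the /aws/lambda/<stack-name> convention described above.
lambda_log_group() {
  printf '/aws/lambda/%s\n' "$1"
}

# Stream the last hour of forwarder logs and keep following new events.
# Assumes AWS CLI v2 and that AWS_REGION / AWS_PROFILE are set appropriately.
tail_forwarder_logs() {
  aws logs tail "$(lambda_log_group "$1")" --since 1h --follow
}

# Example usage (not executed here):
#   tail_forwarder_logs ObserveLambda
```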

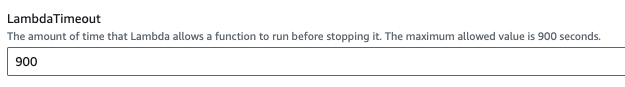

What if snapshot creation times out?¶

Lambda could time out while creating snapshots for various AWS services. You can increase LambdaTimeout to 900 or remove a problem AWS service from snapshot creation.

Setting the LambdaTimeout to 900

Set LambdaTimeout to 900 while creating the AWS CloudFormation stack:

Figure 5 - Setting LambdaTimeout to 900 in the AWS Console

Override LambdaTimeout in your cloudformation deploy command to 900:

curl -Lo collection.yaml https://observeinc.s3-us-west-2.amazonaws.com/cloudformation/collection-latest.yaml

aws cloudformation deploy --template-file ./collection.yaml \

--stack-name ObserveLambda \

--capabilities CAPABILITY_NAMED_IAM \

--parameter-overrides ObserveCustomer="${OBSERVE_CUSTOMER?}" ObserveToken="${OBSERVE_TOKEN?}" LambdaTimeout=900

Override `lambda_timeout` in the `observe_collection` module:

module "observe_collection" {

source = "github.com/observeinc/terraform-aws-collection"

observe_customer = "${OBSERVE_CUSTOMER}"

observe_token = "${OBSERVE_TOKEN}"

lambda_timeout = 900

}

Removing a problem AWS service from the snapshot creation

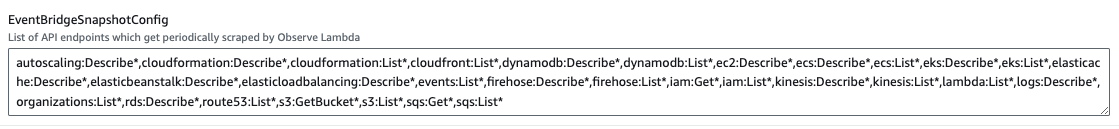

The following examples show how to remove AWS SNS from the snapshot creation.

If you have too many SNS topics, you can remove sns:Get* and sns:List* from EventBridgeSnapshotConfig.

Figure 6 - Removing sns:Get* and sns:List* from EventBridgeSnapshotConfig

Override EventBridgeSnapshotConfig in your cloudformation deploy command, omitting sns:Get* and sns:List*:

curl -Lo collection.yaml https://observeinc.s3-us-west-2.amazonaws.com/cloudformation/collection-latest.yaml

aws cloudformation deploy --template-file ./collection.yaml \

--stack-name ObserveLambda \

--capabilities CAPABILITY_NAMED_IAM \

--parameter-overrides ObserveCustomer="${OBSERVE_CUSTOMER?}" ObserveToken="${OBSERVE_TOKEN?}" \

EventBridgeSnapshotConfig="autoscaling:Describe*,cloudformation:Describe*,cloudformation:List*,cloudfront:List*,dynamodb:Describe*,dynamodb:List*,ec2:Describe*,ecs:Describe*,ecs:List*,eks:Describe*,eks:List*,elasticache:Describe*,elasticbeanstalk:Describe*,elasticloadbalancing:Describe*,events:List*,firehose:Describe*,firehose:List*,iam:Get*,iam:List*,kinesis:Describe*,kinesis:List*,lambda:List*,logs:Describe*,organizations:List*,rds:Describe*,route53:List*,s3:GetBucket*,s3:List*,sqs:Get*,sqs:List*"

Set snapshot_exclude in the observe_collection module:

module "observe_collection" {

source = "github.com/observeinc/terraform-aws-collection"

observe_customer = ""

observe_token = ""

snapshot_exclude = ["sns:Get*","sns:List*"]

}
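For the CloudFormation command above, the long EventBridgeSnapshotConfig value does not have to be edited by hand. The sketch below filters one service out of a comma-separated list; exclude_service and DEFAULT_CONFIG are hypothetical names used only for illustration.

```bash
# Remove every action that belongs to one service (for example "sns")
# from a comma-separated snapshot config string.
# (exclude_service is a hypothetical helper name.)
exclude_service() {
  config="$1"
  service="$2"
  printf '%s\n' "$config" \
    | tr ',' '\n' \
    | grep -v "^${service}:" \
    | paste -sd ',' -
}

# Example usage (not executed here), where DEFAULT_CONFIG holds the
# default list shown in the permissions section of this page:
#   EventBridgeSnapshotConfig="$(exclude_service "$DEFAULT_CONFIG" sns)"
```

Filtering by service prefix keeps the remaining entries in their original order, so the result is easy to compare with the default list.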

What configuration variables are available?¶

Documentation for configuration variables can be found here:

Where are the integration’s forwarders located?¶

The AWS Integration creates all resources in the region where you install the AWS Integration, such as us-east-1. They are named based on the CloudFormation stack name or Terraform module name you provided.

For example, a CloudFormation stack called Observe-AWS-Integration results in names such as these:

Lambda function: Observe-AWS-Integration

S3 bucket: observe-aws-integration-bucket-1a2b3c4d5e

Kinesis Firehose delivery stream: Observe-AWS-Integration-Delivery-Stream-1a2b3c4d5e

Note

To ensure the generated resources comply with AWS naming rules, your stack or module name should be no longer than 30 characters and contain only the following types of characters:

Letters (A-Z and a-z)

Numbers (0-9)

Hyphens (-)
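The naming rules in this note can be checked before deploying. This is a minimal sketch; is_valid_stack_name is a hypothetical helper, and it checks only the rules listed here, not every constraint AWS itself may apply.

```bash
# Return 0 if the name satisfies the rules in the note above:
# 1 to 30 characters, using only letters, digits, and hyphens.
# (is_valid_stack_name is a hypothetical helper name.)
is_valid_stack_name() {
  name="$1"
  # Reject empty names and names longer than 30 characters.
  [ -n "$name" ] && [ "${#name}" -le 30 ] || return 1
  # Reject names containing any character outside the allowed set.
  case "$name" in
    *[!A-Za-z0-9-]*) return 1 ;;
  esac
  return 0
}

# Example usage (not executed here):
#   is_valid_stack_name "Observe-AWS-Integration" || echo "invalid stack name"
```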

Collecting Data from Multiple Regions¶

The Observe AWS Integration operates on a per-region basis because some sources, such as CloudWatch metrics, are specific to a single region. For multiple regions, Observe recommends installing the integration in each region. You may do this with a CloudFormation StackSet, or by associating the Terraform module with your existing manifests.

For CloudFront, which is a global rather than region-specific service, install the AWS Integration in us-east-1.
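Where a StackSet is not available, a per-region install can also be scripted. The sketch below only prints one deploy command per region so the list can be reviewed first; print_region_deploys is a hypothetical helper, the region list is an example, and this loop is an illustration rather than the approach Observe documents.

```bash
# Print (dry run) one "aws cloudformation deploy" command per region.
# Arguments: stack name, then one or more region names.
# (print_region_deploys is a hypothetical helper name.)
print_region_deploys() {
  stack_name="$1"
  shift
  for region in "$@"; do
    printf 'aws cloudformation deploy --region %s --template-file ./collection.yaml --stack-name %s --capabilities CAPABILITY_NAMED_IAM --parameter-overrides ObserveCustomer="${OBSERVE_CUSTOMER?}" ObserveToken="${OBSERVE_TOKEN?}"\n' "$region" "$stack_name"
  done
}

# Example usage (not executed here):
#   print_region_deploys ObserveLambda us-east-1 us-west-2
```

Printing the commands instead of running them keeps the sketch safe to try; the output can be pasted into a shell once OBSERVE_CUSTOMER and OBSERVE_TOKEN are set.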

What permissions are required?¶

Installing the AWS integration requires allow permissions for the following actions on all resources (*):

cloudtrail:CreateTrail

cloudtrail:DeleteTrail

cloudtrail:PutEventSelectors

cloudtrail:StartLogging

cloudwatch:DeleteMetricStream

cloudwatch:GetMetricStream

cloudwatch:PutMetricStream

ec2:DescribeNetworkInterfaces

events:DeleteRule

events:DescribeRule

events:PutRule

events:PutTargets

events:RemoveTargets

firehose:CreateDeliveryStream

firehose:DeleteDeliveryStream

firehose:DescribeDeliveryStream

firehose:ListTagsForDeliveryStream

iam:AttachRolePolicy

iam:CreateRole

iam:DeleteRole

iam:DeleteRolePolicy

iam:DetachRolePolicy

iam:GetRole

iam:GetRolePolicy

iam:PassRole

iam:PutRolePolicy

lambda:AddPermission

lambda:CreateFunction

lambda:DeleteFunction

lambda:GetFunction

lambda:GetFunctionCodeSigningConfig

lambda:GetRuntimeManagementConfig

lambda:InvokeFunction

lambda:PutFunctionConcurrency

lambda:RemovePermission

logs:CreateLogGroup

logs:DeleteLogGroup

logs:DeleteSubscriptionFilter

logs:DescribeLogGroups

logs:DescribeSubscriptionFilters

logs:ListTagsLogGroup

logs:PutRetentionPolicy

logs:PutSubscriptionFilter

s3:CreateBucket

s3:DeleteBucketPolicy

s3:GetBucketPolicy

s3:GetObject

s3:PutBucketAcl

s3:PutBucketNotification

s3:PutBucketOwnershipControls

s3:PutBucketPolicy

s3:PutBucketPublicAccessBlock

s3:PutEncryptionConfiguration

s3:PutLifecycleConfiguration

The installation creates service roles for Lambda, Firehose, EventBridge, CloudWatch Logs, and MetricStream.

The Lambda Role has the following items:

The AWS-managed policies AWSLambdaBasicExecutionRole and AWSLambdaSQSQueueExecutionRole

A policy that allows it to query AWS APIs and collect resource records to send to Observe. The permissions for this policy are derived from the snapshot config and are always for all resources (*). The default is:

autoscaling:Describe*

cloudformation:Describe*

cloudformation:List*

cloudfront:List*

dynamodb:Describe*

dynamodb:List*

ec2:Describe*

ecs:Describe*

ecs:List*

eks:Describe*

eks:List*

elasticache:Describe*

elasticbeanstalk:Describe*

elasticloadbalancing:Describe*

events:List*

firehose:Describe*

firehose:List*

iam:Get*

iam:List*

kinesis:Describe*

kinesis:List*

lambda:List*

logs:Describe*

organizations:List*

rds:Describe*

route53:List*

s3:GetBucket*

s3:List*

sns:Get*

sns:List*

sqs:Get*

sqs:List*

A policy that allows it to read from the bucket created during install

s3:Get*

s3:List*

The Service Role for Firehose has the following items:

A policy that allows logs:CreateLogStream and logs:PutLogEvents on the log group created for it during installation.

A policy that allows the following actions on the bucket created during install:

s3:AbortMultipartUpload

s3:GetBucketLocation

s3:GetObject

s3:ListBucket

s3:ListBucketMultipartUploads

s3:PutObject

All have a policy that allows ‘firehose:PutRecord’ and ‘firehose:PutRecordBatch’ on the Firehose created during installation for delivering data to Observe.

How do I send CloudWatch Log Groups to Observe?¶

Subscribe all existing and future CloudWatch Log Groups to a delivery stream using the optional CloudFormation stack. You may do this at any time after installing the AWS integration. This stack subscribes Log Groups to the Kinesis Firehose delivery stream created for you by the AWS Integration.

Note

To subscribe to Log Groups using Terraform, specify the groups to subscribe in your observe_collection module configuration. For more information, see the Observe AWS Collection README in GitHub.

To subscribe CloudWatch Log Groups to your delivery stream:

Prerequisites¶

Before you begin, confirm you have the necessary prerequisites:

Complete your installation of the AWS Integration, if needed.

Locate the name of your AWS Integration CloudFormation stack. This is the name provided for Stack name when you installed the AWS Integration.

In the AWS console, go to CloudFormation to view your existing stacks.

Look for an Observe AWS Integration stack, such as Observe-AWS-Integration. Make a note of the name.

Deploy the subscribelogs CloudFormation Stack¶

Navigate to CloudFormation in the AWS console.

Click Create stack. If prompted, select With new resources.

Provide the template details:

Under Specify template, select Amazon S3 URL.

In the Amazon S3 URL field, enter the URL for the subscribelogs CloudFormation template:

https://observeinc.s3-us-west-2.amazonaws.com/cloudformation/subscribelogs-latest.yaml

Observe recommends you pin the template version to a tagged version of the subscribelogs template.

To do this, replace latest in the template URL with the desired version tag:

https://observeinc.s3-us-west-2.amazonaws.com/cloudformation/subscribelogs-v0.3.0.yaml

For information about available versions, see the Observe collection CF template change log in GitHub.

Click Next to continue. You may be prompted to view the function in Designer.

Click Next again to skip.

Specify the stack details:

In Stack name, provide a name for this stack. The name must be unique within a region and is used to name created resources.

Under Required Parameters, provide the name of your AWS Integration stack in CollectionStackName.

Configure optional parameters:

To restrict the automatically subscribed Log Groups, specify a regex pattern in LogGroupMatches. For example, /aws/rds/.* subscribes RDS Log Groups. LogGroupMatches accepts a comma-separated list of regex patterns, so multiple patterns can be specified.

Click Next, and then Next again. There are no required options under Configure stack options.

Review your stack options:

Under Capabilities, check the box to acknowledge that this stack may create IAM resources.

Click Create stack.

The stack automatically subscribes to any matching CloudWatch Log Groups, even if you create them later. If you delete the stack, AWS CloudFormation removes any created resources and unsubscribes from any Log Groups.
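Before deploying, it can help to preview which Log Groups a LogGroupMatches value would select. The sketch below is a local approximation only: preview_log_group_matches is a hypothetical helper, and it assumes each pattern must match the whole Log Group name, which may differ from how the subscribelogs template actually evaluates patterns.

```bash
# Read log group names on stdin (one per line) and print those matching
# at least one pattern in a comma-separated list.
# Assumption: whole-name matching (grep -x); the template may behave differently.
# (preview_log_group_matches is a hypothetical helper name.)
preview_log_group_matches() {
  # Turn "p1,p2" into the extended regex "(p1)|(p2)".
  alternation="($(printf '%s' "$1" | sed 's/,/)|(/g'))"
  grep -E -x -- "$alternation"
}

# Example usage (not executed here):
#   aws logs describe-log-groups --query 'logGroups[].logGroupName' --output text \
#     | tr '\t' '\n' \
#     | preview_log_group_matches '/aws/rds/.*,/aws/eks/.*'
```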

Note

A CloudWatch Log Group may be subscribed to a maximum of two delivery streams. If your desired Log Group is already subscribed to other delivery streams, you may remove one and then add it to your AWS Integration delivery stream by following the directions at Amazon CloudWatch Logs.

How do I get Canary artifacts generated by Canary Runs?¶

Each Canary run generates an artifact, a JSON file written to an S3 bucket. Create a bucket Event notification to tell the Observe Lambda function to send this file to Observe.

Specify the destination bucket when creating a Canary. If you do not provide a destination bucket, AWS creates one for you.

Prerequisites¶

The following information is required to configure an Event notification for Canary artifacts:

The name of your Observe Lambda forwarder function:

Complete your installation of the AWS Integration, if needed.

Locate the name of your AWS Integration CloudFormation stack. This is the name provided for Stack name when you installed the AWS Integration.

In the AWS console, go to CloudFormation to view your existing stacks.

Look for an Observe AWS Integration stack, such as Observe-AWS-Integration, and click on it.

Click on the Resources tab and locate the associated Lambda function.

Note the Physical ID.

Note the bucket where you store the Canary artifacts.

You configure the destination bucket when creating a Canary. If you did not provide a destination bucket, AWS creates one for you. Follow these steps to identify the name:

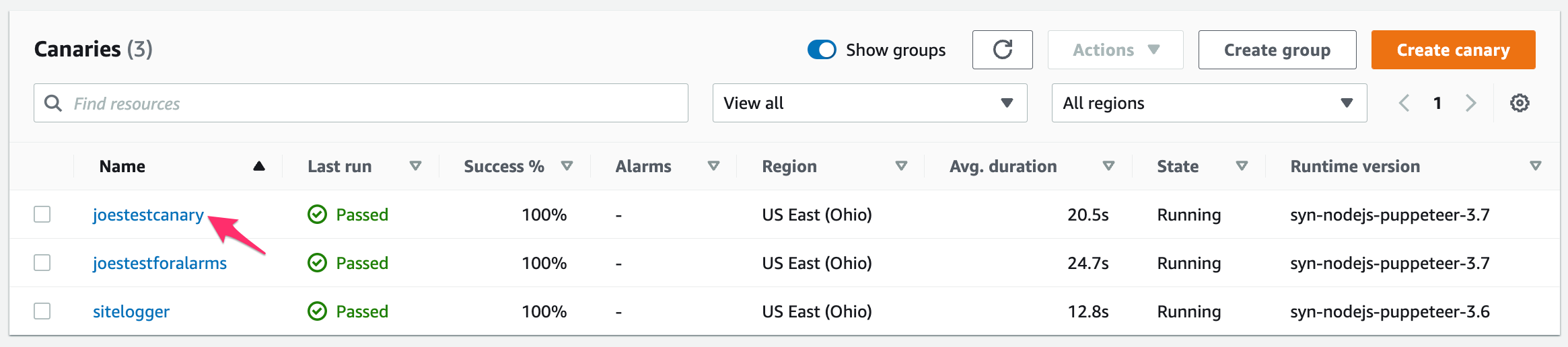

Go to CloudWatch > Synthetic Canaries.

Click on the name of your canary in the Name column.

Figure 2 - Synthetics Canary Panel

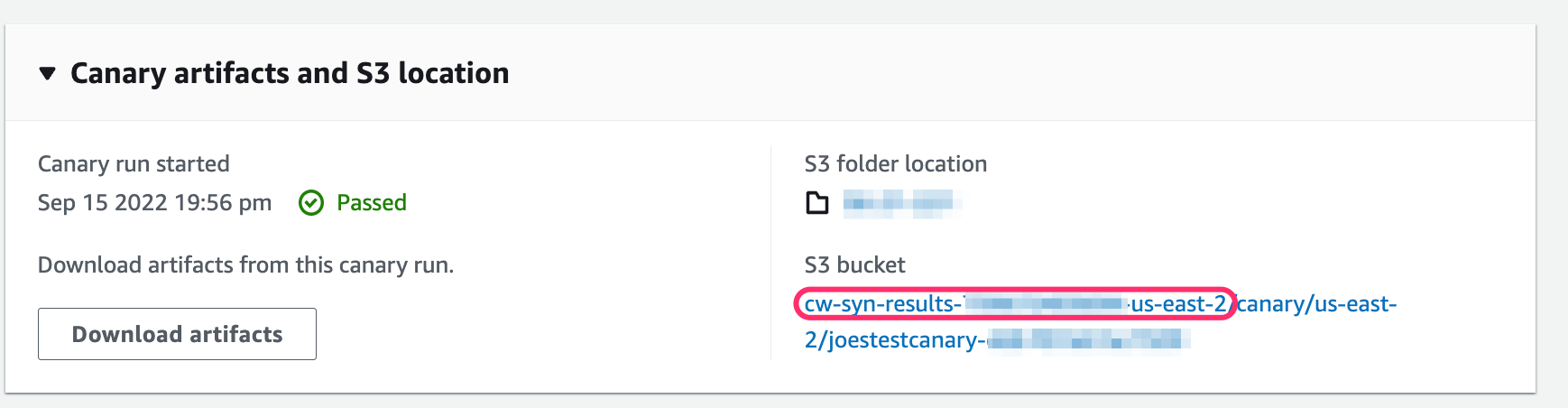

Scroll to the bottom of the Canaries page and expand the Canary artifacts and S3 location section. Note the top-level S3 bucket name.

Figure 3 - Canary artifacts S3 location

Note

Click on the S3 bucket name to open the S3 bucket configuration which you need for creating the Event notification next.

Create the S3 Event Notification¶

This notification allows the Observe Lambda forwarder to identify when new Canary artifact files become available for ingestion. To create a notification, use the following steps:

Navigate to Buckets in the AWS Console.

Search for the top level S3 bucket (identified in the previous step) used for Canary data storage.

Click on the Properties tab.

Scroll down to Event Notifications.

Click on Create event Notification.

Configure the following information:

Event Name: the desired name of this event, such as “Canary artifact creation”

Suffix: .json

Event Types -> Object Creation: Check All object create events.

Destination -> Lambda function: Select your Observe Lambda function.

Click Save Changes.
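The console settings above correspond to an S3 notification configuration document, which can also be applied with the AWS CLI. This is a sketch under assumptions: canary_notification_json is a hypothetical helper, the Id value is an arbitrary example, and the Lambda ARN and bucket name must come from your own account.

```bash
# Emit a notification configuration that invokes a Lambda function for
# every newly created object whose key ends in .json.
# Argument: the ARN of your Observe Lambda function.
# (canary_notification_json is a hypothetical helper name.)
canary_notification_json() {
  lambda_arn="$1"
  cat <<EOF
{
  "LambdaFunctionConfigurations": [
    {
      "Id": "canary-artifact-creation",
      "LambdaFunctionArn": "${lambda_arn}",
      "Events": ["s3:ObjectCreated:*"],
      "Filter": {
        "Key": {
          "FilterRules": [{ "Name": "suffix", "Value": ".json" }]
        }
      }
    }
  ]
}
EOF
}

# Example usage (not executed here):
#   aws s3api put-bucket-notification-configuration \
#     --bucket "$CANARY_BUCKET" \
#     --notification-configuration "$(canary_notification_json "$LAMBDA_ARN")"
```

Note that put-bucket-notification-configuration replaces the bucket's entire notification configuration, so merge in any existing rules first. When using the CLI, S3 must also be permitted to invoke the function, which the console flow typically arranges for you.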

Note

Be sure to add AllowS3Read permissions to your Lambda configuration. See Amazon S3.

How do I use an existing CloudTrail?¶

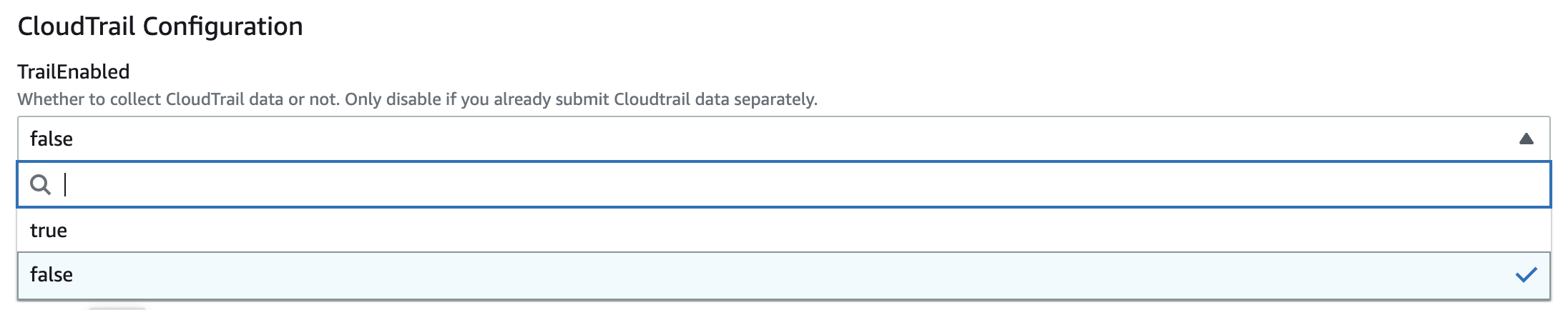

Installing the AWS app automatically creates a CloudTrail for you. To use an existing one instead, override the default value of TrailEnabled and set it to false.

You may do this in the AWS Console, with the AWS CLI, or with Terraform. Then subscribe your existing trail.

Set TrailEnabled to false:

Set TrailEnabled to false while creating the AWS App CloudFormation stack:

Figure 4 - Setting TrailEnabled to false in the AWS Console

Override TrailEnabled in your cloudformation deploy command:

```bash

curl -Lo collection.yaml https://observeinc.s3-us-west-2.amazonaws.com/cloudformation/collection-latest.yaml

aws cloudformation deploy --template-file ./collection.yaml \

--stack-name ObserveLambda \

--capabilities CAPABILITY_NAMED_IAM \

--parameter-overrides ObserveCustomer="${OBSERVE_CUSTOMER?}" ObserveToken="${OBSERVE_TOKEN?}" TrailEnabled=false

```

Override cloudtrail_enable in the observe_collection module:

module "observe_collection" {

source = "github.com/observeinc/terraform-aws-collection"

observe_customer = "${OBSERVE_CUSTOMER}"

observe_token = "${OBSERVE_TOKEN}"

cloudtrail_enable = false

}

Subscribe a trail stored in S3 to the Lambda function

See Adding a Lambda trigger for a bucket to subscribe your existing trail, located in an S3 bucket, to the ObserveLambda function.

If more than one consumer needs to access this trail, instead of subscribing the bucket, use a “new object” notification to notify multiple consumers. For instructions, see Fanout to Lambda functions in the AWS documentation.

How do I reduce Amazon CloudWatch Metrics costs?¶

Reduce your Amazon CloudWatch metrics costs by performing the following steps:

Set the cloudwatch_metrics_exclude_filters Terraform variable to ["*"] or the MetricsEnabled CloudFormation parameter to false.

Use the CloudWatch Metrics Lambda Function to reduce the costs by using one of two options:

Reduce the polling frequency (interval). For example, increasing the polling interval from the default polling frequency of 5 minutes to 10 minutes reduces costs by 50%.

Reduce the number of metrics collected by specifying one or more filters. For example, reducing the number of metrics collected by 50% reduces costs by 50%.
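The polling example above can be worked through for other intervals. The sketch assumes, as the example implies, that cost is proportional to the number of polls; polling_cost_reduction_pct is a hypothetical helper and uses integer arithmetic, so results are truncated to whole percentages of remaining cost.

```bash
# Percent cost reduction when the polling interval grows from
# old_interval to new_interval (same unit, for example minutes).
# Assumption: cost scales linearly with the number of polls.
# (polling_cost_reduction_pct is a hypothetical helper name.)
polling_cost_reduction_pct() {
  old_interval="$1"
  new_interval="$2"
  echo $(( 100 - (100 * old_interval / new_interval) ))
}

# Example usage (not executed here):
#   polling_cost_reduction_pct 5 10
```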

How do I reduce AWS CloudTrail costs?¶

AWS CloudTrail costs can be reduced by disabling CloudTrail.

To disable it, set the TrailEnabled CloudFormation parameter or the cloudtrail_enable Terraform variable to false.

What if I use AWS Control Tower?¶

AWS Control Tower simplifies the AWS experience by orchestrating multiple AWS services on your behalf while maintaining the security and compliance needs of your organization. Observe, Inc. provides a CloudFormation template that reads from your centralized Logging Account. Please contact your Observe Data Engineer for more detail.

How do I add other AWS services?¶

By default, Observe only collects from a subset of APIs:

autoscaling:Describe*

cloudformation:Describe*

cloudformation:List*

cloudfront:List*

dynamodb:Describe*

dynamodb:List*

ec2:Describe*

ecs:Describe*

ecs:List*

eks:Describe*

eks:List*

elasticache:Describe*

elasticbeanstalk:Describe*

elasticloadbalancing:Describe*

events:List*

firehose:Describe*

firehose:List*

iam:Get*

iam:List*

kinesis:Describe*

kinesis:List*

lambda:List*

logs:Describe*

organizations:List*

rds:Describe*

route53:List*

s3:GetBucket*

s3:List*

To add or remove APIs from this list:

Go to CloudFormation in the AWS console.

Select the Observe Stack and click the Update button.

Go to the Parameters tab.

Add the required call to EventBridgeSnapshotConfig (e.g. apigateway:GetDeployments).
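When the parameter value is managed from a script rather than the console, an action can be appended to the comma-separated list without creating duplicates. This is a minimal sketch; include_action and CURRENT_CONFIG are hypothetical names used for illustration.

```bash
# Append an action to a comma-separated snapshot config string,
# leaving the string unchanged if the action is already listed.
# (include_action is a hypothetical helper name.)
include_action() {
  config="$1"
  action="$2"
  # Wrap both sides in commas so only whole entries are compared.
  case ",${config}," in
    *",${action},"*) printf '%s\n' "$config" ;;
    *) printf '%s,%s\n' "$config" "$action" ;;
  esac
}

# Example usage (not executed here), where CURRENT_CONFIG holds the
# existing EventBridgeSnapshotConfig value:
#   include_action "$CURRENT_CONFIG" apigateway:GetDeployments
```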

Update the snapshot_include variable to include the required call (e.g. apigateway:GetDeployments):

module "observe_collection" {

source = "github.com/observeinc/terraform-aws-collection"

observe_customer = ""

observe_token = ""

snapshot_include = ["apigateway:GetDeployments"]

}

Run terraform apply.